News that Baidu, the Google of China, cheated to take the lead in an international competition for artificial intelligence technology has caused a storm among computer science researchers. It has been called machine learning’s “first cheating scandal” by MIT Technology Review, and Baidu is now barred from the competition.

The Imagenet Challenge is a competition run by a group of American computer scientists which involves recognising and classifying a series of objects in digital images. The competition itself is no Turing test, but it is an important challenge, and one of commercial importance to many firms.

The cheating by Baidu was nothing sophisticated, more akin to an initial stolen glimpse at the answers, which was followed by more of the same when it went unnoticed. Even that makes it sound worse than it was. Part of the competition involved looking at the answers anyway: someone in the Baidu team simply did it more than they were officially allowed to.

In their paper about the submission, Baidu themselves weren’t claiming anything more than an engineering advance: they built a large supercomputer that could handle more data than previous implementations. A necessary advance, but very much a “scaling up” of existing solutions — one that would be financially outside the reach of a typical academic research group. They participated in the competition as an attempt to demonstrate that, after such significant investment in hardware, their new supercomputer was able to perform. They have since apologised for breaking the rules of the competition.

In any case, the significant breakthrough in the area had already been achieved by Geoff Hinton’s group at the University of Toronto. They produced the machine learning equivalent of the high jump’s “Fosbury Flop” to win the 2012 version of the competition with such a significant improvement that all leading entries are now derived from their model. That model itself also built on a two-decade-long program of research by Yann LeCun, then of New York University.

Blown out of proportion

The result of Baidu’s entry into the competition was posted as an “e-print” publication. E-prints are articles that are unreviewed. They are a slightly more formal version of a “technical blog post”. The problem was identified by the community quickly, within three weeks, and a corrected version was published. This is science in action.

The “cheating scandal” was labelled as such by the very same prestigious technical publication that broadcast the initial results to its readers within two days of the e-print’s publication: MIT Technology Review.

Singling out MIT Technology Review in this case may be a little unfair, because this is part of a wider phenomenon where technical results are trumpeted in the press before they are fully tasted (let alone digested) by the scientific community. E-print publication is a good thing: it allows ideas to be spread quickly. However, the implications of those ideas need to be understood before they are presented as scientific fact.

Ideally knowledge moves forward through academic consensus, but in practice that consensus itself is swayed by outside forces. This raises questions about who is the ultimate arbiter of academic quality. One answer is opinion: the opinion of those that matter, such as governments, businesses, other scientists or even the press. Success in machine learning has meant it is attracting such attention.

Getting on with it for decades

Ironically, the developments that enabled recent breakthroughs in AI all took place outside of such close scrutiny. In 2004 the Canadian Institute for Advanced Research (CIFAR) funded a far-sighted program of research. An international collaboration of researchers was given the time, intellectual space and money that they needed to make these significant breakthroughs. This collaboration was led by Geoff Hinton, the same researcher whose team achieved the 2012 breakthrough result.

This breakthrough led to all the major internet giants fighting for their pound of academic flesh. Of those researchers involved in CIFAR, Hinton has been hired by Google, Yann LeCun leads Facebook’s AI Research team, Andrew Ng heads up research at Baidu and Nando de Freitas was recently recruited to Google DeepMind, the London start-up that Google lavished £400m on acquiring.

The Baidu cheating case is symptomatic of a big change in the landscape for those who work in machine learning and who drove these advances in AI. Until 2012, ideas from researchers in machine learning were under the radar. They were widely adopted commercially by companies like Microsoft and Google, but they did not impinge much on public consciousness. But two breakthrough results brought these ideas to the fore in the public mind. The Imagenet result by Hinton’s team was one. The other was a program that could learn to play Atari video games. It was created by DeepMind, triggering their purchase by Google.

However, just as Deep Blue’s defeat of Kasparov didn’t herald a dawn in the age of the super-intelligent computer, neither will either of these significant accomplishments. They have not arrived through better understanding of the fundamentals of intelligence, but through more data, more computing power and more experience.

Who follows in whose wake?

These apparent breakthroughs have whetted the appetite. The technical press is becoming susceptible to tabloid sensationalism in this area, but who can blame them as companies and universities ramp up their claims of scientific advance? The advances are something of an illusion: they are the march of technologists following in a scientific wake.

The wake-generators are much harder to identify or track, even for their fellow scientists. But the very real danger is that expectations of significant advance or misunderstanding of the underlying phenomenon will bring about an AI bubble of the type we saw 30 years ago. Such bubbles are very damaging. When high expectations aren’t immediately fulfilled then entire academic domains can be dismissed and far-seeing proposals like CIFAR’s go unfunded.

Even if Baidu’s result were valid, it would have been just the type of workaday scientific development that most of us spend most of our time trying to cook up. It did not merit a pre-publication announcement in MIT Technology Review and the pre-publication withdrawal should have been just a footnote to add to the diverse collection that keeps all astute academics scientifically wary. Rather boringly, the only true marker of scientific advance is repeatability, whether that is within the scientific community or by transfer of ideas to the commercial world.

When reporting on the scandal MIT Technology Review refers to participation in these competitions as a “sport”. I feel sporting analogies give a wrong idea of the spectacle of scientific progress. It is more like watching a painter at work. It is very rare that any single brushstroke reveals the entire picture. And even when the picture is complete, it may only tell us a limited amount about what the next creation will be.

Neil Lawrence is Professor of Machine Learning and Computational Biology at the University of Sheffield.

This article was originally published on The Conversation.

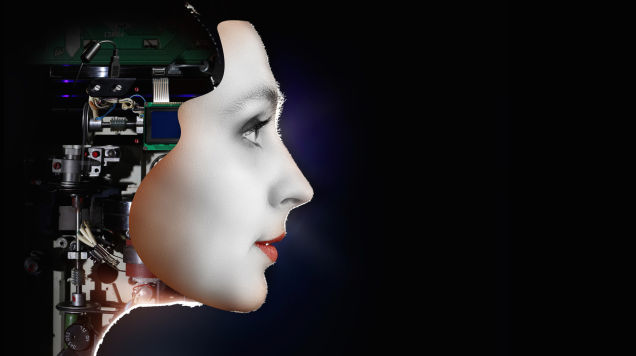

Picture: Shutterstock/Olga Nikonova