A few years ago, I gave a talk about how algorithms and social media shape what we know. I focused on the dangers of the “filter bubble” — the personalised universe of information that makes it into our feed — and argued that news-filtering algorithms narrow what we know, surrounding us in information that tends to support what we already believe. The image at the top is the main slide.

In the talk, I called on Mark Zuckerberg, Bill Gates, and Larry and Sergey at Google (some of whom were reportedly in the audience) to make sure that their algorithms prioritise countervailing views and news that’s important, not just the stuff that’s most popular or most self-validating. (I also wrote a book on the topic, if you’re into that sort of thing.)

Today, Facebook’s data science team has put part of the “filter bubble” theory to the test and published the results in Science, a top peer-reviewed scientific journal. Eytan Bakshy and Solomon Messing, two of the co-authors, were gracious enough to reach out and brief me at some length.

So how did the “filter bubble” theory hold up?

Here’s the upshot: Yes, using Facebook means you’ll tend to see significantly more news that’s popular among people who share your political beliefs. And there is a real and scientifically significant “filter bubble effect” — the Facebook news feed algorithm in particular will tend to amplify news that your political compadres favour.

This effect is smaller than you might think (and smaller than I’d have guessed). On average, you’re about 6 per cent less likely to see content that the other political side favours. Who you’re friends with matters a good deal more than the algorithm.

But it’s also not insignificant. For self-described liberals on Facebook, for example, the algorithm plays a slightly larger role in what they see than their own choices about what to click on. There’s an 8 per cent decrease in cross-cutting content from the algorithm vs a 6 per cent decrease from liberals’ own choices on what to click. For conservatives, the filter bubble effect is about 5 per cent, and the click effect is about 17 per cent — a pretty different picture. (I’ve pulled out some other interesting findings from the study here.)

In the study, Bakshy, Messing and Facebook data scientist Lada Adamic focused on the 10 million Facebook users who have labelled themselves politically. They used keywords to distinguish “hard news” content (about, say, politics or the economy) from “soft news” about the Kardashians. And they assigned each article a score based on the political beliefs of the people who shared it. If only self-described liberals shared an article, it was deemed highly liberal-aligned. (There are some caveats worth paying attention to on this methodology, which I’ve highlighted below.)
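For the code-minded, here is a minimal sketch of what scoring an article by its sharers could look like. It is my illustration, not the study's code: the -1/+1 coding, the function name `alignment_score` and the sharing data are all assumptions made up for the example.

```python
from statistics import mean

# Assumed coding of self-reported affiliation (hypothetical; the study's
# actual scale may differ): -1 is liberal, +1 is conservative.
IDEOLOGY = {"liberal": -1.0, "conservative": 1.0}


def alignment_score(sharer_labels):
    """Average the coded ideology of everyone who shared an article."""
    if not sharer_labels:
        raise ValueError("need at least one sharer")
    return mean(IDEOLOGY[label] for label in sharer_labels)


# Made-up sharing data, for illustration only.
shares = {
    "article_a": ["liberal", "liberal", "liberal"],
    "article_b": ["liberal", "conservative"],
    "unicorn_story": ["conservative", "conservative", "conservative", "liberal"],
}

scores = {name: alignment_score(labels) for name, labels in shares.items()}
```

In this toy version, an article shared only by liberals lands at -1.0 and an evenly shared one at 0.0. Note that the score depends only on who shared the article, never on what the article says.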

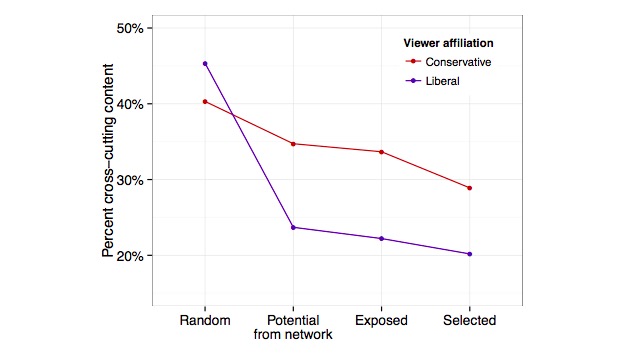

Then they looked at how often liberals saw conservative-aligned content and vice versa. Here’s the key chart:

First (“Random”), this shows the total proportion of hard news links on Facebook if everyone saw a random sample of everything. Liberals would see 45 per cent conservative content, and conservatives would see about 40 per cent liberal content. Second (“Potential from network”), you see the average percentage of cross-cutting articles posted by a person’s friends. Third (“Exposed”) is the percentage that they actually saw; this is where the algorithm comes into play. And fourth (“Selected”) is the percentage that they actually clicked on.

One important thing to note: The slope of this line goes down. At each stage, the amount of cross-cutting content that one sees decreases. The steepest reduction comes from who one’s friends are, which makes sense: If you have only liberal friends, you’re going to see a dramatic reduction in conservative news. But the algorithm and people’s choices about what to click matter a good deal, too.
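To get a feel for how the last two stages stack up, here is a rough back-of-the-envelope sketch. It assumes each percentage quoted earlier in this post (8 and 6 per cent for liberals, 5 and 17 per cent for conservatives) is a reduction relative to the stage before it, so that the stages compound multiplicatively. That is my assumption, not a calculation from the paper, and `cumulative_reduction` is a hypothetical helper.

```python
def cumulative_reduction(stage_reductions):
    """Compound per-stage relative reductions into one overall reduction.

    Assumes each reduction applies to whatever survived the previous stage.
    """
    remaining = 1.0
    for reduction in stage_reductions:
        remaining *= 1.0 - reduction
    return 1.0 - remaining


# Figures quoted in this post, in the order (algorithm, own clicks).
liberals = cumulative_reduction([0.08, 0.06])
conservatives = cumulative_reduction([0.05, 0.17])

print(f"liberals: {liberals:.0%}, conservatives: {conservatives:.0%}")
```

Under that assumption, the algorithm and click stages together trim cross-cutting content by about 14 per cent for liberals and about 21 per cent for conservatives. The friend-network stage is left out because this post gives no figure for it.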

In its press outreach, Facebook has emphasised that “individual choice” matters more than algorithms do — that people’s friend groups and actions to shield themselves from content they don’t agree with are the main culprits in any bubbling that’s going on. I think that’s an overstatement. Certainly, who your friends are matters a lot in social media. But the fact that the algorithm’s narrowing effect is nearly as strong as our own avoidance of views we disagree with suggests that it’s actually a pretty big deal.

There’s one other key piece to pull out. The Filter Bubble was really about two concerns: that algorithms would help folks surround themselves with media that supports what they already believe, and that algorithms would tend to down-rank the kind of media that’s most necessary in a democracy: news and information about the most important social topics.

While this study focused on the first problem, it also offers some insight into the second, and the data there is concerning. Only 7 per cent of the content that Facebook users click on is “hard news”. That’s a distressingly small piece of the puzzle. And it suggests that “soft” news may be winning the war for attention on social media, at least for now.

The conversation about the effects and ethics of algorithms is incredibly important. After all, they mediate more and more of what we do. They guide an increasing proportion of our choices — where to eat, where to sleep, who to sleep with, and what to read. From Google to Yelp to Facebook, they help shape what we know.

Each algorithm contains a point of view on the world. Arguably, that’s what an algorithm is: a theory of how part of the world should work, expressed in maths or code. So while it’d be great to be able to understand them better from the outside, it’s important to see Facebook stepping into that conversation. The more we’re able to interrogate how these algorithms work and what effects they have, the more we’re able to shape our own information destinies.

Some important caveats on the study

That ideological tagging mechanism doesn’t mean what it looks like it means. As the study’s authors would point out — but many people will miss — this isn’t a measure of how partisan-biased the news article or news source is. Rather, it’s a measure of which articles tend to get shared the most by one ideological group or the other. If conservatives like unicorns and there’s content that passes the “hard news” filter about unicorns, that will show up as conservative-aligned — even though the state of unicorn discourse in America is not partisan.

It’s hard to average something that’s constantly changing and different for everyone. This result is true on average during this period of time (July 7, 2014, to Jan. 7, 2015). That’s a period when Facebook video and Trending became much more prominent — and we can’t see what effect that had. (I think the authors would say that the finding’s pretty durable, but given Facebook’s constant reinvention, I’m somewhat more sceptical.)

This only measures the 9 per cent of Facebook users who report their political affiliation. It’s reasonable to assume that they’re a bit different — perhaps more partisan or more activist-y — from the average Facebook reader.

It’s really hard to separate “individual choice” and the workings of the algorithm. Arguably all of the filtering effect here is a function of an individual choice: the choice to use Facebook. On the other hand, the algorithm responds to user behaviour in lots of different ways. There’s a feedback loop here that may differ dramatically for different kinds of people.

In my humble opinion, this is good science, but because it’s by Facebook scientists, it’s not reproducible. The researchers on the paper are smart men and women, and with the caveats above, the methodology is pretty sound. And they’re making a lot of the data set and algorithms available for review. But at the end of the day, Facebook gets to decide what studies get released, and it’s not possible for an independent researcher to reproduce these results without Facebook’s permission.

Eli Pariser is the author of the New York Times bestseller The Filter Bubble: What the Internet is Hiding From You and the co-founder of Upworthy, a website dedicated to drawing attention to important social topics. He’s at @Elipariser on Twitter. This post originally appeared on Medium and is published here with permission.