Sgt. Star is the U.S. Army’s dedicated marketing and recruitment chatbot, and he isn’t going to turn whistleblower any time soon. There’s no use threatening him for answers either — he’s programmed to report that kind of hostility to the Army Criminal Investigation Division.

Last year, EFF began to look at how the government deploys chatbots to interact with and collect information from the public. Sgt. Star was a natural place to start, since he’s almost famous. Serving as the Army’s virtual public spokesperson, each year he guides hundreds of thousands of potential recruits through goarmy.com and fields their questions on Facebook.

(On the Media’s TLDR recorded an informative and entertaining podcast about Sgt. Star, our research and the issues AI chatbots raise — listen here.)

Since Sgt. Star wasn’t going to tell us everything he knows without us breaking it down into a thousand simple questions, we decided to just use the Freedom of Information Act to get it all at once. At first the Army ignored our inquiries, but with a little digging and pressure from the media[1], we have been able to piece together a sort of personnel file for Sgt. Star.

We now know everything that Sgt. Star can say publicly as well as some of his usage statistics. We also learned a few things we weren’t supposed to: Before there was Sgt. Star, the FBI and CIA were using the same underlying technology to interact with child predators and terrorism suspects on the Internet. And, in a bizarre twist, the Army claims certain records don’t exist because an element of Sgt. Star is “living.”

Everything We Know About Sgt. Star

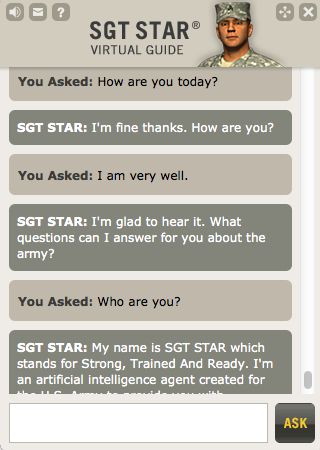

Chatbots are computer programs that can carry on conversations with human users, often through an instant-message style interface. To put it another way: Sgt. Star is what happens when you take a traditional “FAQ” page and inject it with several million dollars’ worth of artificial intelligence upgrades.

Sgt. Star’s story dates back to the months after the 9/11 attacks, when the Army was experiencing a 40 per cent year-over-year increase in traffic to the chatrooms on its website, goarmy.com. By the time the U.S. invaded Iraq, analysts predicted that the annual cost to staff the live chatrooms would be as high as $US4 million.

A cost-cutting solution presented itself in late 2003 in the form of an artificial intelligence program called ActiveAgent, developed by a Spokane, Washington-based tech firm called Next IT. After years of trial runs and focus groups, the Army debuted Sgt. Star[2] in 2006.

Technology and law scholars, such as Ryan Calo of the University of Washington School of Law and Ian Kerr of the University of Ottawa Faculty of Law, have warned of the threats to privacy posed by bots that combine social manipulation with mass data gathering. As Calo wrote of Sgt. Star in his paper, “Peering HALs: Making Sense of Artificial Intelligence and Privacy”:

As in the context of data mining, a computer equipped with artificial intelligence is capable of engaging thousands of individuals simultaneously, twenty-four hours a day. But here the agent is able to leverage the power of computers to persuade via carefully orchestrated social tactics known to elicit responses in humans. In an age of national security and targeted advertising, citizen and consumer information is at an all time premium. Techniques of AI and HCI [Human-Computer Interaction] create the opportunity for institutions to leverage the human tendency to anthropomorphise and other advantages computers hold over humans (ubiquity, diligence, trust, memory, etc.) to facilitate an otherwise impracticable depth and breadth of data collection.

Through a FOIA request, we were able to quantify Sgt. Star’s reach. According to a spreadsheet provided by the Army, Sgt. Star does the work of 55 human recruiters. Over the last five years, he has answered 10.5 million questions (94 per cent of them correctly) in 2.8 million chat sessions. That means, on average, that Sgt. Star is engaging more than 1,550 people each day.

Sgt. Star is currently run by the Army Marketing and Research Group, a division created in 2012 that operates out of the Pentagon. According to contracting documents, as of 2011, the Army had spent $US5 million on the program, plus another couple of million in maintenance contracts[3] in the years since.

The Army does not have a privacy policy specific to Sgt. Star, but officials say they are not tracking cookies or retaining personal information beyond IP address traffic to goarmy.com. But Sgt. Star is recording the questions he’s asked, which the Army acknowledged in an interview with TLDR are often more personal than what someone would ask a real recruiter (for example, a potential recruit might be afraid he’ll be seen as “soft” if he asks if he has to shower in front of other people or if there are jobs where he won’t have to be deployed).

The Army also revealed to TLDR that Sgt. Star is not so smart that he can increase his own knowledge base. Instead, the Army and Next IT hold monthly meetings to assess and adjust how Sgt. Star responds to questions.

While Sgt. Star may seem innocuous enough, he provides a window into how other agencies may use the technology for far more invasive purposes.

Sgt. Star’s Family

You Asked: Are there other government chatbots?

Sgt Star: I’m here to help you navigate through goarmy.com and answer your questions about the Army. I’m not here to discuss politics.

Sgt. Star has a large family of “intelligent virtual assistants” at Next IT. His brother Spike assists incoming students at Gonzaga University. Ann helps health consumers at Aetna. Travellers interact (and flirt) with Jenn at Alaska Airlines, Alex at United Airlines, and Julie at Amtrak. Next IT’s newest addition is Alme, a healthcare AI designed to help physicians interface with patients. But so far, Sgt. Star is the only federal government chatbot acknowledged on Next IT’s website.

Secretly, however, Sgt. Star does have family at law enforcement and intelligence agencies. According to an inadequately redacted document publicly available on the federal government’s contracting site, FBO.gov, Sgt. Star is built on technology developed for the FBI and CIA more than a decade ago to converse with suspects online. From the document:

LTC Robert Plummer, Director, USAREC PAE, while visiting the Pacific Northwest National Laboratories (PNNL) in late 2003, discovered an application developed by NextIt Corporation of Spokane, WA, that PNNL identified for the FBI AND CIA. The application used chat with an underlying AI component that replicated topical conversations. These agencies were using the application to engage PEDOPHILES AND TERRORISTS online, and it allowed a single agent to monitor 20-30 conversations concurrently.

The text shown in capitals was redacted, but still legible in the document. At this point we don’t know whether the CIA and FBI are still using these bots.[4] That will likely take a much longer FOIA process and, considering the redaction, the agencies may not be willing to give up the information without a fight.

Some food for thought: Sgt. Star engaged in almost 3 million conversations over the last five years, and those were people who actually wanted to talk to him. How many people could two CIA and FBI covert bots converse with over 10 years? What happens to conversations that aren’t relevant to an investigation, and how do the agencies weed out the false positives, such as when a chatbot misinterprets a benign conversation as dangerous?

We also know that Next IT developed an artificial intelligence program called ActiveSentry[5], specifically designed to identify and neutralize threats to data security within a computer network. According to promotional materials, ActiveSentry’s origin story is very similar to Sgt. Star’s:

Our solution, ActiveSentry, is evolved from a proven technology we developed that has been deployed by government agencies to monitor Internet Relay Chat (IRC) rooms for such suspicious behaviour as potential threats to national security, child exploitation, and drug trafficking.

ActiveSentry is not a chatbot, but a kind of patrol guard, watching keystrokes and conversations, monitoring network activity, looking for employee misbehaviour and then springing into action. Here’s how Next IT’s founder and CEO described its investigative and evidence-gathering capabilities in a presentation at a homeland security technology showcase:

If bad behaviour occurs, ActiveSentry can respond with real-time proactive steps to block the action. It might shut down the computer, shut down the application, alarm a security officer, or give the user a warning. If the security breach is sufficient, ActiveSentry creates a detailed record of everything the user does, stores all the action with date/time stamps, and secures the data for foolproof evidentiary material.

So far, we are unaware of any criminal cases where information gathered by a Next IT bot has been used as evidence. If you do know of such a case, please get in touch with us.

Is Sgt. Star Alive?

You Asked: Are you alive?

SGT STAR: I am a dynamic, intelligent self-service virtual guide, created by the Army, that will help you quickly navigate the GoArmy Web site and retrieve Army-related subject matter, while simultaneously answering questions and directing communication based on interaction with the user.

Generations upon generations of folklorists, philosophers, futurists and science fiction authors have asked the question: at what point does a machine become a living being?

Sorry, we can’t settle that debate. That said, the Army’s FOIA office did use some rather strange language in describing one piece of Sgt. Star’s programming.

In our request, we sought his output script (every possible response in his database) as it stands now, but also for each year since he went live. That way, we could compare how his answers have evolved and grown through military policy changes, such as the end of Don’t Ask, Don’t Tell and the lifting of the ban on women in combat. The Army gave us the 835 current responses, but could not give us the rest. Apparently, the historical scripts don’t exist because the script is a “living body.” The Army’s exact words in describing the spreadsheet of 835 responses:

This collection is the current running content that appears on the site and represents a “living body” of content that reflects the additions and updates to answers that have evolved since the introduction of the Sgt. Star feature.

Do they mean “living” in the way the Constitution is said to be a living document? Or are they using it in the sense of Johnny 5 from Short Circuit? We plan to file a FOIA appeal to learn more. Likely what they mean is that they don’t maintain older versions of Sgt. Star’s script because they constantly update a single file. If that’s the case, it indicates poor record-keeping by the Army. If the FBI treats its bots in the same way, that would raise serious questions about the ability of defendants to challenge the reliability of a bot if they are charged with a crime after the bot’s programming has changed.

The Army also refused to provide us with Sgt. Star’s input patterns, i.e. all the phrases and keywords that he is programmed to recognise, claiming that this information is Next IT’s proprietary material and therefore exempt from FOIA. We will address this in our FOIA appeal; we are not seeking Sgt. Star’s underlying off-the-shelf ActiveAgent code, but we would like to see the customisation details that give Sgt. Star his distinct Army flavour. It’s also worth knowing what Sgt. Star is programmed to flag, even if he’s not programmed to actually respond to it.

Everything Sgt. Star Knows

You can view all of Sgt. Star’s current responses here. It’s 288 pages.

Sgt. Star has a seemingly exhaustive supply of answers to questions about military service, from opportunities for dentists and veterinarians to whether soldiers are allowed to use umbrellas (only women and under certain conditions). He also has answers that simply exist to deepen his personality, such as his music and film preferences, and information about his Rottweiler, “Chomp.” He will also deliver rather in-depth, scientific answers to throwaway questions, including “why is the sky blue?” and “why is grass green?”

For all his character quirks, a user would never mistake Sgt. Star for human — that’s just not how he was designed. That can’t necessarily be said for other government bots. Military, law enforcement and intelligence agencies have employed virtual people capable of interacting with and surveilling the public on a massive scale, and every answer raises many, many more questions.

You Asked: Until next time.

SGT STAR: Goodbye.

NOTES

1. Special thanks to Alex Goldman of On The Media for following up with the Army, and journalist Jason Leopold for firing off a supporting FOIA request.

2. Technically, he is SGT STAR, with STAR as an acronym for “Strong, Trained And Ready,” which Sgt. Star says all soldiers must be before being assigned to a unit or deployed. However, in the development stages, the program used the name “Sgt. Rock.”

3. While the Army does work directly with Next IT, it has also contracted out maintenance to two defence contractors — Nakuuruq Solutions and Truestone Communications — both subsidiaries of a corporation owned by the Iñupiat people of Northwest Alaska.

4. Next IT is no longer the only company offering pedophile-hunting chatbots. In 2004, a British programmer introduced a product he called “NetNannies.” Last year, Spanish researchers announced another AI, called Negobot.

5. ActiveSentry is now marketed by Next IT’s affiliate, NextSentry Corporation.