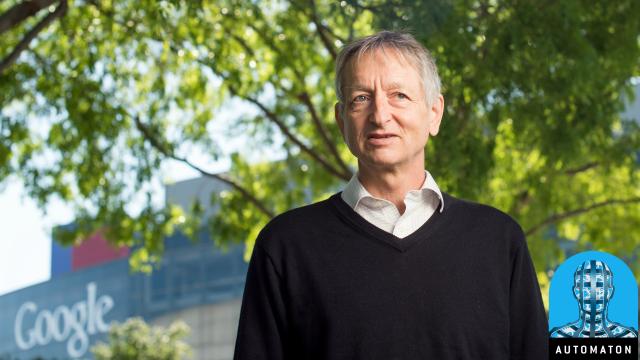

Martin Ford made waves with his 2015 book, Rise of the Robots, which details the many accelerating trends in automation and how they’re slated to impact business and, especially, employment. For his next book, Architects of Intelligence: The Truth About AI from the People Building It, he, well, attempts to home in on precisely what that subtitle describes. It’s stuffed with in-depth interviews with the biggest names in AI. One of those is Geoffrey Hinton. Currently a professor of computer science at the University of Toronto and a part of the Google Brain project, Hinton is considered by many in his field to be the ‘godfather of deep learning,’ due to his pioneering work in artificial neural networks.

Below, find an excerpt from Architects of Intelligence in which the godfather of deep learning considers the economic and social ramifications of the ascendant systems he has pioneered. Enjoy. — Brian Merchant

MARTIN FORD: Let’s talk about the potential risks of AI. One particular challenge that I’ve written about is the potential impact on the job market and the economy. Do you think that all of this could cause a new Industrial Revolution and completely transform the job market? If so, is that something we need to worry about, or is that another thing that’s perhaps overhyped?

GEOFFREY HINTON: If you can dramatically increase productivity and make more goodies to go around, that should be a good thing. Whether or not it turns out to be a good thing depends entirely on the social system, and doesn’t depend at all on the technology. People are looking at the technology as if the technological advances are a problem. The problem is in the social systems, and whether we’re going to have a social system that shares fairly, or one that focuses all the improvement on the 1% and treats the rest of the people like dirt. That’s nothing to do with technology.

MARTIN FORD: That problem comes about, though, because a lot of jobs could be eliminated—in particular, jobs that are predictable and easily automated. One social response to that is a basic income. Is that something that you agree with?

GEOFFREY HINTON: Yes, I think a basic income is a very sensible idea.

MARTIN FORD: Do you think, then, that policy responses are required to address this? Some people take the view that we should just let it play out, but that’s perhaps irresponsible.

GEOFFREY HINTON: I moved to Canada because it has a higher taxation rate and because I think taxes done right are good things. What governments ought to do is put mechanisms in place so that when people act in their own self-interest, it helps everybody. High taxation is one such mechanism: When people get rich, everybody else gets helped by the taxes. I certainly agree that there’s a lot of work to be done in making sure that AI benefits everybody.

MARTIN FORD: What about some of the other risks that you would associate with AI, such as weaponization?

GEOFFREY HINTON: Yes, I’m concerned by some of the things that President Putin has said recently. I think people should be very active now in trying to get the international community to treat weapons that can kill people without a person in the loop the same as they treat chemical warfare and weapons of mass destruction.

MARTIN FORD: Would you favour some kind of a moratorium on that type of research and development?

GEOFFREY HINTON: You’re not going to get a moratorium on that type of research, just as you haven’t had a moratorium on the development of nerve agents, but you do have international mechanisms in place that have stopped them being widely used.

MARTIN FORD: What about other risks, beyond the military weapon use? Are there other issues, like privacy and transparency?

GEOFFREY HINTON: I think using it to manipulate elections and to manipulate voters is worrying. Cambridge Analytica was set up by Bob Mercer, who was a machine learning person, and you’ve seen that Cambridge Analytica did a lot of damage. We have to take that seriously.

MARTIN FORD: Do you think that there’s a place for regulation?

GEOFFREY HINTON: Yes, lots of regulation. It’s a very interesting issue, but I’m not an expert on it, so I don’t have much to offer.

MARTIN FORD: What about the global arms race in general? Do you think it’s important that one country doesn’t get too far ahead of the others?

GEOFFREY HINTON: What you’re talking about is global politics. For a long time, Britain was a dominant nation, and they didn’t behave very well, and then it was America, and they didn’t behave very well, and if it becomes the Chinese, I don’t expect them to behave very well.

MARTIN FORD: Do you think we should have some form of industrial policy? Should the United States and other Western governments focus on AI and make it a national priority?

GEOFFREY HINTON: There are going to be huge technological developments, and countries would be crazy not to try and keep up with that, so obviously, I think there should be a lot of investment in it. That seems common sense to me.

MARTIN FORD: Overall, are you optimistic about all of this? Do you think that the rewards from AI are going to outweigh the downsides?

GEOFFREY HINTON: I hope the rewards will outweigh the downsides, but I don’t know whether they will, and that’s an issue of social systems, not of the technology.

MARTIN FORD: There’s an enormous talent shortage in AI and everyone’s hiring. Is there any advice you would give to a young person who wants to get into this field, anything that might help attract more people and enable them to become expert in AI and in deep learning, that you can offer?

GEOFFREY HINTON: I’m worried that there may not be enough people who are critical of the basics. The idea of Capsules is to say, maybe some of the basic ways we’re doing things aren’t the best way of doing things, and we should throw a wider net. We should think about alternatives to some of the very basic assumptions we’re making. The one piece of advice I give people is that if you have intuitions that what people are doing is wrong and that there could be something better, you should follow your intuitions.

You’re quite likely to be wrong, but unless people follow the intuitions when they have them about how to change things radically, we’re going to get stuck. One worry is that I think the most fertile source of genuinely new ideas is graduate students being well advised in a university. They have the freedom to come up with genuinely new ideas, and they learn enough so that they’re not just repeating history, and we need to preserve that. People doing a master’s degree and then going straight into the industry aren’t going to come up with radically new ideas. I think you need to sit and think for a few years.

MARTIN FORD: There seems to be a hub of deep learning coalescing in Canada. Is that just random, or is there something special about Canada that helped with that?

GEOFFREY HINTON: The Canadian Institute for Advanced Research (CIFAR) provided funding for basic research in high-risk areas, and that was very important. There’s also a lot of good luck in that both Yann LeCun, who was briefly my postdoc, and Yoshua Bengio were also in Canada. The three of us could form a collaboration that was very fruitful, and the Canadian Institute for Advanced Research funded that collaboration. This was at a time when all of us would have been a bit isolated in a fairly hostile environment (the environment for deep learning was fairly hostile until quite recently), so it was very helpful to have this funding that allowed us to spend quite a lot of time with each other in small meetings, where we could really share unpublished ideas.

Excerpted with permission from Architects of Intelligence: The Truth about AI from the People Building It by Martin Ford. Published by Packt Publishing Limited. Copyright (c) 2018. All rights reserved. This book is available at amazon.com.