There wasn’t anything particularly unusual about the court-martial at the Fort Huachuca military base in Arizona at the end of February. But when the analyst from the Department of Defense forensic laboratory presented a report on fingerprint evidence, it included an element that had never been used with fingerprint evidence in a US courtroom before: a number.

That number, produced by a software program called FRStat, told the court the probability that the similarity between the two fingerprints in question would be seen in two prints from the same person. Basic as it may sound, using any empirical or numerical evidence in fingerprint analysis is a major addition to a discipline that typically relies only on the interpretation of an individual expert, which leaves it open to criticism. Fingerprint evidence isn’t infallible and, like a lot of forensic science, has contributed to high-profile wrongful convictions.

A landmark report published in 2009 by the National Academy of Sciences highlighted the lack of scientific foundation for fingerprint evidence, as well as for other commonly used types of forensic evidence, like bite marks and bloodstain patterns. This isn’t to say that fingerprints aren’t useful in the justice system. But they aren’t entirely reliable, and in current print-analysis practice, there’s no place to signal that uncertainty to an attorney, judge, or jury.

Using statistics and probabilities to bolster fingerprint results and signal the weight of the evidence isn’t a new idea, but this is the first time such a tool has actually been put in the hands of fingerprint examiners. FRStat was developed by Henry Swofford, chief of the latent print branch at the US Army Criminal Investigation Laboratory, part of the Department of Defense. “We’re the first lab in the United States to report fingerprint evidence using a statistical foundation,” Swofford said.

Swofford has been developing and validating his program for about four years, and the Fort Huachuca case marked the first time in the US that the program, or any statistical tool, had been used in a courtroom. A paper describing the statistical model behind the software was published at the beginning of April. The team at the Defense Forensic Science Center is still looking for the best way to distribute the program, but for now, it’s freely available to labs that are interested in testing it out.

Adding an element of quantitative analysis to fingerprint identification is positive progress for forensic science, which struggles, overall, to live up to the “science” side of its name. Implementing the program, though, requires a significant culture change in a field that has remained largely the same for decades, if not a century, posing additional challenges for people like Swofford who are pushing for progress.

Fingerprint analysts, tasked with collecting and analysing evidence from crime scenes, work in police departments and state forensics labs across the country. Some are officers and detectives pulling double duty as forensic scientists; others are specialists, employed by dedicated crime labs.

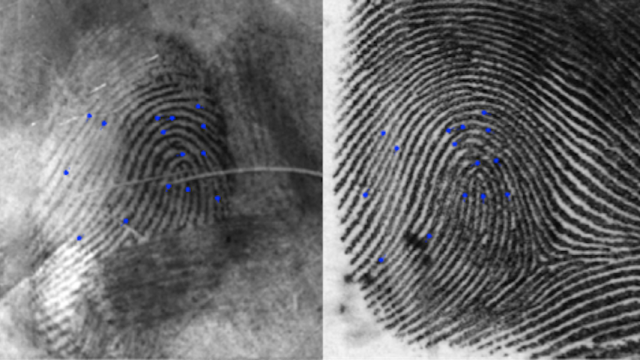

Faced with two prints, analysts determine if they came from the same source by marking small features in the loops and ridges of a fingerprint, called minutiae, and then deciding if the features correspond well enough to report a match. The result is often reported as a yes-or-no binary: either the prints came from the same source, or they didn’t.

But fingerprints are messy. They can be smudged, or only partially left on the edge of a doorknob or countertop. The ridges themselves also sit on actual, three-dimensional skin, so what analysts are looking at is only a two-dimensional impression of the original. “We lose information all the time, from 3D to 2D,” explained Lauren Reed, director of the US Army Criminal Investigation Laboratory and a former fingerprint analyst. Furthermore, it’s never been scientifically proven that fingerprints are actually unique to individuals. The few research studies that have been done on fingerprint analysis show a high rate of false matches, and examiners can be swayed in one direction or another if they’re given contextual information about a case.

Despite that built-in uncertainty, though, examiners generally don’t say how confident they are in their conclusions, or how strong their evidence is. “They often give the impression that it’s 100 per cent certain,” Reed said. That’s different from things like medical tests or political polls, which report results alongside margins of error to show how conclusive the finding actually is. “While the fingerprint community, as a whole, is pretty good, we’re not perfect,” she said. “We owe it to the people we serve to quantify what the strength of our evidence actually is.”

According to the Innocence Project, testimony that doesn’t signal the limitations of a tool like fingerprinting, or that overstates the certainty of evidence, is one of the reasons that problems with forensic evidence contribute to nearly half of wrongful convictions.

It was aggravating to Swofford that the purported “science” he worked in had so many scientific gaps. He started building FRStat to address those concerns and to put the evidence coming out of the lab on an empirical foundation.

“I want to strengthen the foundations of our science,” he said. “I also want to make sure the evidence is presented in a way that the guilty people are convicted, and the innocent are exonerated.”

To use FRStat, fingerprint examiners still mark up the minutiae of an unknown and a known fingerprint. Then they feed the images into the program, which compares those flagged points to a database of similarly marked prints known to come from the same source. The software reports the likelihood that you would see that level of similarity in prints that it knows came from the same source.
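The comparison step can be illustrated with a minimal Python sketch. It is not FRStat’s actual model; the similarity measure, the function names `similarity_score` and `same_source_tail_probability`, and the reference scores are all hypothetical. The sketch assumes an examiner has already marked and paired corresponding minutiae as (x, y) points, turns their agreement into a single score, and then asks what fraction of known same-source comparisons scored that low or lower.

```python
from bisect import bisect_right
from math import hypot

# Hypothetical representation: a minutia is just an (x, y) position.
# Real systems also record ridge direction and minutia type.
Minutia = tuple[float, float]


def similarity_score(unknown: list[Minutia], known: list[Minutia]) -> float:
    """Hypothetical score for minutiae an examiner has already paired up
    (unknown[i] corresponds to known[i]).

    Returns 1 / (1 + mean distance between paired points): identical
    configurations score 1.0, and the score falls toward 0 as they diverge.
    """
    if len(unknown) != len(known) or not unknown:
        raise ValueError("need the same, non-zero number of paired minutiae")
    total = sum(hypot(ux - kx, uy - ky)
                for (ux, uy), (kx, ky) in zip(unknown, known))
    return 1.0 / (1.0 + total / len(unknown))


def same_source_tail_probability(observed: float,
                                 reference_scores: list[float]) -> float:
    """Fraction of reference same-source comparisons scoring at or below
    `observed`: how often prints known to share a source look this
    similar, or less similar."""
    if not reference_scores:
        raise ValueError("reference_scores must not be empty")
    ranked = sorted(reference_scores)
    return bisect_right(ranked, observed) / len(ranked)


if __name__ == "__main__":
    unknown = [(0.0, 0.0), (10.0, 0.0), (0.0, 10.0)]
    known = [(1.0, 0.0), (10.0, 1.0), (0.0, 10.0)]
    # Made-up scores standing in for a database of same-source comparisons.
    reference = [0.45, 0.55, 0.65, 0.75, 0.85]
    score = similarity_score(unknown, known)
    tail = same_source_tail_probability(score, reference)
    print(f"score={score:.2f}, same-source tail probability={tail:.2f}")
```

Run as-is, it prints a score of 0.60 and a tail probability of 0.40: two of the five made-up same-source comparisons were no more similar than this pair. A very small value would mean the pair looks less alike than prints from the same finger usually do, which is a graded statement rather than a flat yes or no.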

Importantly, Swofford said, the numerical score allows analysts to report the strength of the evidence in their results. It removes the all-or-nothing quality from the process and gives a more realistic picture of the value of the evidence, he said.

Swofford partnered with statisticians and other experts to develop and test his program, a highly unusual move for a forensic analyst, said Karen Kafadar, chair of the American Statistical Association’s Advisory Committee on Forensic Science, who consulted on the project. Fingerprint examiners are trained to view what they do as extremely specialised, and not something that they should discuss with outsiders, even though chemists, biologists, and statisticians can lend a lot of value, she said. “To Henry’s credit, he appreciated the contributions that we could make.”

This program isn’t the first time statistical modelling has been proposed or developed for fingerprinting. However, past work has primarily remained in academic circles, and building a tool for practical use wasn’t the objective of most researchers; the projects were just scholarly exercises.

FRStat is the first crossover from theory to practice, made possible by the flexibility, funding, and relatively low caseload at the Department of Defense lab. “As an organisation, we’ve been pretty committed to doing research and development,” Reed said. “This was kind of a no-brainer: if we could work on it, we feel obligated to.” The DoD lab sees about 3,000 fingerprint cases a year, Reed said, and they saw the project as an opportunity to make their methods for those cases more transparent and scientifically honest.

The program isn’t a perfect solution to the issues with pattern evidence, Kafadar noted. Programs like FRStat can’t create empirical certainty around fingerprint analysis. Fingerprints aren’t like DNA, which has only a limited number of possible variants at each location. One stretch of DNA, for example, might have 200 possible combinations of genetic code, which makes it easier to calculate the probability that two people share the same combination at that particular location. The identifying markers on fingerprints (the ridges, lines, and branches) are more subjective and harder to pinpoint.
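The DNA side of that contrast comes down to simple arithmetic. Because each location has a countable set of variants with measured population frequencies, the frequencies at independent locations can be multiplied (the product rule) to estimate how likely an unrelated person is to share a profile. The Python sketch below assumes the locations are independent and uses made-up frequencies; the function name `random_match_probability` is illustrative, and real casework draws on population databases and adjustments the sketch leaves out.

```python
from math import prod


def random_match_probability(genotype_frequencies: list[float]) -> float:
    """Product rule: multiply the population frequency of the observed
    genotype at each location, assuming locations are independent."""
    for f in genotype_frequencies:
        if not 0.0 < f <= 1.0:
            raise ValueError("each frequency must be in (0, 1]")
    return prod(genotype_frequencies)


if __name__ == "__main__":
    # Hypothetical genotype frequencies at three locations (not real data).
    print(random_match_probability([0.1, 0.05, 0.02]))
    # Hypothetical: three locations, each with 200 equally common combinations.
    print(random_match_probability([1 / 200] * 3))
```

With the made-up frequencies the result is about 1 in 10,000, and with 200 equally common combinations at each of three locations it is 1 in 8,000,000. Fingerprint ridges offer no comparable countable categories to multiply.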

FRStat still relies on analysts’ subjective identification of the ridges and lines on the print, and again, there’s still no certainty that fingerprints are actually unique to individuals. “It’s a hard problem to solve,” Kafadar said. “But this is the best we’re able to do right now.”

There are also multiple ways to establish probability scores for prints, and people may disagree on the best approach to take, said Cedric Neumann, a professor of mathematics and statistics at South Dakota State University, who published his own model in 2012.

However, he said that building a program that puts the statistical tools into the hands of fingerprint analysts is a positive step, regardless.

“That way, the community can start playing with it, and figuring out the best way to use the results,” Neumann said. “These are discussions the community needs to have, and it’s very good that the software exists to enable those discussions.”

The forensic laboratory in Connecticut, which handles all forensic testing, including fingerprints, for the state, is one of the first labs trying out the program. “We’re always looking for ways to improve our reporting,” said Cindy Lopes-Phelan, deputy director of identification in the Division of Scientific Services at the lab. “Especially with the critiques out there, we want to find ways our conclusions can be supported with technology.”

The lab is validating FRStat, making sure that it actually does what it says it does. So far, Lopes-Phelan said, it looks good. Throughout the process, she’s making sure that all of the analysts are exposed to the program.

“It’s a culture shock, to start using mathematical computations,” Lopes-Phelan said. “We want to give them a comfort level, and show that this is not a scary thing, and that it’s maths, but it’s supporting what they’re doing.”

Fingerprint examiners often have a degree in a science (though it’s not required) and take training courses focused on pattern recognition and courtroom testimony. Statistics aren’t part of that training, so an element of discomfort was expected, Swofford said. “You just needed to differentiate patterns. Integrating something like this, that sounds complex, can be overwhelming.” To preempt the problem at the Department of Defense labs, the team rolled out the software over two years, gradually introducing analysts to the statistical language.

Analysts also might balk at the idea that statistical programs could limit their decision-making power, Reed said. “It’s a major point of tension.” For example, there’s a grey area where some examiners might reach a decision on a set of prints while others would call them inconclusive. Examiners might push back against a system that removes that choice. “But science isn’t just the particular analyst’s opinion,” she said.

Implementing FRStat also doesn’t make fingerprint examiners obsolete or take away their jobs, Reed stressed: they still compare prints, analyse minutiae, and come to a decision. The program is just an added step to give them more information about the strength of their conclusions.

Neumann thinks that software like FRStat is the natural next step in fingerprinting, and eventually, it will be the standard of practice. There are two ways examiners are going to approach the change, he said: “There are those who are accepting it as inevitable, and then the older generation, who think that they will be able to retire before they have to deal with it.”

A key hurdle for the transition, though, is ensuring that the software’s results will be admissible in court. The evidence reported by FRStat wasn’t challenged in the Fort Huachuca court-martial, and the program will be in a bit of judicial limbo until it is. Expert testimony and forensic science presented in court can be scrutinised under either the Frye test, which asks whether a scientific technique is generally accepted in the relevant scientific community, or the Daubert standard, which asks whether an expert’s testimony rests on a reliable methodology, with general acceptance as one factor among several. Lopes-Phelan, for one, said that the Connecticut lab will probably wait for that precedent before reporting its own evidence using FRStat.

That element is frustrating to Swofford, who thinks that forensic analysts are often too focused on the litigation, and not enough on the science. “People in forensics often still prioritise admissibility in court, and forget to ask if it meets the tenets of science.”

The slate of roadblocks is why, even if the program is available, and even though it improves the scientific validity of fingerprint analysis, analysts may be slow or reluctant to adopt it. “Ultimately, lab directors have to be willing to have this forward-thinking mindset,” Swofford said.

That a statistical tool will be available as an easy-to-use software program, though, means that the field will be forced to debate and grapple with it, he said.

The US Army Criminal Investigation Laboratory is still trying to determine the best way to release and distribute the software. “We’re not software developers,” Reed said. They might partner with a software company, which would take over the program and market it. “With that, one thing we would push for is for it to be affordable,” Reed said. Cost is an issue for forensic labs, many of which don’t have large budgets. Another option, Swofford suggested, would be to release the program for free, with the code open and available.

But despite all the challenges, Swofford is confident that FRStat can lead to progress. “It’s an odd feeling, being done. It feels like we’re safe now. I’ve done my job in strengthening the results we’re putting out of our lab,” he said. “Now, it’s about bringing the rest of the community on board.”

Nicole Wetsman is a health and science reporter based in New York. She tweets @NicoleWetsman.