If you use Google’s new Photos app, Microsoft’s Cortana, or Skype’s new translation function, you’re using a form of AI on a daily basis. AI was first dreamed up in the 1950s but has only recently become a practical reality, largely thanks to software systems called neural networks. This is how they work.

Making Computers Smarter

Plenty of things that humans find difficult can be done in a snap by a computer. Want to solve a partial differential equation? No problem. How about creating accurate weather forecasts or scouring the internet for a single web page? Piece of cake. But ask a computer to tell you the difference between porn and Renaissance art? Or whether you just said “night” or “knight”? Good luck with that.

Computers just can’t reason in the same way humans do. They struggle to interpret the context of real-world situations or make the nuanced decisions that are vital to truly understanding the human world. That’s why neural networks were first developed, way back in the 1950s, as a potential solution to that problem.

Taking inspiration from the human brain, neural networks are software systems that can train themselves to make sense of the human world. They use different layers of mathematical processing to make ever more sense of the information they’re fed, from human speech to a digital image. Essentially, they learn and change over time. That’s why they provide computers with a more intelligent and nuanced understanding of what confronts them. But it’s taken a long time to make that happen.

The Winter of Neural Networks

Back in the 1950s, researchers didn’t know how the human brain was intelligent — we still don’t, not exactly — but they did know that it was smart. So, they asked themselves how the human brain works, in the physical sense, and whether it could be mimicked to create an artificial version of that intelligence.

The brain is made up of billions of neurons, long thin cells that link to each other in a network, transmitting information using low-powered electrical charges. Somehow, out of that seemingly straightforward biological system, emerges something much more profound: the kind of mind that can recognise faces, develop philosophical treatises, puzzle through particle physics, and so much more. If they could recreate this biological system electronically, engineers figured, an artificial intelligence might emerge too.

There were some successful early examples of artificial neural networks, such as Frank Rosenblatt’s Perceptron, which used analogue electrical components to create a binary classifier. That’s fancy talk for a system that can take an input, say a picture of a shape, and classify it into one of two categories like “square” or “not-square.” But researchers soon ran into barriers. First, computers at the time didn’t have enough processing power to handle large numbers of these kinds of decisions effectively. Second, the limited number of synthetic neurons also limited the complexity of the operations that a network could achieve.

In the case of Rosenblatt’s Perceptron, for instance, a single set of artificial neurons was able to discern squares from non-squares. But if you wanted to introduce the ability to perceive something else about the squares, such as whether they were red or not red, you’d need a whole extra set.
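To make “binary classifier” concrete, here is a minimal Python sketch of the perceptron learning rule. It is not Rosenblatt’s hardware: the three yes/no features (four corners, equal sides, red) and the six toy shapes are hypothetical stand-ins for real pixel input. It also shows the point about needing an extra set in miniature: answering “red or not red” takes a second, separately trained set of weights.

```python
from typing import List, Tuple

Example = Tuple[List[int], int]  # (features, label), label 1 = yes, 0 = no

def predict(weights: List[float], bias: float, x: List[int]) -> int:
    """Fire (return 1) only if the weighted sum of the inputs is above zero."""
    total = sum(w * xi for w, xi in zip(weights, x)) + bias
    return 1 if total > 0 else 0

def train_perceptron(data: List[Example], epochs: int = 20,
                     lr: float = 1.0) -> Tuple[List[float], float]:
    """Perceptron learning rule: adjust the weights only when a guess is wrong."""
    weights, bias = [0.0] * len(data[0][0]), 0.0
    for _ in range(epochs):
        for x, label in data:
            error = label - predict(weights, bias, x)  # -1, 0 or +1
            weights = [w + lr * error * xi for w, xi in zip(weights, x)]
            bias += lr * error
    return weights, bias

# Hypothetical yes/no features: [has four corners, has equal sides, is red]
SHAPES = [
    [1, 1, 1],  # red square
    [1, 1, 0],  # blue square
    [1, 0, 1],  # red rectangle
    [0, 0, 0],  # blue circle
    [0, 1, 1],  # red triangle with equal sides
    [1, 0, 0],  # blue rectangle
]
IS_SQUARE = [1, 1, 0, 0, 0, 0]
IS_RED = [1, 0, 1, 0, 1, 0]

# One set of weights per question: squareness and redness are learned separately.
square_w, square_b = train_perceptron(list(zip(SHAPES, IS_SQUARE)))
red_w, red_b = train_perceptron(list(zip(SHAPES, IS_RED)))

print([predict(square_w, square_b, s) for s in SHAPES])  # [1, 1, 0, 0, 0, 0]
print([predict(red_w, red_b, s) for s in SHAPES])        # [1, 0, 1, 0, 1, 0]
```

Both classifiers get every shape right on this tiny data set, but only because each question can be answered by drawing a single straight dividing line through the features; the perceptron rule is only guaranteed to converge when such a line exists.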

While the biology of the brain may be straightforward at the microscopic level, taken as a whole it is incredibly complex. And that macro-level complexity was too much for 1950s computers to handle. As a result, over the following decades neural networks fell from favour. It became the “winter of neural networks,” as Google’s Jason Freidenfelds put it to me.

Neuroscience Advances

But one person’s winter is another’s summer. From the 1960s onwards, our understanding of the human brain progressed by leaps and bounds.

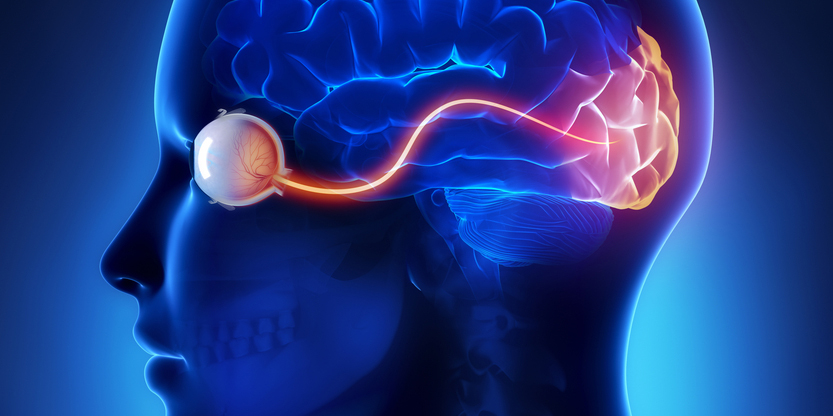

In those early days of neuroscience, much of the focus was on our visual systems. Professor Charles Cadieu from MIT explains:

It’s probably the best understood sensory modality, and probably the best understood part of the brain. We’ve known for decades now that neurons fire differently as you pass up the visual stream. In the retina, neurons are receptive to points of light and darkness; in the primary visual cortex there’s excitement of neurons by edge-like shapes; and in the higher areas of the visual cortex neurons respond to faces, hands… all sorts of complex objects, both natural and man-made. In fact, up there, the neurons don’t respond to light and dark patches or edge-like features at all.

Image by CLIPAREA | Custom media/Shutterstock

It turns out that different parts of the brain’s biological network are responsible for different aspects of what we know as visual recognition. And these parts are arranged hierarchically.

This is true for other aspects of cognition too. Parts of the brain that process speech, and perhaps even language itself, appear to work in the same way. Each level in a hierarchy of neurons provides its own insight, then passes it on to a more senior level, which makes a higher-level judgement. At each stage, the reasoning becomes more abstract, allowing a string of sounds to be recognised as a word that means something to us, or a cluster of bright and dark patterns on our retina to be rendered as “cat” in our brain.

These kinds of hierarchies were a crucial clue for researchers who still dared to think about artificial neural networks. “That’s really been a guiding light for neural networks,” explains Cadieu. “We just didn’t know how to make them behave that way.”

Biologically Inspired Software

In truth, the artificial networks in use today aren’t really modelled on the brain in the way that pioneers in the field may have expected. They are “only loosely inspired by the brain,” muses Cadieu, in the sense that they’re really software systems that build understanding layer by layer, rather than networks of nodes passing information back and forth between each other.

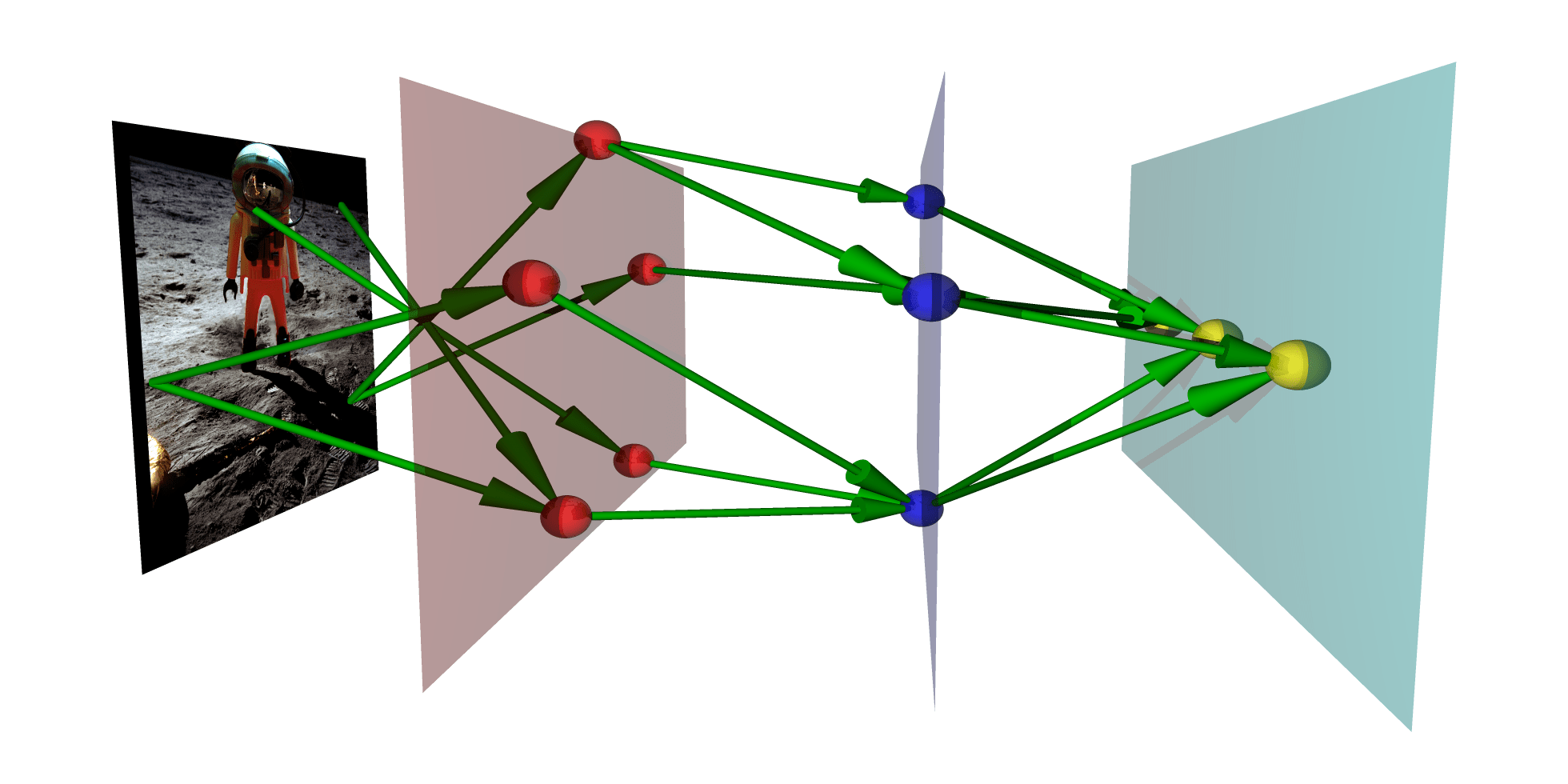

These software systems use one algorithm to extract information about an input, then pass the result to the next layer, which processes it with a different algorithm to gain some higher-level understanding, and so on. In other words, according to Freidenfelds, it makes more sense to think of artificial neural networks as cascaded mathematical equations that can spot distinctive features and patterns.
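A minimal sketch of the “cascaded equations” view, assuming the common dense-layer form: each layer computes a weighted sum for every output unit and passes it through a squashing function (here `tanh`). The weights are hypothetical and hand-picked; in a real network they would be learned, and there would be vastly more of them.

```python
import math
from typing import Callable, List

Vector = List[float]
Matrix = List[List[float]]
Layer = Callable[[Vector], Vector]

def dense_layer(weights: Matrix, biases: Vector) -> Layer:
    """Build one layer: a weighted sum per output unit, then tanh."""
    def layer(x: Vector) -> Vector:
        return [math.tanh(sum(w * xi for w, xi in zip(row, x)) + b)
                for row, b in zip(weights, biases)]
    return layer

def cascade(layers: List[Layer], x: Vector) -> Vector:
    """Feed the output of each layer into the next: f3(f2(f1(x)))."""
    for layer in layers:
        x = layer(x)
    return x

# Hypothetical weights: 3 inputs -> 2 hidden units -> 1 output.
network = [
    dense_layer([[0.5, -0.2, 0.1], [0.3, 0.8, -0.5]], [0.0, 0.1]),
    dense_layer([[1.0, -1.0]], [0.2]),
]

print(cascade(network, [1.0, 0.5, -1.0]))
```

Stacking more `dense_layer` calls deepens the cascade without changing `cascade` itself, which is the sense in which these networks are “layered”.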

In the case of image recognition, for instance, the first layer of a neural network may analyse pixel brightness, before passing it to a second to identify edges and lines formed by strips of similar pixels. The next layers may be able to identify shapes and textures, then further up the chain they may identify clustering of some of these abstract image features into actual physical features, such as eyes or wheels.
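The edge-finding step can be sketched as a small filter slid across a grid of brightness values. This assumes the convolutional approach widely used in image-recognition networks (the article doesn’t specify Google’s architecture), and the 2x2 filter here is written by hand, whereas a trained network learns its own filters.

```python
from typing import List

Grid = List[List[float]]

def convolve2d(image: Grid, kernel: Grid) -> Grid:
    """'Valid' 2D cross-correlation, which deep-learning libraries call convolution."""
    kh, kw = len(kernel), len(kernel[0])
    out_h, out_w = len(image) - kh + 1, len(image[0]) - kw + 1
    return [[sum(kernel[i][j] * image[r + i][c + j]
                 for i in range(kh) for j in range(kw))
             for c in range(out_w)]
            for r in range(out_h)]

# Hypothetical 4x4 brightness map (0 = dark, 1 = bright): dark left half, bright right half.
image = [[0, 0, 1, 1] for _ in range(4)]

# Hand-written vertical-edge detector: responds where brightness jumps left-to-right.
vertical_edge = [[-1, 1],
                 [-1, 1]]

print(convolve2d(image, vertical_edge))  # [[0, 2, 0], [0, 2, 0], [0, 2, 0]]
```

The response is non-zero only in the middle column, where dark pixels meet bright ones; flat regions give 0. Later layers would run further filters over maps like this one to find shapes and textures.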

Then, towards the very end, clusters of these higher-level features may be interpreted as actual objects: two eyes, a nose and a mouth may form a human face, say, while wheels, a seat and some handlebars resemble a bike. At the I/O developer conference in May, Google announced that the neural networks powering products like Google Photos now use 30 layers in total to make sense of images.

Image by fdecomite under Creative Commons licence.

Neural networks aren’t restricted to image recognition, though that is currently their most advanced use. In speech recognition, for example, the neural network chops the speech it’s hearing into short segments, then identifies vowel sounds. Subsequent layers work out how the different vowel sounds fit together to form words, how words fit together to form phrases, and finally infer meaning from what you just mumbled into your telephone.
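A sketch of the first step, chopping audio into short overlapping segments. The 25 ms frames taken every 10 ms are an assumption (a common choice in speech processing, not something the article specifies), and the “speech” is a hypothetical pure tone. Real systems then compute acoustic features for each frame before any network layer sees it; that part is omitted.

```python
import math
from typing import List

def frame_signal(samples: List[float], frame_len: int, hop: int) -> List[List[float]]:
    """Chop a signal into fixed-length frames that start every `hop` samples."""
    return [samples[start:start + frame_len]
            for start in range(0, len(samples) - frame_len + 1, hop)]

# Hypothetical input: one second of a 440 Hz tone sampled at 16 kHz.
SAMPLE_RATE = 16_000
signal = [math.sin(2 * math.pi * 440 * t / SAMPLE_RATE) for t in range(SAMPLE_RATE)]

# 25 ms frames (400 samples) every 10 ms (160 samples); neighbouring frames overlap.
frames = frame_signal(signal, frame_len=400, hop=160)
print(len(frames), len(frames[0]))  # 98 400
```

Overlapping the frames means a short sound that straddles a boundary still appears whole in at least one frame.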

As you can probably tell, this is a big step up from the single-layer Perceptron. In fact, two things held back the successful implementation of neural networks all this time. The first was computational power, which we now have. The second? Enough data to teach them how to work properly.

Back Propagation and Deep Learning

Neural networks can’t learn until you throw enough data at them. They need large quantities of information to consider, pass through their layers, and attempt to classify. Then, they can compare their classifications to the real answers, and either pat themselves on the back or try a little harder.

In the case of image analysis, that means supplying your neural network with a slew of tagged photos known as a training set. Google has used YouTube to supply this training set in the past. In the case of speech recognition, the training set might be a series of audio clips along with a description of what’s being said. Given this huge set of inputs, the neural network will attempt to classify each item, piecing together what information it can from its various layers to make guesses about what it’s seeing or hearing. During training, the machine’s answer is compared to the human-created description of what should have been observed. If it’s right, props to the network.
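At its simplest, a training set is a list of (input, label) pairs, and checking the network means comparing its guesses with those labels. This sketch uses made-up file names in place of pixel data and a hypothetical stand-in classifier that always answers “cat”.

```python
from typing import Callable, List, Tuple

# Hypothetical training set: (photo, human-written tag). File names stand in for pixels.
training_set: List[Tuple[str, str]] = [
    ("photo1.jpg", "cat"),
    ("photo2.jpg", "dog"),
    ("photo3.jpg", "cat"),
    ("photo4.jpg", "house"),
]

def accuracy(classify: Callable[[str], str], examples: List[Tuple[str, str]]) -> float:
    """Fraction of examples where the network's guess matches the human label."""
    correct = sum(1 for item, label in examples if classify(item) == label)
    return correct / len(examples)

def always_cat(item: str) -> str:
    """Stand-in for an untrained network that answers "cat" to everything."""
    return "cat"

print(accuracy(always_cat, training_set))  # 0.5
```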

But what if it’s wrong?

“If what it just analysed was a face and it said house, then it uses a technique called back-propagation to adjust itself,” explains Cadieu. “It steps back through each of the previous layers, and each time it tweaks the mathematical expression for that layer, just enough so that it would get the answer right next time.”
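Under the hood, back-propagation is the chain rule from calculus applied one layer at a time, from the output backwards. This minimal sketch assumes a two-layer network with a single unit per layer, a `tanh` hidden layer and a squared-error loss; the parameter names and values are hypothetical. It checks the back-propagated gradients against a brute-force numerical estimate, then takes one small step that lowers the error.

```python
import math
from typing import Dict, Tuple

Params = Dict[str, float]

def forward(p: Params, x: float) -> Tuple[float, float]:
    """Forward pass: a tanh hidden unit, then a linear output unit."""
    h = math.tanh(p["w1"] * x + p["b1"])
    y = p["w2"] * h + p["b2"]
    return h, y

def loss(p: Params, x: float, target: float) -> float:
    """Squared error between the network's answer and the right answer."""
    _, y = forward(p, x)
    return 0.5 * (y - target) ** 2

def backprop(p: Params, x: float, target: float) -> Params:
    """Step back through the layers, applying the chain rule at each one."""
    h, y = forward(p, x)
    dy = y - target                   # dLoss/dy
    grads = {"w2": dy * h, "b2": dy}  # output layer
    dz1 = dy * p["w2"] * (1 - h * h)  # back through w2, then through tanh
    grads["w1"] = dz1 * x             # hidden layer
    grads["b1"] = dz1
    return grads

# Hypothetical starting parameters and a single training example.
params = {"w1": 0.5, "b1": -0.1, "w2": 0.8, "b2": 0.0}
x, target = 1.5, 1.0
grads = backprop(params, x, target)

# Sanity check against a brute-force (central finite-difference) estimate.
eps = 1e-6
for name in params:
    up = dict(params, **{name: params[name] + eps})
    down = dict(params, **{name: params[name] - eps})
    numeric = (loss(up, x, target) - loss(down, x, target)) / (2 * eps)
    print(f"{name}: backprop {grads[name]:+.6f}, numeric {numeric:+.6f}")

# One gradient-descent step: nudge every parameter against its gradient.
lr = 0.1
updated = {name: params[name] - lr * grads[name] for name in params}
print(f"loss {loss(params, x, target):.4f} -> {loss(updated, x, target):.4f}")
```

If the two gradient columns disagree, the back-propagation code has a bug; comparing against finite differences is a standard way to catch one. A single step reduces the error rather than guaranteeing a right answer next time, which is why training repeats it many times.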

At first the network makes mistakes all the time, but gradually its performance improves as its layers become better and better at discerning exactly what they see. Training a many-layered network in this iterative way, passing samples through it and using back-propagation to correct its errors, is known as deep learning, and it’s what gives these networks their apparently human-like abilities.
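The whole loop can be sketched end to end on a hypothetical toy task (deciding whether a number is positive) with a two-layer network whose starting weights get every example wrong. Real deep networks have many layers and millions of parameters; this only shows the shape of the loop: guess, compare with the label, back-propagate, repeat. The cross-entropy loss and the learning rate of 0.5 are assumptions chosen for the example.

```python
import math

def sigmoid(z: float) -> float:
    """Squash any number into the range (0, 1)."""
    return 1 / (1 + math.exp(-z))

# Hypothetical training set for a toy task: is the number positive? (input, label)
data = [(-2.0, 0), (-1.0, 0), (1.0, 1), (2.0, 1)]

# Tiny two-layer network (tanh hidden unit -> sigmoid output), deliberately
# initialised so that its first guesses are all wrong.
w1, b1, w2, b2 = 0.3, 0.0, -0.1, 0.0
lr = 0.5

history = []  # (epoch, total loss, mistakes) for each pass over the data
for epoch in range(200):
    total_loss, mistakes = 0.0, 0
    for x, t in data:
        # Forward pass: make a guess.
        h = math.tanh(w1 * x + b1)
        p = sigmoid(w2 * h + b2)
        total_loss += -(t * math.log(p) + (1 - t) * math.log(1 - p))
        mistakes += int((p > 0.5) != (t == 1))
        # Backward pass: back-propagate the cross-entropy error and adjust.
        dz2 = p - t
        dz1 = dz2 * w2 * (1 - h * h)
        w2 -= lr * dz2 * h
        b2 -= lr * dz2
        w1 -= lr * dz1 * x
        b1 -= lr * dz1
    history.append((epoch, total_loss, mistakes))

for epoch, total_loss, mistakes in history[::50]:
    print(f"epoch {epoch:3d}: loss {total_loss:.3f}, mistakes {mistakes}")
```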

In the past, it was tough to amass enough information to feed something as hungry for data as a neural network. But these days there’s so much data floating around online that it’s relatively straightforward. There are images posted all over the internet with captions that say exactly (or at least exactly enough) what the computer should see, and dialogue from movies with corresponding scripts to help speech recognition systems learn how humans sound. Armed with all that data, neural networks can grow ever more intelligent.

Straight-A Intelligence?

And it’s working. When Google released its first neural network-powered voice recognition system in 2011, the one baked into the likes of Google Now and Chrome, it had an error rate of around 25%. That means it got things wrong roughly one time in four.

Now, after three years of tweaks and learning, that’s fallen to just 8%. Google’s latest showcase for the power of neural networks is Photos, which is almost uncanny in its intelligence.

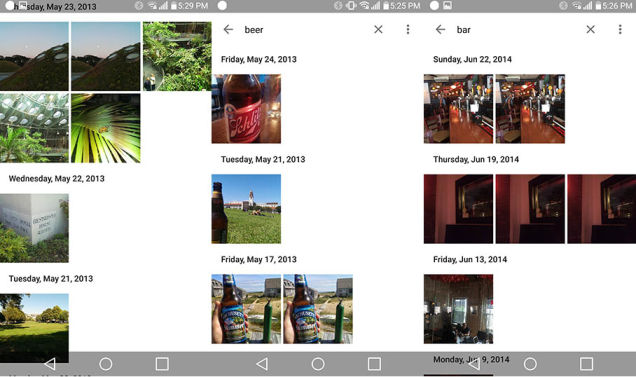

When Gizmodo’s Mario Aguilar first tried it, he explained:

It’s crazy how well this works. Creepy even. We were amazed first, then suspicious. We were only working with a small smattering of the photos I currently have on this phone… [but] despite the limited pool, Google Photos was actually able to make quite a bit of sense out of what I shot. It correctly identified one of my best friends who I have many photos of as a unique person… But where Photos really wrecks your brain is when you start searching for random things in your collection. Beers? It finds photos of beers. Bars? It finds photos of bars.

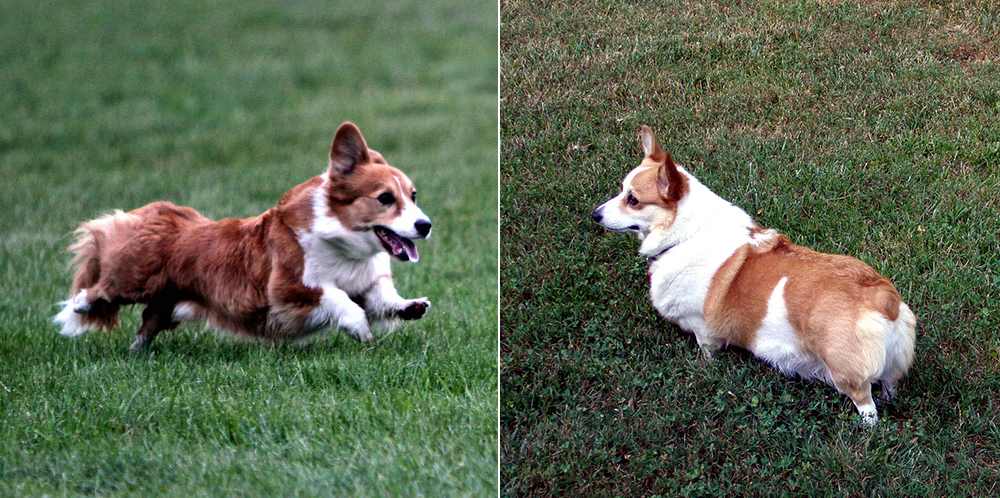

It’s not just Google, though. Last year Facebook unveiled its DeepFace algorithm, which can recognise specific human faces with 97% accuracy. That’s about as good as a human. Wolfram Alpha has created a software system that can identify objects and even lets you roll it into your own software. And Microsoft’s Cortana digital personal assistant is so sharp that it can recognise the difference between a picture of a Pembroke Welsh Corgi and a Cardigan Welsh Corgi. Can you recognise the difference between a Pembroke Welsh Corgi and a Cardigan Welsh Corgi? I sure as hell can’t.

Image by Virginia Hill and Tundra Ice. The Cardigan Welsh Corgi is on the left.

And it’s not all image recognition. Skype now uses neural networks to translate from one language to another on the fly; Chinese search firm Baidu uses them to target ads on its search engine; and just recently Google unveiled a chatbot system that had been trained using them.

Neural networks are finally giving computers the ability to understand the human world, and make smart inferences about it. Hell, they drip with so much knowledge and experience these days that they can dream and create psychedelic art in the process.

Imperfect Science and Gorillagate

But like anything “smart,” neural networks can and do go wrong. Just this month, Google’s Photos app mistakenly tagged two black people in a photograph as “gorillas.” Flickr’s smart new image recognition tool, powered by Yahoo’s neural network, also tagged a black man as an “ape.” Clearly, neither is perfect, and both may reflect the (racist) ways that humans have tagged images in public data. Google’s quick fix, it seems, was to simply remove the “gorilla” tag from Photos altogether; in the longer term, it will no doubt train its networks harder.

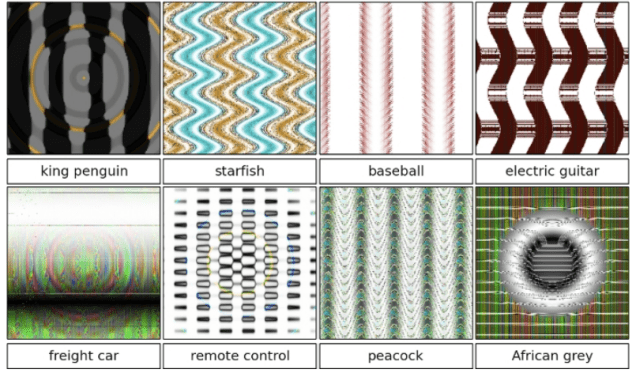

It’s easy enough to point a finger at the technology for just not being up to scratch. Certainly, it’s possible to fool a neural network. The images below, for instance, are enough to trick neural networks into thinking that they see penguins, baseballs and remote controls, but to humans they just look like abstract patterns, albeit ones inspired by those real objects.

“The algorithm’s confusion is due to differences in how it sees the world compared with humans,” said Jeff Clune from the University of Wyoming in Laramie, who discovered this quirk, to New Scientist. “While [humans] identify a cheetah by looking for the whole package — the right body shape, patterning and so on — a [neural network] is only interested in the parts of an object that most distinguish it from others.”
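A toy version of this quirk, under heavy assumptions: the “penguin detector” is a hypothetical linear scorer over 16 pixels rather than a deep network, and the search is simple random hill-climbing, a much cruder stand-in for the techniques used in the research Clune describes. Starting from noise, the search keeps any tweak that doesn’t lower the detector’s confidence, and ends with a stripe pattern that the detector is nearly sure is a penguin.

```python
import math
import random
from typing import List

N_PIXELS = 16
# Hypothetical "penguin detector": a fixed linear scorer over 16 pixel
# brightnesses (0 to 1). It likes bright even pixels and dark odd ones.
WEIGHTS = [1.0 if i % 2 == 0 else -1.0 for i in range(N_PIXELS)]
BIAS = -2.0

def penguin_confidence(pixels: List[float]) -> float:
    """Logistic output: the detector's confidence that it sees a penguin."""
    z = sum(w * p for w, p in zip(WEIGHTS, pixels)) + BIAS
    return 1 / (1 + math.exp(-z))

rng = random.Random(0)  # fixed seed so the run is repeatable
image = [rng.random() for _ in range(N_PIXELS)]  # start from pure noise
start = penguin_confidence(image)

# Random hill-climbing: re-roll one pixel at a time, keep changes that don't hurt.
for _ in range(2000):
    candidate = list(image)
    candidate[rng.randrange(N_PIXELS)] = rng.random()
    if penguin_confidence(candidate) >= penguin_confidence(image):
        image = candidate

print(f"confidence: {start:.2f} -> {penguin_confidence(image):.3f}")
print([round(p, 1) for p in image])  # alternating bright/dark stripes
```

The detector only ever “looked” for the stripe-like evidence encoded in its weights, so a search that maximises its score finds that evidence and nothing else, which is the same mismatch Clune describes.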

It doesn’t help, of course, that neural networks don’t have the in-built sense of decorum and caution that humans have. Like a child blurting out whatever springs into its mind, neural networks eagerly provide their best guess in the hope that it’s correct — even if they’re not 100% certain. In those cases, a human might demur; perhaps a neural network should learn to do the same.
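One simple way to let a network “demur” is to turn its raw output scores into probabilities with a softmax and abstain when the top probability falls below a threshold. The 0.9 threshold and the scores below are arbitrary assumptions, and a network’s own confidence is an imperfect guide: fooled networks can be highly confident and still wrong.

```python
import math
from typing import Dict, Optional

def softmax(scores: Dict[str, float]) -> Dict[str, float]:
    """Turn raw output scores into probabilities that sum to 1."""
    biggest = max(scores.values())  # subtract the max for numerical stability
    exps = {label: math.exp(s - biggest) for label, s in scores.items()}
    total = sum(exps.values())
    return {label: e / total for label, e in exps.items()}

def cautious_label(scores: Dict[str, float], threshold: float = 0.9) -> Optional[str]:
    """Return the top label only if it is confident enough; otherwise abstain."""
    probs = softmax(scores)
    best = max(probs, key=probs.get)
    return best if probs[best] >= threshold else None

# Hypothetical raw scores from an image classifier.
print(cautious_label({"cat": 4.0, "dog": 1.0, "house": 0.5}))  # cat
print(cautious_label({"cat": 1.2, "dog": 1.0, "house": 0.9}))  # None (too close to call)
```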

Gorillagate may have just as much to do with human bias as with dubious technology. Speaking to the Wall Street Journal, artificial intelligence expert Vivienne Ming pointed out that the training sets used by Google are perhaps to blame. The reams of photographs on the internet display an overwhelmingly white world, so it’s plausible that the neural networks simply don’t have the experience they need to identify black people accurately.

Power in Numbers

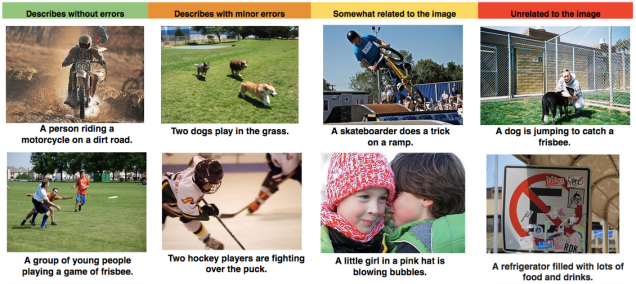

Besides feeding neural networks accurate data, researchers are also improving the software by combining networks. A recent research collaboration between Google and Stanford University has begun producing software that can describe whole scenes instead of just identifying a single object in them, like a cat.

This frankly remarkable feat is achieved by combining an image-recognition neural network with a natural-language network. Give a natural language network a full sentence such as “We sat down to a delightful lunch of hoagies and BLTs,” and it abstracts it into high-level concepts like “eating” and “sandwiches.” From there, the output can be fed into another natural language processing network that can make sense of these concepts and translate them into a sentence that says much the same thing, using different wording.

This is how the neural networks Skype uses for on-the-fly translation work. What the researchers from Stanford and Google have done, though, is replace the first neural network with an image-recognition network and feed its output into an English natural language network. The first produces high-level concepts of what’s depicted in the image, say a man, a sandwich and eating. The second then attempts to convert those concepts into a sentence describing what’s shown, like “A man is eating a sandwich.” As you can see in the chart below, the results aren’t perfect, but they’re certainly pretty impressive.

So if neural networks are developing so rapidly, is the sky the limit?

“You can certainly expect to see major improvements in image and speech recognition in the coming years,” says Cadieu, pointing out that these modern neural networks have only really been around for a couple of years anyway.

As for language processing, it’s less clear that neural networks will be able to deal with the problems so well. While the brain’s image and speech recognition clearly work in the layered way that modern neural networks do, there’s less neuroscientific evidence to suggest that language is processed in the same way, according to Cadieu. That may mean that artificial language processing will soon run into conceptual barriers.

Toward a Working Class AI

One thing is clear, though: These kinds of artificial intelligences are already lending a huge helping hand to humans. In the past, you had to sift through your photographs to compile an album from your latest vacation or find that pic of your buddy Bob drinking a beer. But today, neural network software can do that for you. Google Photos prepares albums automatically, and its smart search function will find images with alarming accuracy.

And this kind of consumer-focused software is a mere gimmick compared to the feats that neural networks could one day perform for us.

It’s not hard to imagine image-processing algorithms gaining enough intelligence to vet medical images for tumours, with doctors merely checking their results. Voice recognition systems could become so advanced that telemarketing campaigns are run by software alone. Language processing networks could allow news stories to be written by machine.

In fact, all these things are already happening to some extent. The changes are profound enough that researchers at the University of Oxford estimate that up to half of jobs, including the one held by yours truly, could be lost to automation by AI systems like these in the coming years.

But shifts in economies and employment have been driven by technology many times before, from the printing press and motor car, to computers and the internet. Though there will be social upheaval, there will also be benefits. Ultimately, neural networks will give everyone access to intelligence that currently lies in the hands of a few. And that will lead to smarter systems, better services, and more time to solve the human problems that computers will never be able to fix.

Picture: pogonici/Shutterstock