In Gizmodo’s third release of documents from the Facebook Papers, we learned how decisions at Facebook over whether to improve a product can depend on how many conservatives in the press are likely to get pissed. We also learned that when Facebook claimed it wasn’t doing something, it was doing exactly that. Here’s a broader look at just a few of the things we’ve learned about Facebook — Meta if you’re nasty — over the last couple weeks thanks to whistleblower Frances Haugen.

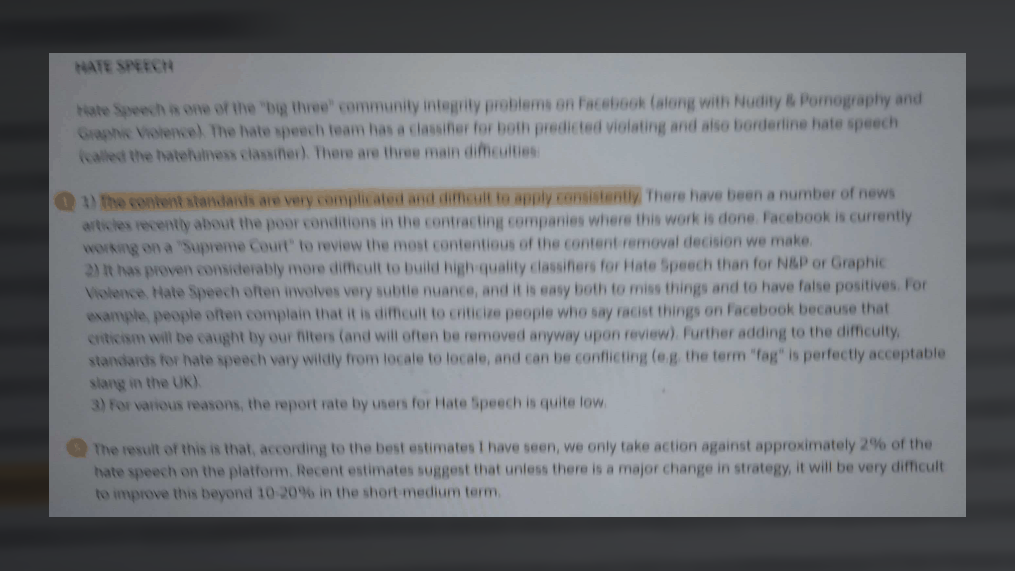

Facebook Struggles to Stop Hate Speech and Will Continue to Do so, Employees Admitted

Some of these pages are hard to read. (Like the rest of the leak, they’re photographs of Frances Haugen’s computer screen.) The above screenshot is from an internal employee post dated Sept. 2019. The author cites estimates from Facebook itself asserting the company only takes “action against approximately 2% of the hate speech on the platform,” adding that without “a major change in strategy,” that figure is unlikely to increase beyond 10-20% “in the short-medium term.” The author writes, “The content standards are very complicated and difficult to apply consistently… Hate Speech often involves very subtle nuance, and it is easy both to miss things and to have false positives.”

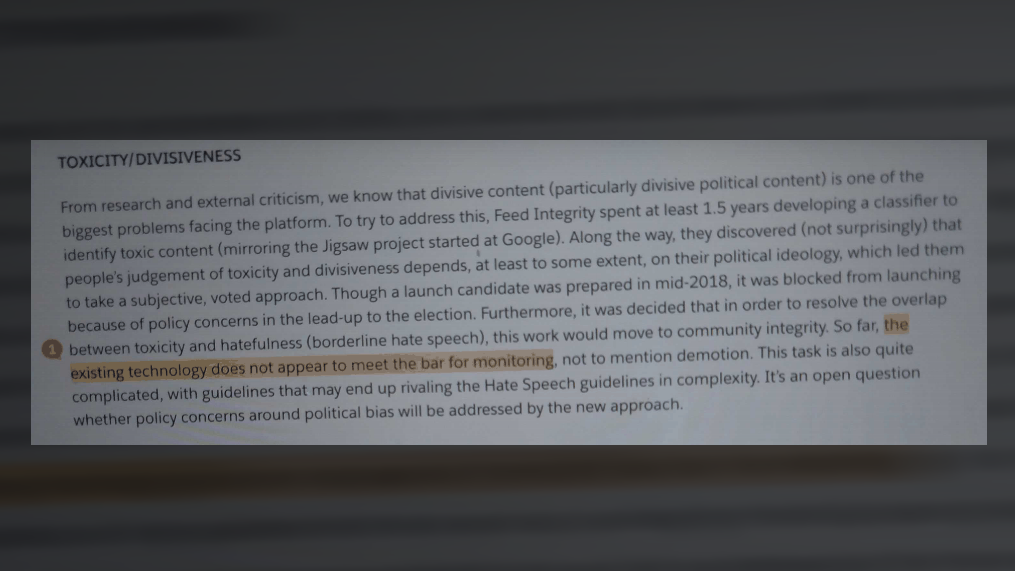

Toxicity Plagues Facebook, and Employees Say Its Monitoring Programs Can’t Keep up

Politically divisive content is Facebook’s biggest problem, the author of the post says, but the company had few ways to handle it, at least as of 2019. Years were invested in trying to build a system that could detect toxic partisan content so its effects could be reduced, but there were multiple delays. “So far, the existing technology does not appear to meet the bar for monitoring, not to mention demotion,” the author says, referring to the process of moving posts lower in the News Feed.

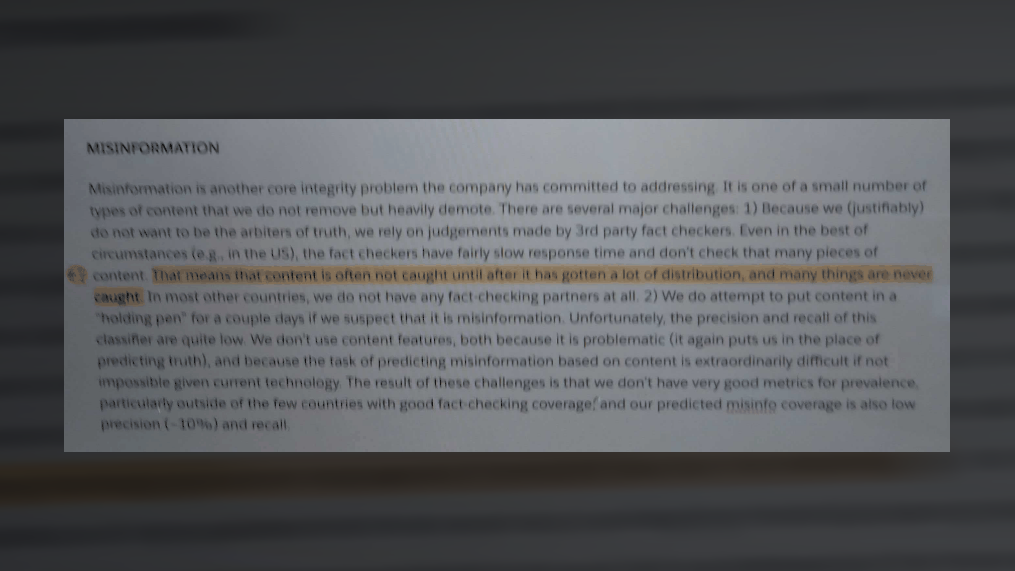

Employees Knew Facebook Often Caught Misinformation Too Late

Facebook’s system for dealing with misinformation faces many hindrances inherent to its architecture. “Even in the best of circumstances (e.g., in the US), the fact checkers have fairly slow response time and don’t check that many pieces of content,” one employee wrote in a presentation. “That means that content is often not caught until after it has gotten a lot of distribution, and many things are never caught.” The employee goes on to say that in “most” countries, the platform has no fact checkers at all.

Demoting Low-Quality Content in the News Feed Would Make Conservatives Angry, Employees Said

After surveying users about what kinds of news they found most informative, Facebook’s own News Team proposed ignoring the results of the poll by continuing to boost publishers who posted clickbait, fake news, and things generally no one wanted to read, according to an internal presentation. Users had deemed that type of material to be of low quality. Why did Facebook’s own employees make that recommendation? Because they believed any attempt to improve the quality of information people saw would result in allegations of censorship by Republicans, according to the document. One employee wrote, “The News team proposed an update to the High Quality News ranking adjustment that increased and improved the effectiveness of the informative component of the score, which is based on asking users how informative they find news content. The launch was blocked primarily based on policy concerns about the effect it had on certain news publishers considered Low Quality by the model, stemming from external criticism from some quarters around perceived anti-conservative bias.”

Facebook Ignored Its Own Rules to Avoid Conservative Criticism, a Document Says

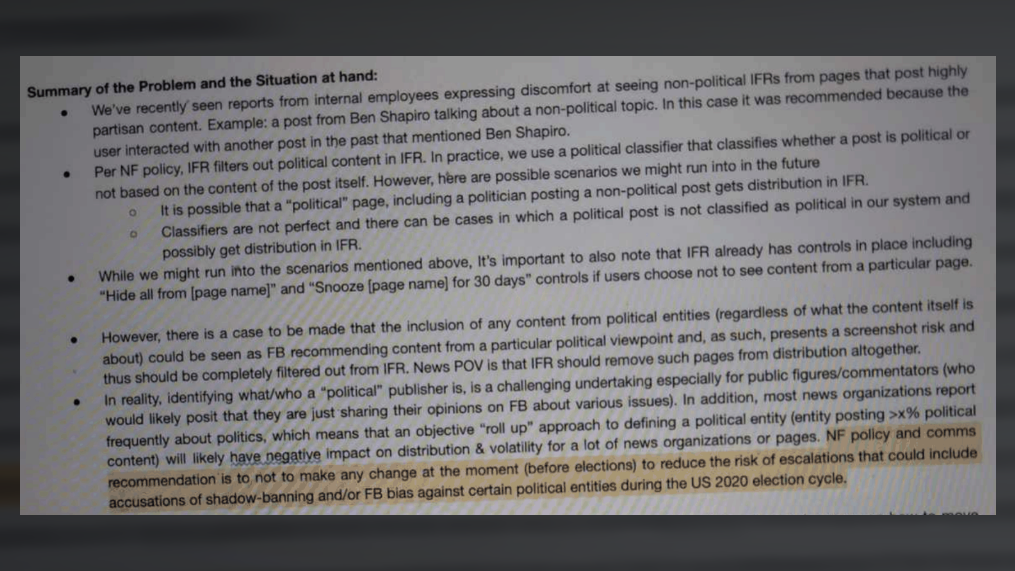

Facebook decided to decrease its recommendations of politically partisan pages ahead of the 2020 elections but left open a loophole that right-wing pundits like Ben Shapiro kept managing to slip through, one internal presentation says. Though many Facebook employees were “expressing discomfort” over this glaring exception, the company’s policy and public relations team recommended against fixing it, fearful, once again, that they’d be bombarded by censorship allegations, an employee wrote.