The use of biometrics (measurements of physiological characteristics used to identify someone) has made interacting with our mobile devices a lot easier by trading passcodes for face scans and fingerprint readings. But are there other ways our physical interactions with devices can make them easier to use? Researchers in Japan think so, and their approach involves staring deep into a user’s eyes through a selfie camera.

Tomorrow marks the start of the 2022 Conference on Human Factors in Computing Systems (CHI, for short) in New Orleans. The conference brings together researchers studying new ways for humans to interact with technology. That includes everything from virtual reality controllers that can simulate the feel of a virtual animal’s fur, to breakthroughs in simulated VR kissing, to touchscreen upgrades that rely on bumpy screen protectors.

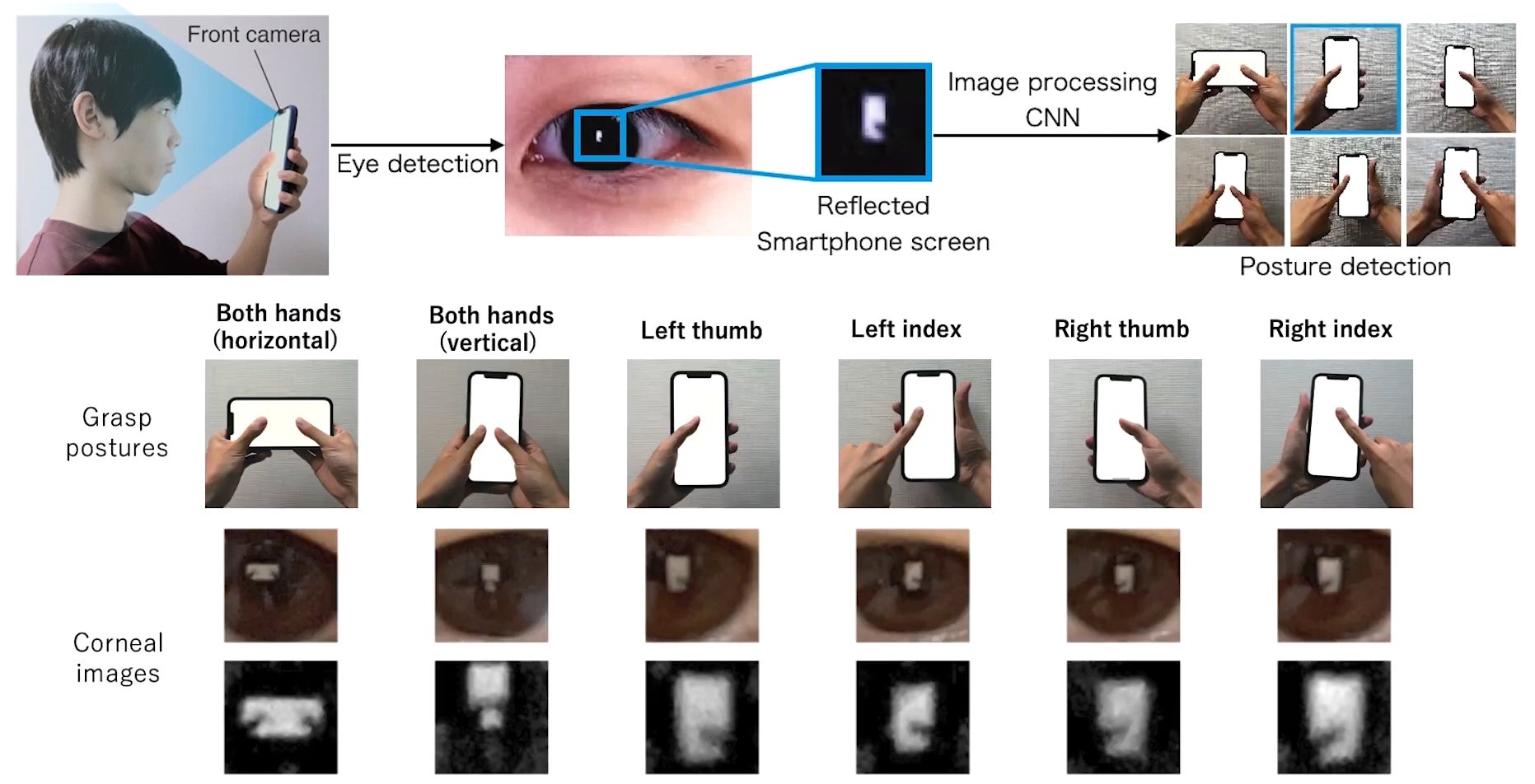

As part of the conference, a group of researchers from Keio University, Yahoo Japan, and the Tokyo University of Technology is presenting a novel way to detect how a user is holding a mobile device such as a smartphone, and then automatically adapt the user interface to make it easier to use. For now, the research focuses on six grasp postures: holding the device with both hands, with just the left hand, or with just the right hand, in both portrait and landscape orientation.

As smartphones have grown in size over the years, using one single-handedly has gotten harder and harder. A user interface that adapts itself to how the phone is held, for example by dynamically moving buttons to the left or right edge of the screen, or by shrinking the keyboard and aligning it to one side, can make one-handed use a lot easier. The hard part is getting a smartphone to know automatically how it’s being held, and that’s what this team of researchers has figured out, without requiring any additional hardware.
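To make the adaptation idea concrete, here is a minimal, hypothetical sketch of the last step: once a grasp posture has been detected, pick where buttons and the keyboard should go. The `Grasp` enum, the `layout_for` function and the layout keys are illustrative assumptions, not the researchers’ code or any real phone API; only the six postures and the left/right repositioning come from the description above.

```python
from enum import Enum


class Grasp(Enum):
    """The six grasp postures studied (names are hypothetical)."""
    PORTRAIT_BOTH = "portrait_both"
    PORTRAIT_LEFT = "portrait_left"
    PORTRAIT_RIGHT = "portrait_right"
    LANDSCAPE_BOTH = "landscape_both"
    LANDSCAPE_LEFT = "landscape_left"
    LANDSCAPE_RIGHT = "landscape_right"


def layout_for(grasp: Grasp) -> dict:
    """Return a hypothetical layout that shifts controls toward the holding thumb."""
    if grasp.value.endswith("_left"):
        side = "left"
    elif grasp.value.endswith("_right"):
        side = "right"
    else:
        # Two-handed grips keep the default, centered layout.
        side = "center"
    return {
        "button_edge": side,          # edge the buttons are moved to
        "keyboard_align": side,       # side the keyboard is aligned to
        "keyboard_compact": side != "center",  # shrink only for one-handed use
    }
```

For example, `layout_for(Grasp.PORTRAIT_LEFT)` moves buttons and a compact keyboard to the left edge, while either two-handed posture leaves the layout unchanged.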

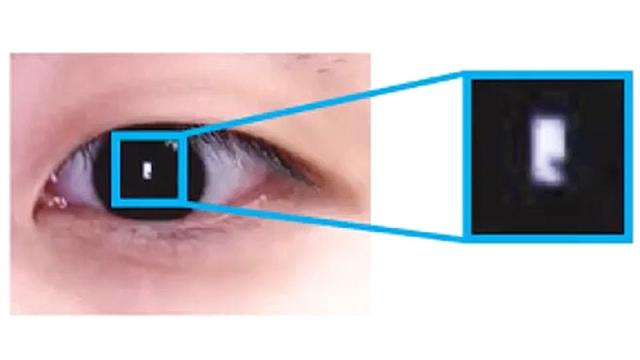

Given a bright enough screen and a high enough camera resolution, a smartphone’s selfie camera can watch a user’s face as they stare at the display and use a CSI-style super zoom to focus on the screen’s reflection in their eyes. It’s a technique that’s been used in visual effects to calculate and recreate the lighting around actors in footage that’s being digitally augmented. In this case, though, the reflection (as grainy as it is) reveals how the device is being held: the software analyses the shape of the reflected screen and looks for the shadows and dark spots created where the user’s thumbs cover it.

There is some training required of the end user, which mostly involves snapping 12 photos of themselves in each grasping posture so the software has enough samples to learn from, but the researchers found they could correctly identify how a device was being held about 84% of the time. That accuracy could improve as the front-facing cameras on mobile devices get higher resolution and more capable, but better cameras also raise some red flags about just how much information can be captured from the reflections in a user’s eyes. Could nefarious apps use the selfie camera to capture a password being typed on an on-screen keyboard, or to monitor someone’s browsing habits? Maybe it’s time we all switched back to smaller, single-hand-friendly phones and started covering our selfie cameras with sticky notes too.