Apple is now bringing its new child safety features to Australia: essentially, a handful of ‘blocks’ that act as a barrier to kids seeing images that contain nudity.

Apple rolled out the feature in the U.S. last year and, after working with local organisations such as Kids Helpline, it’s ready to let it loose on Australia.

If you’re thinking this is the feature we heard about last year that would scan a user’s phone for child exploitation material, you’re not alone; I thought that too. But that tech (CSAM detection) is completely different to what is being announced today, which is more of an on-device notification system for kids.

It isn’t switched on by default, but with a new software update rolling out over the next few weeks, parents (and kids) will be able to turn the feature on.

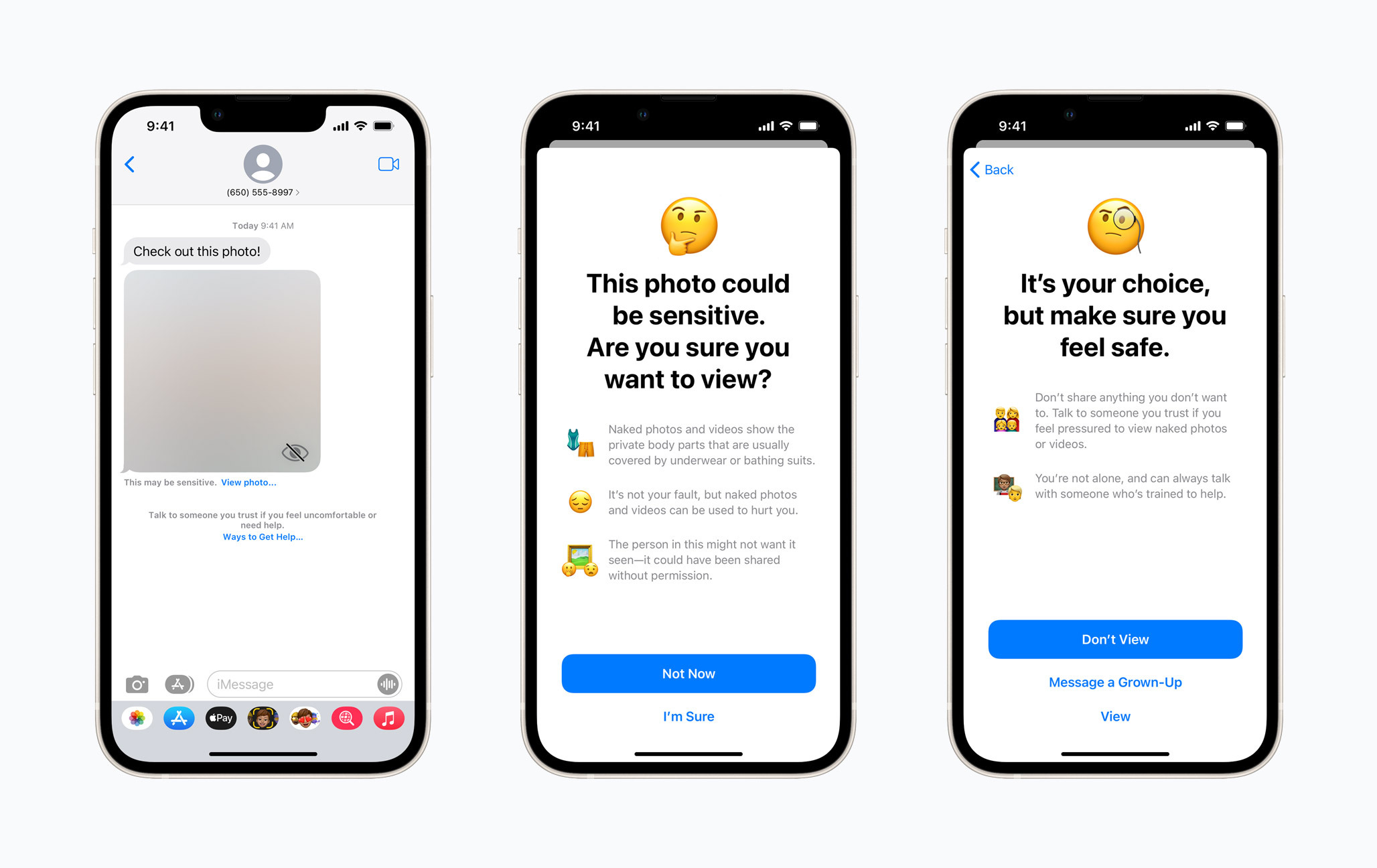

As you can see in the screenshots, the feature jumps in when an image is received. The image in question is blurred, with a quick warning that it may be sensitive, giving kids the option to view the photo anyway, to forward it on to an adult they trust, or to reach out for help, such as through Kids Helpline.

What’s great to see is that the warning (which pops up if the child selects ‘View photo’) mentions consent rather than just flagging nudity. The same warning will pop up when a child is about to send a photo to another person, too.

The Apple safety feature, however, relies on the child to do the right thing. There’s no stopping them from clicking through or from sharing the pic. Likewise, there’s no requirement for them to alert an adult, and Apple says there’s no log or ‘audit trail’ of the child’s activity with such content. Apple also says the intervention happens on the kid’s device, not in the cloud. That’s great for security, but not so great for parents who want to confirm their child is not viewing child exploitation material.

Also released as part of these safety features is the ability to ask Siri how to report child exploitation material, with the virtual assistant directing users to validated resources. Similarly, Siri, Spotlight and Safari Search have been updated to intervene when users search for queries related to child exploitation: Apple will warn the user that interest in such a topic is harmful and problematic, and will point them to resources for getting help.