Like it or not, Meta’s doubling down on its efforts to make 3D avatar playpens synonymous with future computing. In an event on Wednesday, the company showcased a variety of new AI tools, including a voice assistant, a universal language translator, and a programming tool capable of generating digital objects through voice commands, all aimed at one day breathing life into an actual, usable metaverse.

The tools were showcased during Meta’s Inside the Lab: Building for the Metaverse with AI live-stream event. During the event, Meta revealed its development of an advanced digital voice assistant under the banner “Project CAIRaoke.” Meta CEO Mark Zuckerberg said the company hopes the tool will one day be a crucial vehicle for users to navigate the metaverse.

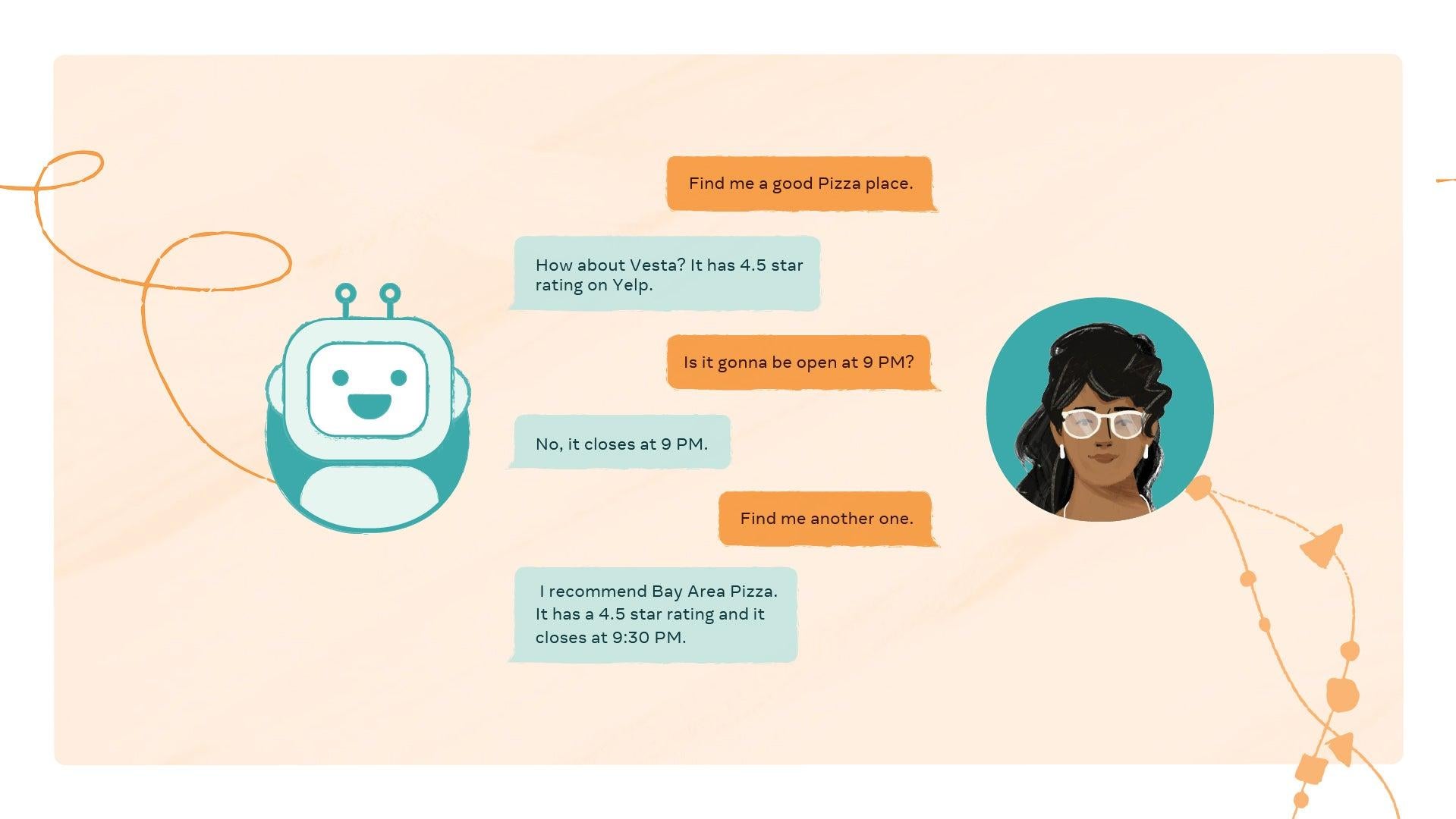

Though the work is still early, Meta believes models created under Project CAIRaoke will be able to remember commands given earlier in a conversation or change topics altogether, fluid abilities so far absent from most current voice assistants. Imagine a Siri-like assistant, but one capable of organically engaging in multiple follow-up conversations. Meta AI Senior Research Manager Alborz Geramifard referred to these as “supercharged assistants.”

Ah, finally, a voice assistant you can argue with in perpetuity.

Meta went on to say an early version of the assistant will begin rolling out across Portal home devices as a way to set reminders. If that doesn’t exactly scream high-tech innovation, don’t worry: Meta says you might sooner or later be able to use the AI for personalised shopping!

Further down the road, the company imagines merging its AI assistant with AR and VR devices. In one example, a video showed a man wearing AR glasses using the assistant to guide him through a soup recipe. Text appeared overlaid on the world as his assistant scolded him not to overdo it on the salt.

“By combining augmented and virtual reality devices with our Project CAIRaoke model, we hope the future of conversational AI will be more personal and seamless,” a narrator said during the presentation video.

Meta also revealed details about a new voice-powered AI generation tool called Builder Bot, which it sees as crucial for navigating virtual worlds. During the demonstration, Zuckerberg started with an empty, low-res virtual world and built it out using only voice commands. His avatar first changed the entire 3D environment into a park, then into a beach.

The demonstration then showed the AI generating a picnic table, boom box, drinks, and other small objects based on voice commands. As The Verge notes, it’s unclear whether Builder Bot pulls from a library of already defined objects to complete these tasks or whether the AI itself is generating them. (The latter would obviously be much more impressive.) The goal of all this, according to Zuckerberg, is to “create nuanced worlds to explore, and share experiences with others with just your voice.”

Finally, the company showcased its efforts to build an AI-based universal speech translation system. Meta outlined two primary approaches it’s taking to AI-enabled translation. The first, dubbed No Language Left Behind, focuses on less widely used, so-called “low-resource languages,” which typically offer AI systems less training data to learn from than more widely used languages. Meta estimates around 20% of the world’s population currently speaks these languages, leaving those speakers largely excluded from the online world and, presumably, from Meta. The company hopes this new AI tool will enable high-quality translations for these otherwise underserved languages.

The second project, called Universal Speech Translator, aims to translate speech from one language to another in real time. In a demonstration video, the company imagined one day combining this AI translator with AR glasses or other wearables to let users converse with people who speak different languages. And of course, here too there’s a metaverse angle. The company claims that “in the not too distant future,” these translation tools could be integrated into virtual worlds to let users interact with anyone, “just as they would with someone next door.”

So, that’s what Meta has cooking. On the positive side, Wednesday’s announcements offered a glimpse of some far more interesting, potentially useful tools than were on display during its first sprawling, unfocused metaverse presentation. At the same time, most of these proposals seem pretty far from becoming reality. It’s also unclear whether any of these tools will actually act as a catalyst for more interest in the metaverse as a concept among everyday people.

At the time of writing, Meta’s stock price is down another 1.8 per cent for the day.