A Reddit user posted about a wreck in their 2020 Tesla Model X, a wreck that occurred while the car was in Autopilot. What’s interesting about this wreck is that it happened at a location where, allegedly, five other Teslas in Autopilot have also wrecked, in very similar ways. What’s going on with this innocuous-looking split section of road?

Full disclosure: so far, I have been able to confirm only two other Tesla wrecks at this location through an outside source, via a call to the garage services/towing company listed for Yosemite. The Model X poster, who goes by BBFLG on Reddit, was told of a total of five by a combination of locals and Rangers:

Rangers told me 3 Tesla accidents in the past here, then my accident… a local stopped to tell me their Tesla always has issues here and also say there’ve been multiple accidents here… then just today my tow truck driver sent me pictures of another accident with a Model S last Friday – that’s 5 that I know of.

Whether it’s three (the Reddit poster’s wreck plus the two others confirmed by the towing company) or the full five mentioned in the post, that’s still a surprising number of wrecks at one very specific point. The garage believed these were Autopilot-related but didn’t have hard confirmation. It would make sense, though, since the same driver-assistance system would be the common element in all of these incidents.

Here’s how the Model X driver describes what happened:

Just sharing my story of my 2020 Model X LR+ accident on July 25th. 40 km/h speed zone, two lanes, lane one curves left, lane two goes straight. Hands on wheel, eyes on road, vehicle just wanted to keep going straight, I took control, entered gravel and smashed into a boulder. No airbag deployment, but couldn’t drive. Towed out to entrance of park (2 hours, almost $US400 ($545)), then towed from there to Fresno (2.5 hours, $US500 ($682)), then took a 3 hour Uber to San Luis Obispo where I caught a ride from a friend (3 hours, almost $US300 ($409))… then flew home from San Luis Obispo to Flagstaff, AZ ($US613 ($836))… now having transported to Tesla Body Centre in Tempe, AZ (over $US900 ($1,227)).

Just really, really want to figure out how to get this message to Tesla and have them do something and look at their maps… 5 accidents is definitely an issue that needs to be looked into. Thankfully a low speed 40 km/h zone… but it wanted to go straight or so it seems, then it barely followed the curve at the last minute and even with hands on the wheel there was no way I could stop or turn as it entered a gravel-covered area. Just got new CrossClimate SUV tires 3 days earlier… argh.

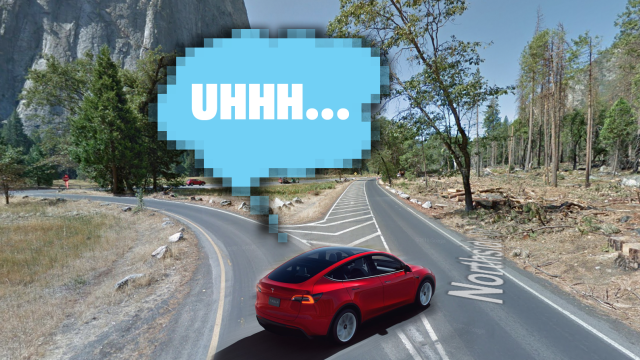

For reference, here’s what this location looks like:

It’s a road that splits off in a Y-type formation, but nothing really that exotic. A highway exit is essentially the same sort of thing, just bigger and higher-speed.

According to the original poster, the Model X wanted to “keep going straight” and while the driver took control away from Autopilot when they realised what was happening, it was a bit too late as the car had entered the gravel area and hit a rock, resulting in the wreck that left the car looking like this:

Interestingly, the owner also mentioned that their Tesla was mistaking the full moon for a yellow traffic light that same weekend, a phenomenon we just covered here recently.

Looking at that split in the road, the alleged confusion of Tesla’s Autopilot could be related to issues seen before with Autopilot and FSD at lane splits, especially ones that use diagonal-hashed road lines to indicate the upcoming split, a road marking that Tesla’s semi-automated systems do not seem to acknowledge.

Not having access to the decision-making tree of the neural nets that compute the path of the Tesla, I don’t really know exactly what the hell the car was pretend-thinking when it tried to keep going straight. It sounds a bit like the car was following the solid lane lines and then, at the point where the split began, may have interpreted the diverging lane lines in the middle as defining a non-existent straight middle lane, but that’s just a guess.

What I can say with more certainty is that what’s going on here appears to be a great example of an issue discussed here just about a month ago: the difference between “top-down” and “bottom-up” reasoning that defines the fundamental differences between human and AI driving.

I suggest reading the full (and not too long, you can read it!) paper from M.L. Cummings about this subject, but I think we can do a quick evaluation of this incident with the concepts in mind as an example.

No reasonably alert human would find this road split difficult, because we use “top-down” reasoning effectively. That means that we, as humans, are able to view the scene in the full context of what we understand the world to be like.

We know generally where we are, we know that roads can sometimes split, and we know that the hashed stripes on the road mean we shouldn’t drive there. We can see the whole terrain and understand that there are lots of rocks about. We know we’re in a national park, and we know how that changes our attentiveness levels and how we’re expected to drive. We get the entire situation, and can use this understanding to adapt to new situations quickly and effectively.

An AI doesn’t enjoy this level of awareness, because AIs haven’t lived full, exciting human lives like you or (maybe to a lesser extent) I have. AIs drive with a “bottom-up” approach, where they’re operating more on a sensor input-reaction cycle, seeing the road lines, acting to keep the car centered in that lane defined by those lines, but with no understanding of the bigger context around those immediate visual inputs.

As a result, the AI has no backup to rely on when confusion happens. When those lane lines split, the system’s brittleness becomes apparent: all it can do is try to find the best match for the job it knows how to do, which is to stay centered in the lane and follow the broader directions, even if the context (like being about to drive into a lovely Sierra Nevada boulder) doesn’t fit.
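To make the bottom-up idea a little more concrete, here’s a toy Python sketch. To be very clear, this is not Tesla’s code, and I have no idea how Autopilot is actually implemented. The names `LaneLines`, `center_target`, `lines_at_split`, and `plausible_lane`, along with the lane-width numbers, are all made up for illustration. It only shows how a controller that does nothing but aim for the midpoint between two detected lines would keep aiming straight ahead as those lines spread apart at a split.

```python
from dataclasses import dataclass


@dataclass
class LaneLines:
    """Hypothetical detected lane lines, as lateral offsets from the car in metres."""
    left_x: float   # negative means left of the car
    right_x: float  # positive means right of the car


def center_target(lines: LaneLines) -> float:
    """Bottom-up rule: aim for the midpoint between the two detected lines."""
    return (lines.left_x + lines.right_x) / 2.0


def lines_at_split(step: int, lane_width: float = 3.6,
                   spread_per_step: float = 0.6) -> LaneLines:
    """Assumed geometry: both lines spread outward symmetrically after the split."""
    half = lane_width / 2.0 + spread_per_step * step
    return LaneLines(left_x=-half, right_x=half)


def plausible_lane(lines: LaneLines, max_width: float = 4.5) -> bool:
    """A crude stand-in for context: is this 'lane' too wide to be a real lane?"""
    return (lines.right_x - lines.left_x) <= max_width


if __name__ == "__main__":
    for step in range(5):
        lines = lines_at_split(step)
        width = lines.right_x - lines.left_x
        print(f"step {step}: width={width:.1f} m, "
              f"target={center_target(lines):+.1f} m, "
              f"plausible={plausible_lane(lines)}")
```

In this made-up geometry, the midpoint target never moves off dead centre even as the "lane" gets absurdly wide, which is the straight-into-the-gore behaviour I’m guessing at above. The `plausible_lane` check is a very rough stand-in for the kind of context a human brings for free.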

We still have a long way to go before full self-driving. Situations like this, unfortunate as they are, are really important examples to see why. Sure, Tesla’s smart programmers can likely train the system to understand this particular situation in the future, but similar yet slightly different road layouts exist in innumerable places all over the world, and there’s no guarantee the AI will be able to associate those with the correct course of action.

I think there are ways to make some manner of mostly self-driving work, likely by setting our goals on an infrastructure-assisted Level 4 as opposed to a fully, universally-autonomous Level 5, but that’s a less sexy solution, which tends to spell doom.

I reached out to the Model X owner for more details, and will update if and when I get a response.

I guess we’ll see. In the meantime, maybe just drive your Tesla manually next time you decide to enjoy Yosemite.