If you’re paying any attention at all to the world of Tesla or the ongoing quest for self-driving cars (not something I’m recommending for healthy, well-adjusted people, necessarily) you’ve probably been hearing a lot about something called Tesla Vision, which is not Tesla’s new Netflix competitor that deepfakes Elon Musk into every movie, but is rather Tesla’s new camera-only semi-automated driving system. Or, to maybe put it more bluntly, it’s a fancy name Tesla gave to the deletion of radar units in their top-selling cars.

Here’s how Tesla describes the new system themselves:

We are continuing the transition to Tesla Vision, our camera-based Autopilot system. Beginning with deliveries in May 2021, Model 3 and Model Y vehicles built for the North American market will no longer be equipped with radar. Instead, these will be the first Tesla vehicles to rely on camera vision and neural net processing to deliver Autopilot, Full-Self Driving and certain active safety features. Customers who ordered before May 2021 and are matched to a car with Tesla Vision will be notified of the change through their Tesla Accounts prior to delivery.

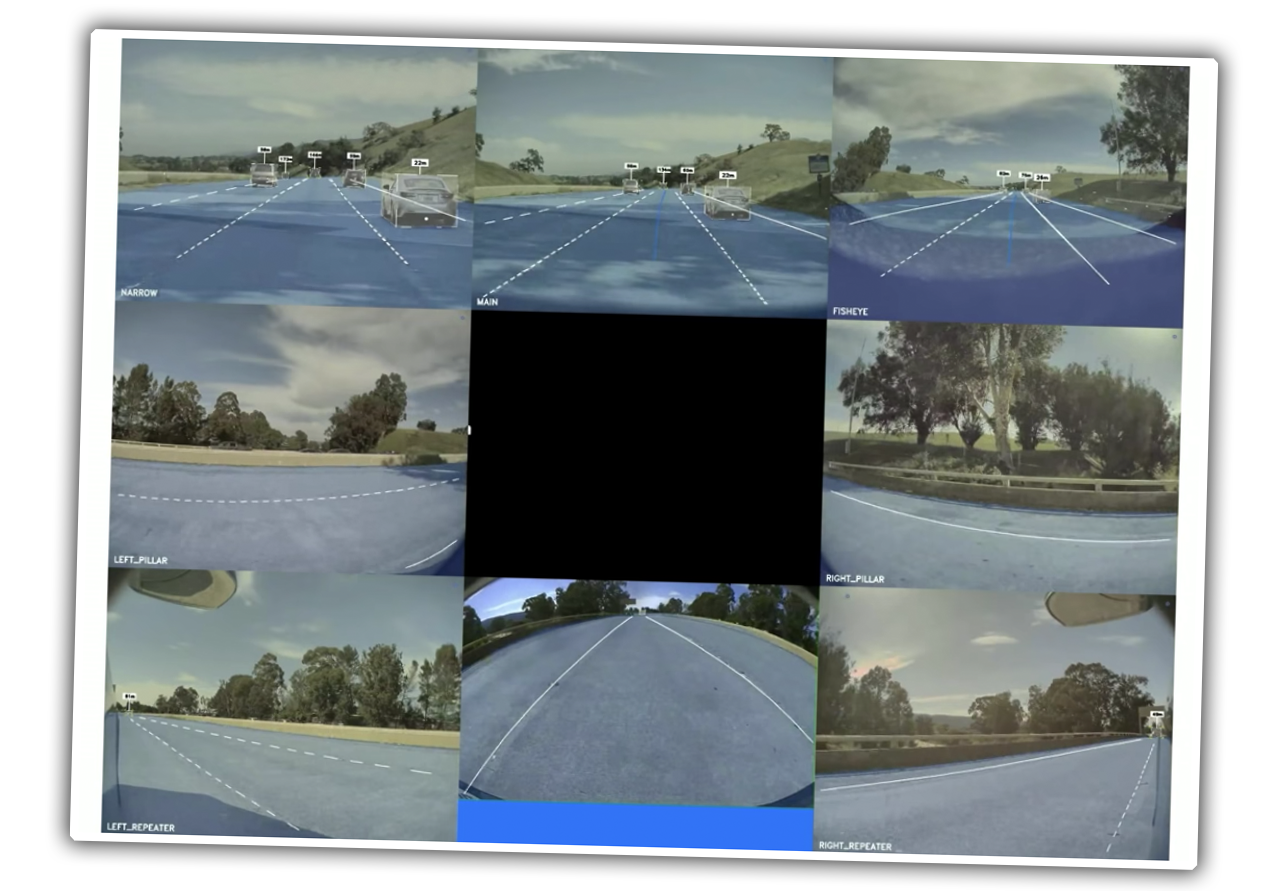

Previously, the radar systems in Tesla’s Autopilot driver-assist system worked in conjunction with the eight cameras used by the car to provide data about velocities and distances of objects. Radar is a very effective sensor for determining how far the car is from an object in front of it (as Tesla only uses forward-facing radar) and can work in the dark or in low visibility conditions that would impair a camera-based solution.

Radar can also bounce waves under the car ahead to see objects that a camera can’t. In this example, a Tesla’s emergency braking feature (many cars have similar radar-based emergency braking systems) stops the car because it spotted a vehicle stopping in front of the one the Tesla was following; you can hear the beeps when it detects the issue:

Interestingly, less than a year ago, it seems that Tesla was investigating the use of a more advanced radar system, as discovered by Tesla researcher/hacker GreenTheOnly:

The vendor appears to be Arbe Robotix and their website has a bunch of material that many of you would find resembling output of a sensor that Tesla cannot include due to perceived loss of face. https://t.co/364G43oXvJ pic.twitter.com/EtDFRzuxdj

— green (@greentheonly) October 22, 2020

This isn’t the path for Model 3s and Ys, though, which will now be radar-less. The loss of the radar seems to have introduced some limitations, according to Tesla:

For a short period during this transition, cars with Tesla Vision may be delivered with some features temporarily limited or inactive, including:

- Autosteer will be limited to a maximum speed of 121 km/h and a longer minimum following distance.
- Smart Summon (if equipped) and Emergency Lane Departure Avoidance may be disabled at delivery.

In the weeks ahead, we’ll start restoring these features via a series of over-the-air software updates. All other available Autopilot and Full Self-Driving features will be active at delivery, depending on order configuration.

On previous radar-equipped cars, Autosteer would work up to 90 MPH (about 145 km/h, against the new cap of 121 km/h, or roughly 75 MPH), and the longer minimum following distance is likely there to make up for the lack of the quick and accurate radar-based distance sensor.
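To put the speed caps quoted above on one scale, here is a rough back-of-the-envelope sketch. The unit conversion is exact; the time gaps in the loop are purely hypothetical values picked for illustration, since Tesla has not said what the longer minimum following distance actually is.

```python
KMH_PER_MPH = 1.609344  # exact, by definition of the international mile


def mph_to_kmh(mph: float) -> float:
    """Convert miles per hour to kilometres per hour."""
    return mph * KMH_PER_MPH


def kmh_to_mph(kmh: float) -> float:
    """Convert kilometres per hour to miles per hour."""
    return kmh / KMH_PER_MPH


def gap_metres(speed_kmh: float, time_gap_s: float) -> float:
    """Distance covered at constant speed during a given time gap."""
    return speed_kmh / 3.6 * time_gap_s


old_cap_kmh = mph_to_kmh(90)   # Autosteer cap on radar-equipped cars
new_cap_mph = kmh_to_mph(121)  # Autosteer cap quoted for Tesla Vision cars

print(f"90 mph is about {old_cap_kmh:.0f} km/h")
print(f"121 km/h is about {new_cap_mph:.0f} mph")

# HYPOTHETICAL time gaps, only to show how much road each second represents.
for gap_s in (1.0, 2.0, 3.0):
    print(f"{gap_s:.0f} s gap at 121 km/h = {gap_metres(121, gap_s):.1f} m")
```

The point is only that every extra second of following gap at highway speed buys a few dozen metres of margin, which is one plausible way to compensate for a less certain distance estimate.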

The part about Smart Summon and Emergency Lane Departure Avoidance (steers you back into your lane if it detects you’ve drifted out of it) maybe being disabled is confusing. Why can’t they say for sure if it’ll be disabled or not? Do those systems rely heavily on radar or don’t they?

The reasons why Tesla is eliminating radar from their highest-volume cars aren’t known just yet. While Tesla has long advocated for a more vision-based system (hence their rejection of Lidar) they haven’t been anti-radar, and they are keeping radar units on the more expensive Models S and X, which leads to speculation that there are supply/cost issues motivating the removal.

Of course, among the Children of Elon, the elimination of a whole sensor system was cause for celebration and praise:

Tesla Vision: What other company would have the courage to make such a step!? I takes so much will to *actually do* what you otherwise just *could do*. But this is the only way progress goes. Such a bold move. Bullish. $TSLA #Tesla @WholeMarsBlog @elonmusk

— Jonas ✨⚡️ (@jonastsla) May 26, 2021

I’m just not convinced this is really a “courage” based decision, here. Without radar, all of the depth perception will have to be done algorithmically using input from two of the three forward-facing cameras, which have different lenses. It’s not ideal, as camera-based systems have to deal with all sorts of interference from light and weather and any number of things, where radar can give much more consistent distance readings.
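To get a feel for why camera-only depth is harder at range, here is a minimal sketch of textbook stereo triangulation. This is not Tesla's method: their system uses neural nets, and the forward cameras have different lenses, so they are not a simple matched stereo pair. Every number below (focal length, baseline, matching error) is a hypothetical placeholder, not a measured Tesla spec.

```python
def depth_from_disparity(focal_px: float, baseline_m: float,
                         disparity_px: float) -> float:
    """Classic pinhole stereo: depth Z = f * B / d."""
    if disparity_px <= 0:
        raise ValueError("disparity must be positive")
    return focal_px * baseline_m / disparity_px


def depth_error(focal_px: float, baseline_m: float,
                depth_m: float, disparity_error_px: float) -> float:
    """First-order depth uncertainty: dZ ~ Z^2 / (f * B) * dd."""
    return depth_m ** 2 / (focal_px * baseline_m) * disparity_error_px


# HYPOTHETICAL values, chosen only to make the arithmetic easy to follow.
FOCAL_PX = 1000.0      # focal length expressed in pixels
BASELINE_M = 0.10      # spacing between the two cameras
MATCH_ERROR_PX = 0.25  # how precisely a feature can be matched

for depth in (10.0, 50.0, 100.0):
    err = depth_error(FOCAL_PX, BASELINE_M, depth, MATCH_ERROR_PX)
    print(f"object at {depth:5.0f} m -> depth uncertainty about {err:.2f} m")
```

In this simplified model the uncertainty grows with the square of the distance, so doubling the range quadruples the error. A learned system may do better or worse than this, but it is a reminder that geometry is not on the side of closely spaced cameras at long range.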

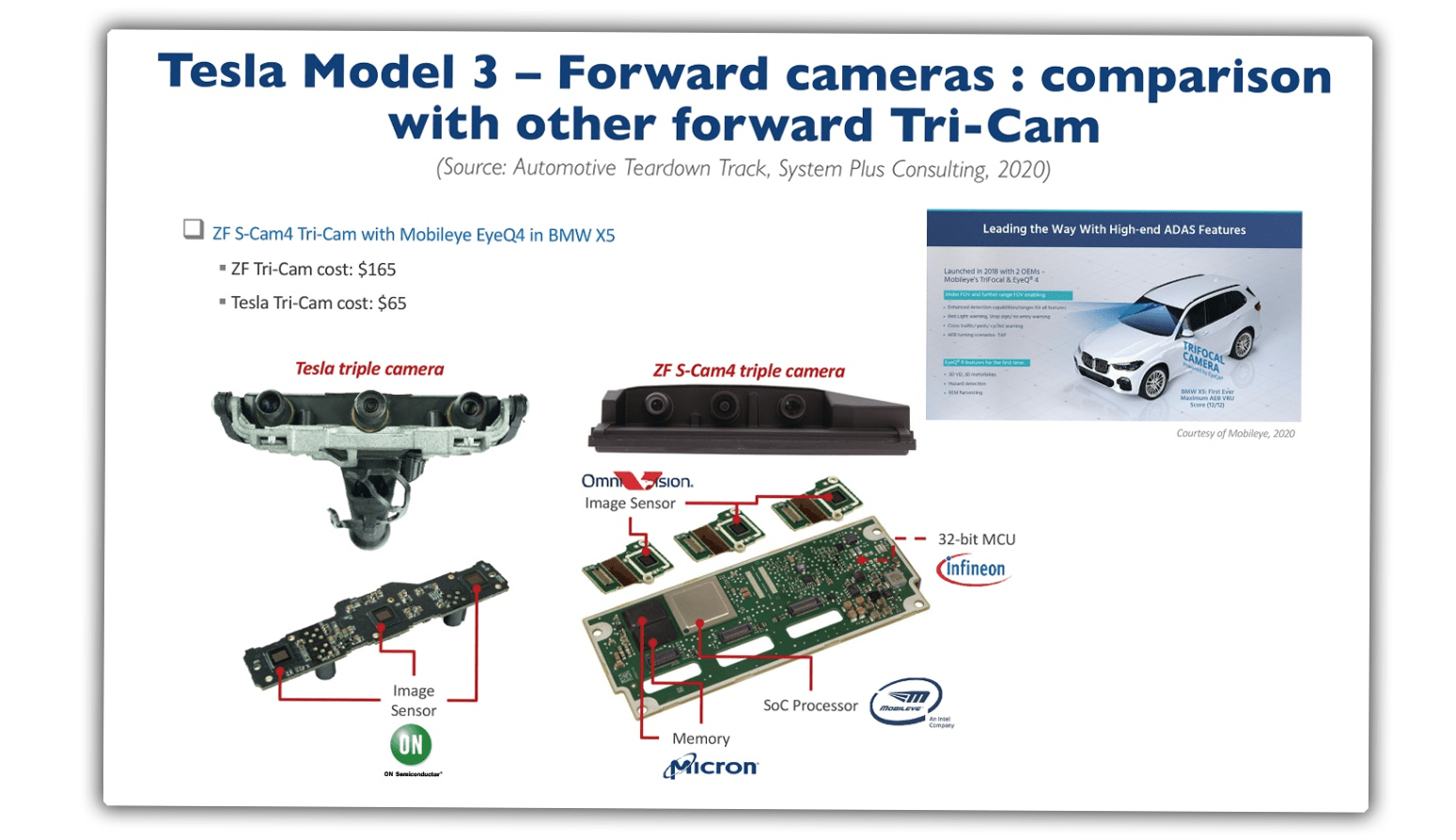

Plus, it’s not like those cameras are especially good; a teardown of the Model 3’s camera systems described the 1280×960 resolution cameras like this:

Eight cameras in total designed into Model 3 are based on the same 1.2 Megapixel image sensors released by On Semiconductor in 2015. “They are low cost. They are neither new nor high resolution,” observed Fraux.

The same report described the radar setup like this:

The package also incorporates a forward-facing radar system with improved processing capabilities. It provides additional data about the surrounding environment on a redundant wavelength that can see through heavy rain, fog, dust and even beyond previous cars.

The key parts there are that the radar provides redundant distance data that can work in conditions cameras can’t; all of this seems pretty valuable to me. The radar unit used on the Model 3, a Continental ARS4-B, is used on a lot of other mid-priced cars like the Volkswagen Tiguan and Nissan Rogue, and isn’t a particularly expensive component, considering.

Other people, including well-known Tesla expert E.W. Niedermeyer, have noted the reality of the Model 3/Y cameras as well:

I’ll say this much for Tesla’s big move today: “Tesla Vision” definitely sounds a lot better than “the same-old 2015-era cameras that a teardown once called ‘mature’ and ‘all about cost.’”

Or, even more accurately, “putting your life in the hands of a $65 tri-camera setup.” https://t.co/PRw2vXHk5a

— E.W. Niedermeyer (@Tweetermeyer) May 25, 2021

And, remember, Tesla’s cameras are not redundant; if the ones in that central pod of three get obscured by ice or rain or squished bugs or bird shit, or even blinded by a sunrise/sunset, Autopilot disengages, and when it does, I sure as hell hope someone in that car is ready to take over.

There’s another justification for the decision that I’ve seen before in other contexts, and I don’t really like it:

Given Tesla’s ability to capture and label data at fleet-wide scale this is a smart approach. Computer Vision continues to advance quickly and we have a proof point in that human drivers don’t need radar :).

— Mike Schroepfer (@schrep) May 25, 2021

Here, our buddy Mike (who happens to be Facebook’s CTO) notes (correctly, I checked) that human drivers don’t need radar.

I’ve heard similar defences of a vision-only approach, and claims that a Tesla can drive better than a human because we only have two little mobile cameras and a Tesla has eight.

This is true, but it’s an idiotic comparison, because the way visual data is processed from biological cameras (eyes) and technological ones (actual cameras) couldn’t be more different.

Mammalian binocular vision with depth perception has been “in development” for millions of years, and the need to track prey while running in a wide variety of light and weather conditions adapts very well to the tasks of driving — there’s a hell of a lot of complex visual processing going on in a human brain when we drive, or catch a ball, thread a needle, or chase a dog escaped from their leash.

We don’t need radar because we’ve evolved in a world where depth perception is important, and we’re quite good at judging it, and none of how we do it is remotely like the HydraNet architecture of Tesla’s FSD computer, even if we call those “neural” nets.

The approaches just aren’t the same. My dishwasher has spinning arms that spray water over dishes and I don’t, but I can wash dishes, too — albeit in a completely different way.

I’m sure it’s possible to do most of the Level 2, driver-always-ready-to-take-over semi-automation of Autopilot with just a camera-based system. Tesla is already shipping cars that will do it. I’m not convinced it’s better than having a redundant sensor system, and I’m not alone there.

Here’s Tesla hacker/researcher GreenTheOnly again:

“HELP WANTED: if you pay us a bunch of money we’ll let you play a role of crash test dummies while we figure out how to safely use vision. Even if you already paid, we’ll let you know if you have opportunity to participate. Don’t pass this up, we really need your help!”

— green (@greentheonly) May 25, 2021

Elon himself provided another explanation, one that I find especially unconvincing. This is from April, when the “pure vision” approach was being discussed:

When radar and vision disagree, which one do you believe? Vision has much more precision, so better to double down on vision than do sensor fusion.

— Elon Musk (@elonmusk) April 10, 2021

The problems of sensor fusion are indeed difficult, but the result is a much richer view of the world, and more redundancy. Just because it’s hard shouldn’t mean that it should be abandoned, at least not for safety-critical things like cars. At one time, Elon was really into radar, too:

“Being radar it can see through rain, fog, snow, dust, and it recovers quite easily,” Musk said earlier in the call when describing radar’s advantages over the camera. “So even if there was something where you’re driving down the road and visibility was very low and there was a big multi-car pileup ahead of you, you can’t see it, but the radar worked, it would initiate braking before your car being added to the multi-car pileup.”

What happened to all that, Elon? It’s all still true, and it shows how radar can fill in some of the holes of a purely visual system.
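For what it's worth, “which one do you believe?” has a standard textbook answer: you don't pick one, you weight each sensor by how much you trust it. Here is a minimal sketch of inverse-variance weighting, assuming two independent range estimates with roughly Gaussian errors. The `RangeEstimate` type, the `fuse` function and the sample readings are all hypothetical illustrations, not anything taken from Tesla's software.

```python
from dataclasses import dataclass


@dataclass
class RangeEstimate:
    metres: float   # estimated distance to the object ahead
    std_dev: float  # 1-sigma uncertainty of that estimate, in metres


def fuse(a: RangeEstimate, b: RangeEstimate) -> RangeEstimate:
    """Inverse-variance weighted average of two independent estimates."""
    weight_a = 1.0 / a.std_dev ** 2
    weight_b = 1.0 / b.std_dev ** 2
    total = weight_a + weight_b
    fused_metres = (weight_a * a.metres + weight_b * b.metres) / total
    fused_std = (1.0 / total) ** 0.5
    return RangeEstimate(fused_metres, fused_std)


# HYPOTHETICAL readings: vision is less sure of range than radar here.
vision = RangeEstimate(metres=52.0, std_dev=4.0)
radar = RangeEstimate(metres=48.0, std_dev=1.0)

fused = fuse(vision, radar)
print(f"fused range: {fused.metres:.1f} m (+/- {fused.std_dev:.2f} m)")
```

Under these assumptions the fused estimate lands between the two readings, closer to the more certain one, and its uncertainty is smaller than either sensor's alone. Real sensor fusion is far messier than this (correlated errors, false radar returns, timing mismatches), which is presumably the hard part Elon is pointing at, but the basic payoff of a second, independent sensor is visible even in the toy version.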

I don’t think anyone outside of Tesla knows exactly why Tesla has decided to eliminate radar — cost issues, supply chain issues, complexity of integrating radar data with vision data, genuine belief in a vision-only system — but I don’t think they’ve yet managed to definitively prove that not having a redundant distance-measuring system that operates on different inputs than the primary system is a good idea.

If the goal is to be safer than a human driver, then I’m not yet convinced this makes sense. Maybe it’ll work out, for the current limited driver-assist level automation, but if Tesla’s still claiming they can get to full Level 5 autonomy by the end of this year, I don’t think the hardware in their current lineup can pull that off, and eliminating a whole sensor system like radar isn’t going to help.