Tesla, as usual, is very generous in providing us with lots to talk about, especially when it comes to their Level 2 driver assist system known, confusingly, as Autopilot and/or Full Self Driving (FSD). Yesterday there was a crash of a Tesla using Autopilot that hit a cop car, and now a video of a largely Autopilot-assisted drive through Oakland is making the rounds, generating a lot of attention due to the often confusing and/or just poor decisions the car makes. Strangely, though, it’s the crappiness of the system’s performance that may just help people to use it safely.

All of this comes on the heels of a letter from the National Transportation Safety Board (NTSB) to the U.S. Department of Transportation (USDOT) regarding the National Highway Traffic Safety Administration’s (NHTSA) “advance notice of proposed rulemaking” (ANPRM), where the NTSB is effectively asking what the fuck (WTF) we should be doing with regard to autonomous vehicle (AV) testing on public roads.

From that letter:

Because NHTSA has put in place no requirements, manufacturers can operate and test vehicles virtually anywhere, even if the location exceeds the AV control system’s limitations. For example, Tesla recently released a beta version of its Level 2 Autopilot system, described as having full self-driving capability. By releasing the system, Tesla is testing on public roads a highly automated AV technology but with limited oversight or reporting requirements.

Although Tesla includes a disclaimer that “currently enabled features require active driver supervision and do not make the vehicle autonomous,” NHTSA’s hands-off approach to oversight of AV testing poses a potential risk to motorists and other road users.

At this point, the NTSB/NHTSA/USDOT letter isn’t proposing any solutions, just bringing up something that we’ve been seeing for years now: Tesla and other companies are beta-testing self-driving car software on public roads, surrounded by other drivers and pedestrians who have not consented to be part of any test, and, in this beta software test, the crashes have the potential to be literal.

All of this gives some good context for the Autopilot in Oakland video, highlights of which can be seen in this tweet

ok i’m never getting into a self-driving tesla pic.twitter.com/yeHx3MzyQr

— Bes D. Socialist (@besf0rt) March 17, 2021

… and the full, 13-and-a-half minute video can be watched here:

There’s so much in this video worth watching if you’re interested in Tesla’s Autopilot/FSD system. The video uses what I believe is the most recent version of the FSD beta, version 8.2, and there are many other driving videos of that version available online.

There’s no question the system is technologically impressive; doing any of this is a colossal achievement, and Tesla engineers should be proud.

At the same time, though, it isn’t close to being as good as a human driver, at least in many contexts. And, yes, as a Level 2 semi-autonomous system, it requires a driver to be alert and ready to take over at any moment, a task humans are notoriously bad at, which is why I think any L2 system is inherently flawed.

While many FSD videos show the system in use on highways, where the overall driving environment is far more predictable and easier to navigate, this video is interesting precisely because city driving is so much more difficult.

It’s also interesting because the guy in the passenger seat is such a constant and unflappable apologist, to the point where if the Tesla attacked and mowed down a litter of kittens he’d praise it for its excellent ability to track a small target.

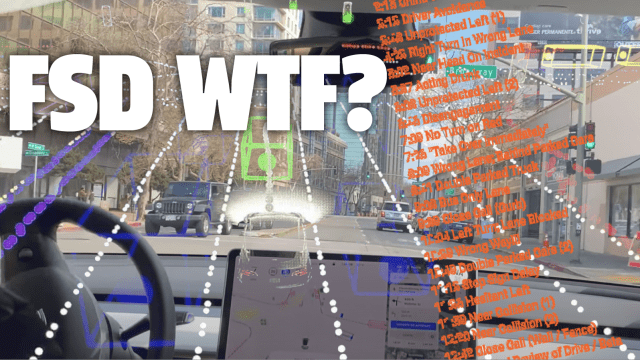

In the course of the Oakland drive there are plenty of places where the Tesla performs just fine. There are also places where it makes some really terrible decisions: driving in the oncoming traffic lane, turning the wrong way down a one-way street, weaving around like a drunk robot, clipping curbs, or just stopping, for no clear reason, right in the middle of the road.

In fact, the video is helpfully divided into chapters based on these, um, interesting events:

0:00 Introduction

0:42 Double Parked Cars (1)

1:15 Pedestrian in Crosswalk

1:47 Crosses Solid Lines

2:05 Disengagement

2:15 China Town

3:13 Driver Avoidance

3:48 Unprotected Left (1)

4:23 Right Turn In Wrong Lane

5:02 Near Head On Incident

5:37 Acting Drunk

6:08 Unprotected Left (2)

6:46 Disengagement

7:09 No Turn on Red

7:26 “Take Over Immediately”

8:09 Wrong Lane; Behind Parked Cars

8:41 Double Parked Truck

9:08 Bus Only Lane

9:39 Close Call (Curb)

10:04 Left Turn; Lane Blocked

10:39 Wrong Way!!!

10:49 Double Parked Cars (2)

11:13 Stop Sign Delay

11:36 Hesitant Left

11:59 Near Collision (1)

12:20 Near Collision (2)

12:42 Close Call (Wall / Fence)

12:59 Verbal Review of Drive / Beta

That reads like the track list of a very weird concept album.

Nothing in this video, objectively impressive as it is, says this machine drives better than a human. If a human did the things seen here you’d be loudly inquiring what the hell was wrong with them, over and over.

Some situations are clearly ones the software hasn’t been programmed to understand, like how parked cars with hazard lights on are obstacles that should be carefully driven around. Other situations are the result of the system poorly interpreting camera data, or overcompensating, or just having difficulties processing its environment.

Some of the defenses of the video help to bring up the bigger issues involved:

How many human accidents is there in a day ? Way more than any autonomous car. Way safer than the normal driver. It’s easily 10x safer than the normal driver. Yes FSD is in beta

— Zain (@ZainS180) March 18, 2021

The argument that there are many, many more human accidents in a given day is very deceptive. Sure, there are many more, but there are also vastly more humans driving cars, and even if the numbers were equal, no carmaker is trying to sell shitty human drivers.

Plus, the reminder that FSD is in beta only serves to bring us back to that NTSB letter with all the acronyms: should we be letting companies beta test self-driving car software in public with no oversight?

Tesla’s FSD is not yet safer than a normal human driver, which is why videos like this one, showing many troubling FSD driving events, are so important, and could save lives. These videos erode some trust in FSD, which is exactly what needs to happen if this beta software is going to be safely tested.

Blind faith in any L2 system is how you end up crashed and maybe dead. Because L2 systems give little to no warning when they need humans to take over, an untrusting person behind the wheel is far more likely to be ready to take control.

I’m not the only one suggesting this, either:

The fact that “Full Self-Driving” is so laughably bad is actually the main reason we haven’t had crashes yet. If it improves to the point where it only makes a potentially fatal screwup every 100 miles or more, that’s when people will become inattentive and over-trusting. https://t.co/dxh43iI0jX

— E.W. Niedermeyer (@Tweetermeyer) March 17, 2021

The paradox here is that the better a Level 2 system gets, the more likely it is to make the people behind the wheel trust it, which means the less attention they will pay, which leads to them being less able to take control when the system actually needs them to.

That’s why most crashes with Autopilot happen on highways, where a combination of generally good Autopilot performance and high speeds leads to poor driver attention and less reaction time, which can lead to disaster.

All Level 2 systems — not just Autopilot — suffer from this, and hence all are garbage.

While this video clearly shows that FSD’s basic driving skills still need work, Tesla’s focus should be not on that, but on figuring out safe and manageable failover procedures, so immediate driver attention is not required.

Until then, the best case for safe use of Autopilot, FSD, Super Cruise, or any other Level 2 system is to watch all these videos of the systems screwing up, lose some trust in them, and remain kind of tense and alert when the machine is driving.

I know that’s not what anyone wants from autonomous vehicles, but the truth is they are still very much not done yet. Time to accept that and treat them that way if we ever want to make real progress.

Getting defensive and trying to sugarcoat crappy machine driving helps nobody.

So, if you love your Tesla and love Autopilot and FSD, watch that video carefully. Appreciate the good parts, but really, really accept the bad parts. Don’t try and make excuses. Watch, learn, and keep these fuckups in the back of your mind when you sit behind a wheel you’re not really steering.

It’s no fun, but this stage of any new technology always takes work, and work isn’t always fun.