A new study in Nature suggests that shifting reader attention online can help combat the spread of inaccurate information. The study, published on March 17, 2021, found that while people prefer to share accurate information, it is difficult to convince the average social media user to seek it out before sharing. Adding a roadblock, in the form of a request to rate the accuracy of a piece of information, can improve the quality of what they share online.

“These findings indicate that people often share misinformation because their attention is focused on factors other than accuracy — and therefore they fail to implement a strongly held preference for accurate sharing,” write the authors, suggesting that people usually want to do good but often fail in the heat of the moment. “Our results challenge the popular claim that people value partisanship over accuracy and provide evidence for scalable attention-based interventions that social media platforms could easily implement to counter misinformation online.”

The paper, co-authored by David Rand, Gordon Pennycook, Ziv Epstein, Mohsen Mosleh, Antonio Arechar, and Dean Eckles, even suggests a remedy.

First, the researchers confirmed that the average social media user was more concerned with accuracy than with partisan politics of any stripe. Although participants' sharing showed some partisan bias in one direction or the other, the researchers found that most members of a 1,001-subject cohort chose accuracy over inflammatory or potentially “hot” content.

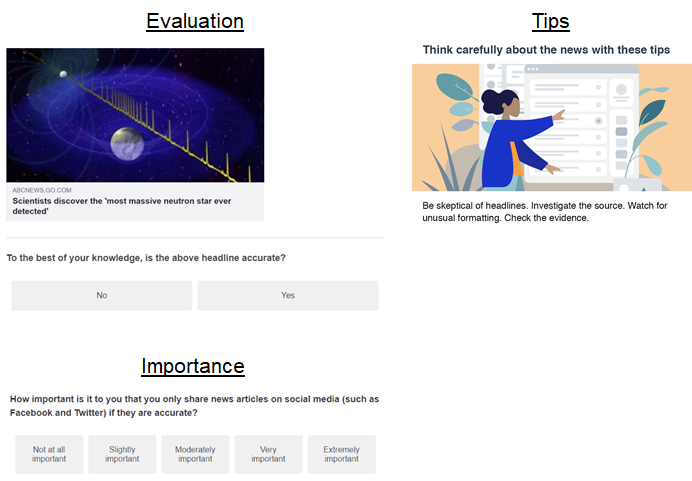

The researchers went on to recommend adding roadblocks to the process of sharing news and information online, reducing the chance that an inaccurate story slips past the reader’s internal censor. From the study:

> To test whether these results could be applied on social media, the researchers conducted a field experiment on Twitter. “We created a set of bot accounts and sent messages to 5,379 Twitter users who regularly shared links to misinformation sites,” explains Mosleh. “Just like in the survey experiments, the message asked whether a random nonpolitical headline was accurate, to get users thinking about the concept of accuracy.” The researchers found that after reading the message, the users shared news from higher-quality news sites, as judged by professional fact-checkers.

In other words, many users don’t share fake news because they want to, but because they don’t think about what they’re sharing before they press the button. Slowing down the process by asking users whether or not they actually trust a headline or news source makes them more likely to think twice before sharing misinformation.

The team has also worked on a number of proposed user interface changes, including simply asking readers to be sceptical of headlines, and implemented several of these for Google’s Jigsaw internet safety project.

The warnings and notifications put the user at the centre of the question of information accuracy, engaging them in critical thinking rather than mindless sharing. Other interfaces simply show warnings before you read further, a welcome change from the click-and-forget attitude most of us have while browsing the internet.

“Social media companies by design have been focusing people’s attention on engagement,” said Rand. “But they don’t have to only pay attention to engagement — you can also do proactive things to refocus users’ attention on accuracy.”