Smartphones have come a long way toward becoming the best cameras we carry. Years of upgrades to megapixel counts, multiple lenses, optical zoom, image stabilisation and more have brought us to today’s handsets, but much of the work in capturing a great photo now happens on the software side.

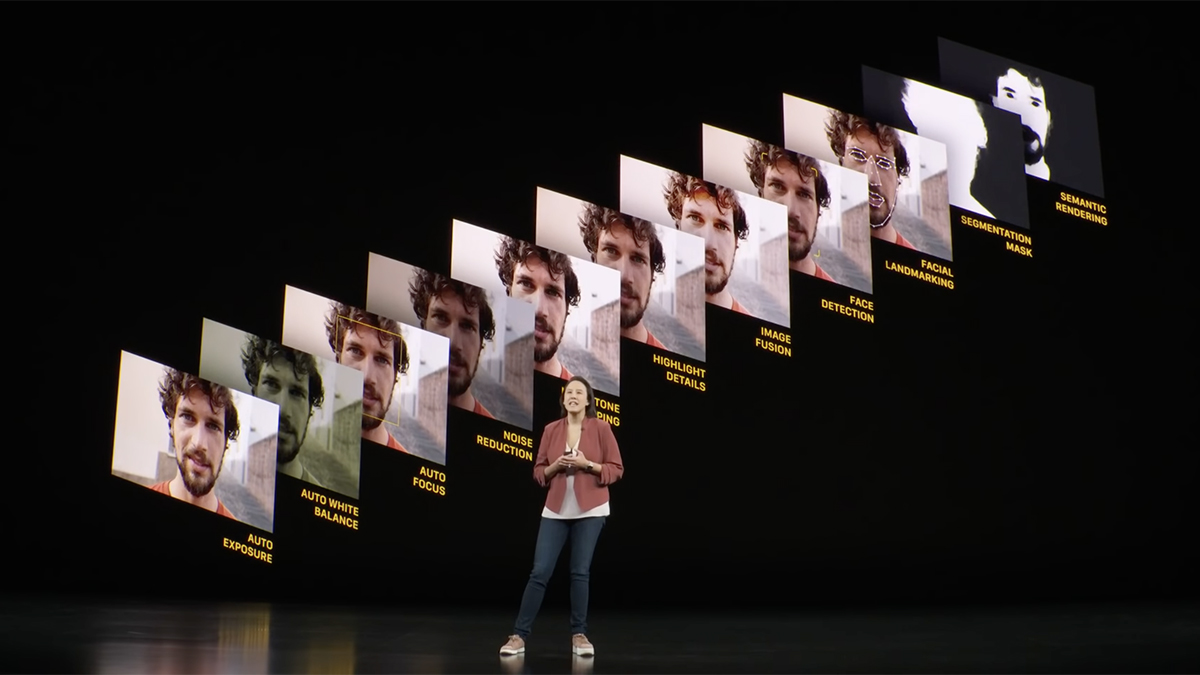

Phone cameras can take pictures with a ton of extra information attached to them, including data about lighting, colour, intensity, focus and so on. These additional bits of data can then be used to transform the photo after it’s been taken.

Taking multiple photos in an instant is also a key part of this new era of computational phone photography, because photos can be compared, analysed, and combined to adjust colours, fix focus, and apply a variety of other smart tricks to an image. These tricks and the machine learning networks underneath them vary between phone manufacturers, but there are some broad techniques that they’re all using.

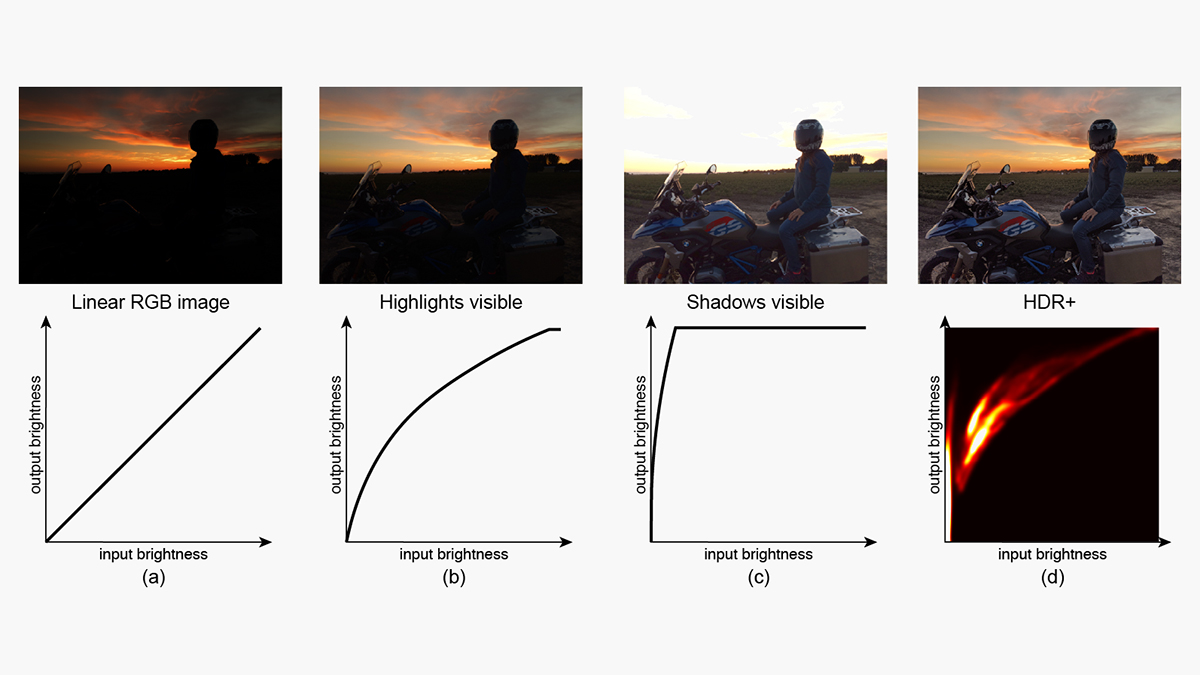

Balanced exposures

High Dynamic Range (HDR) has been with us for a while, on TVs and movie streaming services as well as smartphone cameras. The idea is to keep details visible in both the darkest and lightest areas of a picture — avoiding the silhouette effect you might see when taking a photo of someone standing in front of a bright window, for example.

By taking multiple photos with multiple exposures, smartphones can grab the details in all areas of the picture, effectively applying different processing rules to different sections of the image. The bright spots get a certain tweak, the dark spots get another tweak, and so on until the picture is balanced.
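To make the idea concrete, here is a minimal sketch of merging two exposures pixel by pixel. It is not any manufacturer’s actual pipeline: the function names, the `sigma` value and the tiny greyscale frames (brightness from 0.0 to 1.0) are all assumptions made for illustration. Each pixel is weighted by how close it sits to mid-grey, so blown-out and crushed pixels count for less in the blend.

```python
import math

def well_exposedness(value, sigma=0.2):
    """Weight a pixel (0.0 to 1.0) by how close it is to mid-grey.

    sigma is an illustrative choice, not a value taken from any real phone.
    """
    return math.exp(-((value - 0.5) ** 2) / (2 * sigma ** 2))

def fuse_exposures(frames):
    """Blend same-sized greyscale frames into one, pixel by pixel."""
    height, width = len(frames[0]), len(frames[0][0])
    fused = []
    for y in range(height):
        row = []
        for x in range(width):
            values = [frame[y][x] for frame in frames]
            weights = [well_exposedness(v) for v in values]
            # Weighted average: well-exposed pixels dominate the result.
            row.append(sum(w * v for w, v in zip(weights, values)) / sum(weights))
        fused.append(row)
    return fused

# Hypothetical 2x2 frames: the left column is a shadow, the right a bright window.
under = [[0.02, 0.45], [0.01, 0.50]]  # short exposure: shadows crushed
over = [[0.40, 1.00], [0.55, 0.98]]   # long exposure: window blown out

fused = fuse_exposures([under, over])
```

In the fused result, the shadow pixels lean on the long exposure and the window pixels lean on the short one. Real pipelines also have to align the frames and smooth the joins between regions, which this sketch skips.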

As Google explains, these HDR effects are improving as phones get faster and algorithms get smarter. They’re becoming more natural-looking and more balanced, and they can now be previewed in real time through the phone screen, rather than everything being done after the shutter button has been pressed.

What’s also getting better, through the ever-improving algorithms that have been trained on libraries of millions of images, is the way that different sections of a photo can be stitched back together. To the user, there should be no sign at all that the phone has pulled together multiple exposures and broken up the individual images to treat different segments of them separately: It should just look like one photo, snapped in an instant.

Background blurs

Portrait mode was one of the very first computational photography tricks to roll out as a built-in smartphone feature, and just like HDR, the results are getting more impressive and more accurate as time goes on. Information from multiple lenses and depth sensors (if the phone has them) is combined with smart guesses from neural networks to figure out which parts of a picture should be blurred and which shouldn’t.

Again, multiple pictures can be used in combination, with the ever-so-slight differences between them telling the image-processing algorithms which objects are closer to the camera than others — and which pixels need blurring. These algorithms can also be deployed to judge whether or not the photo is suitable for a portrait shot to begin with.
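Once a phone has a depth estimate for each pixel, the blurring step itself is simple. The sketch below assumes a depth map is already available (how it is produced is the hard part, and is not shown), and it uses a plain box blur rather than the lens-like blur real portrait modes aim for. The names `portrait_blur` and `focus_limit` are hypothetical.

```python
def box_blur_at(image, y, x, radius):
    """Average the pixels in a square window around (y, x), clipped at the edges."""
    height, width = len(image), len(image[0])
    total, count = 0.0, 0
    for ny in range(max(0, y - radius), min(height, y + radius + 1)):
        for nx in range(max(0, x - radius), min(width, x + radius + 1)):
            total += image[ny][nx]
            count += 1
    return total / count

def portrait_blur(image, depth, focus_limit, radius=1):
    """Blur only the pixels whose depth is beyond focus_limit."""
    return [
        [
            box_blur_at(image, y, x, radius) if depth[y][x] > focus_limit else image[y][x]
            for x in range(len(image[0]))
        ]
        for y in range(len(image))
    ]

# Hypothetical 3x3 greyscale image: the left column is the subject (1 m away),
# the other two columns are background (5 m away).
image = [[0.9, 0.1, 0.8], [0.9, 0.2, 0.1], [0.9, 0.7, 0.3]]
depth = [[1.0, 5.0, 5.0], [1.0, 5.0, 5.0], [1.0, 5.0, 5.0]]

result = portrait_blur(image, depth, focus_limit=2.0)
```

The subject column comes through untouched while the background pixels are replaced by local averages. A production version would also scale the blur strength with distance and take care not to smear subject pixels into the background.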

Recent improvements are adding more data to the mix, such as which areas of an image are fractionally sharper than others, which again can indicate how far an object is from the camera. You can see the progress being made year over year: Cameras capture more detail and new signals, and the AI processing gets better at putting everything together in a finished image.

Not every step forward is because of software, though. The introduction of LiDAR on the Pro models of the latest iPhone 12 handsets means that more depth data can be captured right from the start, and hardware upgrades will continue to progress alongside software ones.

Low-light photography

We’ve seen some spectacular leaps forward in low-light photography in recent years, and it’s largely due to computational photography. Again, the idea is that multiple photos with multiple settings are combined to bring out as much detail as possible, while keeping noise to a minimum. This type of processing is now so good that it can almost make the nighttime look like the daytime.
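A rough way to see why combining frames keeps noise down: random sensor noise differs from shot to shot, so averaging many frames of the same scene cancels much of it out. The simulation below is only a sketch. The flat dim scene, the noise level and the frame count are made-up values, and it assumes the frames are already perfectly aligned, which a real phone has to work hard to achieve.

```python
import random
import statistics

def capture_noisy_frame(scene, noise_sigma, rng):
    """Simulate one shot: the true scene plus random noise on every pixel."""
    return [value + rng.gauss(0.0, noise_sigma) for value in scene]

def stack_frames(frames):
    """Average aligned frames pixel by pixel."""
    return [statistics.fmean(pixels) for pixels in zip(*frames)]

def rms_error(estimate, truth):
    """Root-mean-square difference between an estimate and the true scene."""
    return (sum((e - t) ** 2 for e, t in zip(estimate, truth)) / len(truth)) ** 0.5

rng = random.Random(0)   # fixed seed so the run is repeatable
scene = [0.05] * 500     # hypothetical dim, flat scene
frames = [capture_noisy_frame(scene, 0.1, rng) for _ in range(16)]

stacked = stack_frames(frames)
single_error = rms_error(frames[0], scene)
stacked_error = rms_error(stacked, scene)
```

For independent noise, the error shrinks roughly with the square root of the number of frames, so 16 frames should cut it to around a quarter of a single shot’s.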

The compromise that’s made here is time: Low-light photo modes need more seconds to capture as much light as they possibly can. In the case of the astrophotography mode on the Pixel phones, you might be waiting several minutes for the capture to finish, and the phone needs to be kept perfectly still to achieve usable results.

Even with the extra time and camera stability required, it’s barely believable that we can now take photos of a starry sky with a mid-range phone that fits in a pocket. Neural networks are stacked on neural networks, with some dedicated to picking out the faintest points of light and others solving other tricky problems — such as how to nail white balance in such a challenging shot.

This technology is constantly moving forward too. The neural networks behind it are getting more adept at recognising which parts of a photo are which, working out how to focus on objects in darkness and make them as sharp as possible, and identifying the difference between a dark sky and a dark mountain — so you don’t suddenly end up with stars that are in a perfectly bright blue sky.

Other improvements

The benefits of computational photography don’t stop there. Multiple frames can also be analysed and pushed through algorithms to reduce noise and increase sharpness, which is the area that Apple’s Deep Fusion technology covers. It looks at multiple exposures and then applies artificial intelligence techniques to pick the best options for crisp detail, sometimes down to the level of individual pixels. The final photo combines the best bits of the multiple snaps that have been taken.
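Apple hasn’t published how Deep Fusion works, so the following is emphatically not its algorithm. It is just a toy illustration of what choosing the crispest source for each pixel could look like, using a hand-written detail score on a single row of pixels instead of a neural network. The function names and sample values are invented.

```python
def local_detail(row, x):
    """Score a pixel by how much it differs from its immediate neighbours."""
    left = row[max(x - 1, 0)]
    right = row[min(x + 1, len(row) - 1)]
    return abs(row[x] - left) + abs(row[x] - right)

def pick_sharpest(rows):
    """For each position, take the pixel from whichever frame scores highest there."""
    width = len(rows[0])
    return [max(rows, key=lambda row: local_detail(row, x))[x] for x in range(width)]

# Two hypothetical captures of the same edge: one crisp, one slightly smeared.
sharp = [0.0, 0.0, 1.0, 1.0]
blurry = [0.0, 0.3, 0.7, 1.0]

merged = pick_sharpest([sharp, blurry])
```

Here the merged row recovers the crisp edge. A scheme this naive would also happily pick noise over real detail, which is part of why phones lean on trained models for the job.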

Pixel binning is another related technology that you might hear mentioned when it comes to top-end smartphone cameras. It essentially combines information from several neighbouring pixels on a camera sensor to create one master, super-accurate pixel in a finished image. That means finished pictures have a lower resolution than the megapixel rating of the camera might suggest, but they should be sharper and clearer too.
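In its simplest form, binning averages each small block of sensor pixels into one output pixel. The sketch below does this for 2×2 blocks on a made-up greyscale sensor. Real sensors bin pixels of the same colour under their colour filter, often in hardware, so treat this as an illustration of the arithmetic only.

```python
def bin_pixels(sensor, factor=2):
    """Average each factor x factor block of sensor values into one output pixel.

    Any leftover rows or columns that don't fill a whole block are dropped.
    """
    height, width = len(sensor), len(sensor[0])
    binned = []
    for y in range(0, height - height % factor, factor):
        row = []
        for x in range(0, width - width % factor, factor):
            block = [sensor[y + dy][x + dx] for dy in range(factor) for dx in range(factor)]
            row.append(sum(block) / len(block))
        binned.append(row)
    return binned

# Hypothetical 4x4 sensor readout.
sensor = [
    [10, 12, 50, 54],
    [14, 12, 52, 52],
    [0, 4, 100, 96],
    [4, 8, 104, 100],
]

binned = bin_pixels(sensor)  # 2x2 result: [[12.0, 52.0], [4.0, 100.0]]
```

With 2×2 binning the pixel count drops by a factor of four, which is why a 48-megapixel sensor would produce 12-megapixel photos in this mode.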

That’s a lot of image processing done in milliseconds across millions of individual pixels, but the power that’s packed into modern-day flagship smartphones means that they can keep up. The same principles are being applied to making digital zoom as good as optical zoom too. Right now, that processing demand also helps premium handsets stand out from budget and mid-range phones, because they have the extra processor speed and memory required to get all of this optimisation done at a satisfactory speed.

RAW formats (including Apple ProRAW) are still available on many phones if you just want all the data captured by the camera and think you can do a better job than the algorithms. However, computational photography has now seeped into just about every phone on the market, to a greater or lesser extent: Most of us are happy to take the improvements it brings, even when the finished image doesn’t match what we actually saw.