We’ve written before about the hacker/security researcher who goes by GreenTheOnly on Twitter, as he has done a lot of digging into the data stored in modern cars, with a focus on Teslas. Because of that work, salvage yards occasionally send him black boxes and memory modules from cars, which is how he acquired this video and data from a wrecked Tesla. The video shows a pretty harrowing high-speed impact with another car, and while Autopilot was not active right at the time of impact, I think the problems of any Level 2 semi-autonomous system are involved here.

The crash took place at night, on highway CA-24 near Lafayette, CA, in clear weather. The Tesla (which was recording via its on-board cameras) was driving quite fast and rear-ended a Honda Civic hard enough to send it flying.

There was minimal other traffic around, and the Tesla could have easily passed the Honda on either side. Here, just watch the video:

Ugh, another one for the “who drives like that?” book I guess.

Looks like poor Honda went airborne but I don’t see any news for that date/location so hopefully nobody died or was seriously hurt.

Speed at impact unknown yet (no canbus data) #TeslaCrashFootage pic.twitter.com/dpT8QSiR5Q (green, @greentheonly, November 12, 2020)

Green was also able to extract video that included some of the Tesla’s object-sensing, lane-sensing, and other visualisations:

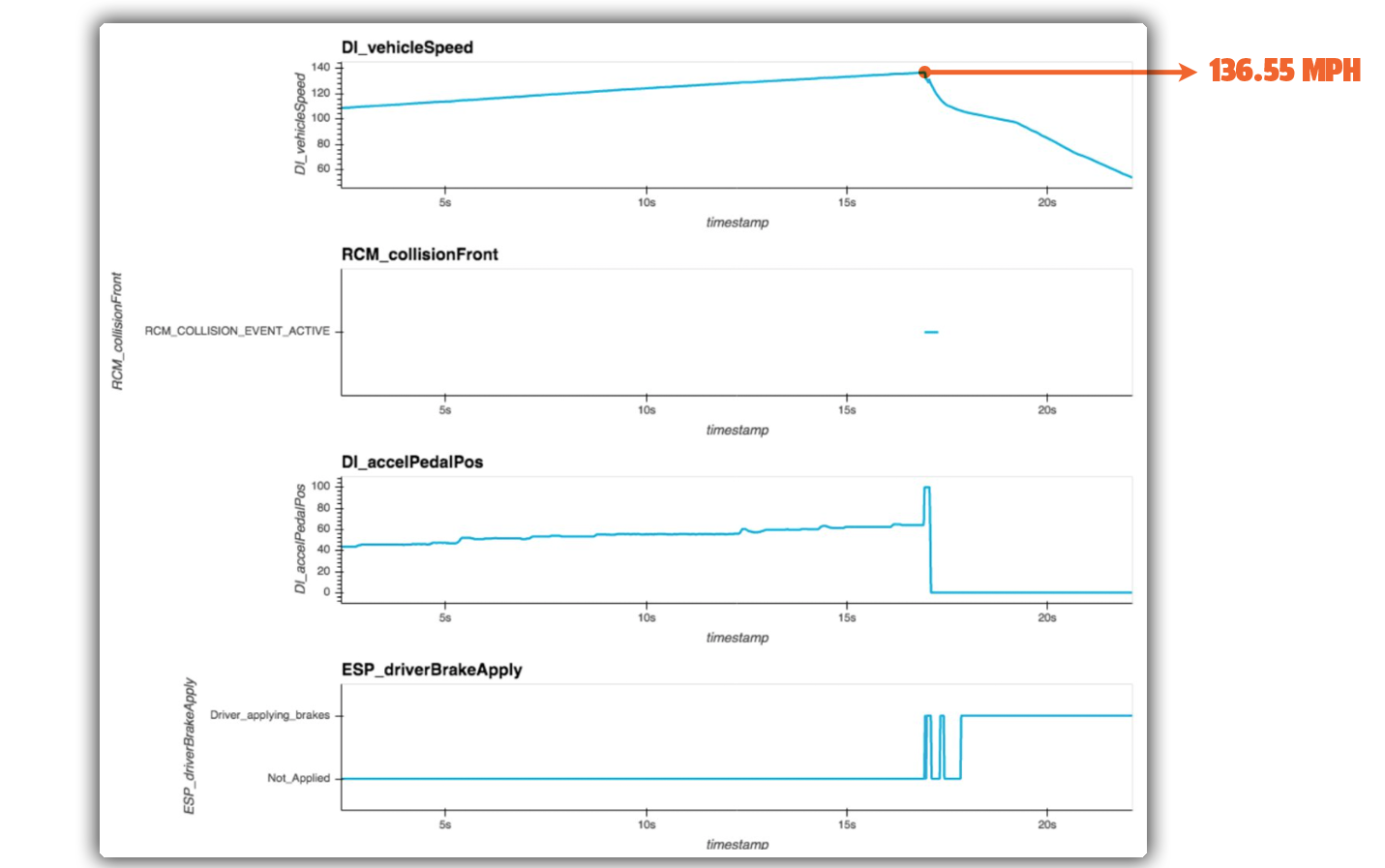

Speed differential at impact 63MPH

This was not on autopilot at impact, but AP disengaged like 40 seconds before due to FCW. There was another FCW 2+ seconds before this impact. pic.twitter.com/nOhh9bzMAI (green, @greentheonly, November 12, 2020)

The data shown in this footage suggests a speed differential between the Honda and the Tesla of about 101 km/h, which is, of course, a hell of a lot.
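For anyone who wants to check the unit conversion, here is a quick sketch. The 63 mph figure comes from Green's tweet; the function name `mph_to_kmh` is just something made up for this illustration.

```python
# Convert the speed differential from Green's tweet (63 mph) into km/h.
# mph_to_kmh is a hypothetical helper name used only for this sketch.

KM_PER_MILE = 1.609344  # exact, by definition of the international mile


def mph_to_kmh(mph: float) -> float:
    """Return the speed in km/h for a speed given in mph."""
    return mph * KM_PER_MILE


differential_mph = 63  # figure quoted in the tweet
differential_kmh = mph_to_kmh(differential_mph)

print(f"{differential_mph} mph is about {differential_kmh:.0f} km/h")
```

That lands on roughly 101 km/h, which matches the figure above.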

Autopilot disengaged about 40 seconds before impact when the Tesla issued a Forward Collision Warning (FCW) chime. A second FCW chime sounded about two seconds before impact.

It’s worth mentioning that FCW does not apply the brakes and is not Automatic Emergency Braking, which is a separate system. From the Tesla manual (Model X shown, but essentially the same in other versions):

Forward Collision Warning

The forward looking camera and the radar sensor monitor the area in front of Model X for the presence of an object such as a vehicle, bicycle or pedestrian. If a collision is considered likely unless you take immediate corrective action, Forward Collision Warning is designed to sound a chime and highlight the vehicle in front of you in red on the instrument panel:

Warnings cancel automatically when the risk of a collision has been reduced (for example, you have decelerated or stopped Model X, or a vehicle in front has moved out of your driving path).

If immediate action is not taken when Model X issues a Forward Collision Warning, a collision is considered imminent and Automatic Emergency Braking (if enabled) automatically applies the brakes (see Automatic Emergency Braking on page 97).

So, what seems to have happened here is that Autopilot was on and the car issued a Forward Collision Warning 40 seconds before impact, which kicked Autopilot off. But that warning was just a chime, and Automatic Emergency Braking does not seem to have been enabled.

The driver must have continued to accelerate because black box data recovered by GreenTheOnly records a speed at the time of impact of 219 km/h:

The data seems to show the throttle being applied even as the car was very clearly heading toward that hapless Honda. What’s going on here?

First, I need to be clear that at this point I do not know for sure what happened — I don’t know if anyone was injured or worse, I don’t know who was in either car, I don’t know what the Tesla driver was doing. All I’m going on is the data found and presented by GreenTheOnly. So please keep that in mind as I think through this.

What we do know is that the Tesla was going way too fast for a public road and that, based on the video, the wreck could have been easily prevented by a driver paying even the slightest bit of attention. This was not a complex situation. This was the first few seconds of Pole Position level of difficulty, at worst.

We also know that Autopilot had been engaged up to a point 40 seconds before the wreck and that multiple FCW chimes seemed to have no effect on the driver, at least in terms of getting the driver’s attention.
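To put that final two-second warning in perspective, here is a rough back-of-the-envelope sketch using only the numbers reported so far: 219 km/h at impact and a differential of about 101 km/h. It assumes both speeds stayed constant over the last two seconds and treats Green's "2+ seconds" as exactly two, which are simplifications on my part, not something the data confirms. The variable names are made up for the illustration.

```python
# Rough arithmetic on the last warning before impact.
# Assumptions (not from the recovered data): both cars held a constant
# speed for the final two seconds, and the warning came exactly 2 s out.


def kmh_to_ms(kmh: float) -> float:
    """Convert km/h to metres per second."""
    return kmh / 3.6


tesla_kmh = 219         # black box speed at impact, as reported
differential_kmh = 101  # speed differential, as reported
warning_s = 2.0         # second FCW came "2+ seconds" before impact

# Implied speed of the Honda under these assumptions.
honda_kmh = tesla_kmh - differential_kmh

# Gap between the cars when the second chime sounded.
gap_m = kmh_to_ms(differential_kmh) * warning_s

# Road the Tesla covered during those two seconds.
travel_m = kmh_to_ms(tesla_kmh) * warning_s

print(f"Implied Honda speed: about {honda_kmh} km/h")
print(f"Gap at second FCW: about {gap_m:.0f} m")
print(f"Distance the Tesla covered in {warning_s:.0f} s: about {travel_m:.0f} m")
```

Under those assumptions the Honda was doing something like 118 km/h, and the second chime sounded with the cars only about 56 metres apart. That is not much room, but the first warning came a full 40 seconds earlier.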

If we assume that this wasn’t a deliberate act of destructive insanity, then the hypothesis I would lean toward has to do with my biggest complaint about Autopilot and any Level 2 semi-autonomous system: they don’t work well with human beings.

Humans are simply not good at passing off 80-plus per cent of a task and then staying alert to monitor what’s going on, which is what Autopilot demands. Since Level 2 systems offer no failover capability and need a human to be ready to take over at any moment, if you’re not paying constant attention, the wreck we see here is precisely the kind of worst-case nightmare that can happen.

It’s not just me saying this; experts have known for decades how shitty people are at “vigilance tasks” like these. Imagine having a chauffeur who had been driving you for hours, and then sees something on the road he doesn’t feel like dealing with, so he jumps into the back seat and tells you it’s your job to handle it now.

You’d fire that chauffeur. And yet that’s exactly what Autopilot is doing here.

I don’t know exactly what the hell the Tesla driver was doing when this wreck happened, but I can sure as hell tell you what they were not doing: paying attention to the road.

I don’t know why they continued to accelerate, but everything else certainly fits the pattern of someone who had delegated the task of driving to the car and became distracted enough that when the car suddenly stopped driving, they were not able to take over.

All Level 2 systems will have this sort of problem, and that’s why I think Level 2 is a problem itself. Even semi-autonomous systems need to figure out better ways to fail over, and while that’s a non-trivial task, it’s a hell of a lot better than slamming into Civics at 219 km/h.

I’ll reach out to Tesla for comment, but, come on.