While a good chunk of Facebook’s Connect event was focused on tech you can use right now (or at least in the very near future) like the Oculus Quest 2, during yesterday’s livestream presentation Oculus chief scientist Michael Abrash also outlined a powerful vision of what Facebook is doing to create our future — and it’s in augmented reality, apparently.

While AR offers a huge range of capabilities and tools that can’t really be accomplished by traditional phones or computers, one of the biggest hurdles is creating a framework that can incorporate multiple layers of information and translate that into a digestible and understandable interface. So to help outline how Facebook is attempting to overcome these problems, Abrash broke the company’s research on next-gen AR interfaces into three main categories: input and output, machine perception, and interaction.

When it comes to input and output, Abrash referenced the need for AR to have an “Engelbart moment,” which is a reference to the legendary 1968 presentation in which Douglas Engelbart demoed a number of foundational technologies, including a prototype computer mouse. The use of a mouse and pointer to manipulate a graphical user interface (or GUI) became a guiding principle for modern computers, which was later expanded upon in the touch-based input used in today’s mobile devices.

However, because you can’t really use a mouse or traditional keyboard for AR, Facebook is trying to devise entirely new input methods. And while Facebook’s research is still very early, the company has two potential solutions in the works: electromyography (or EMG) and beamforming audio.

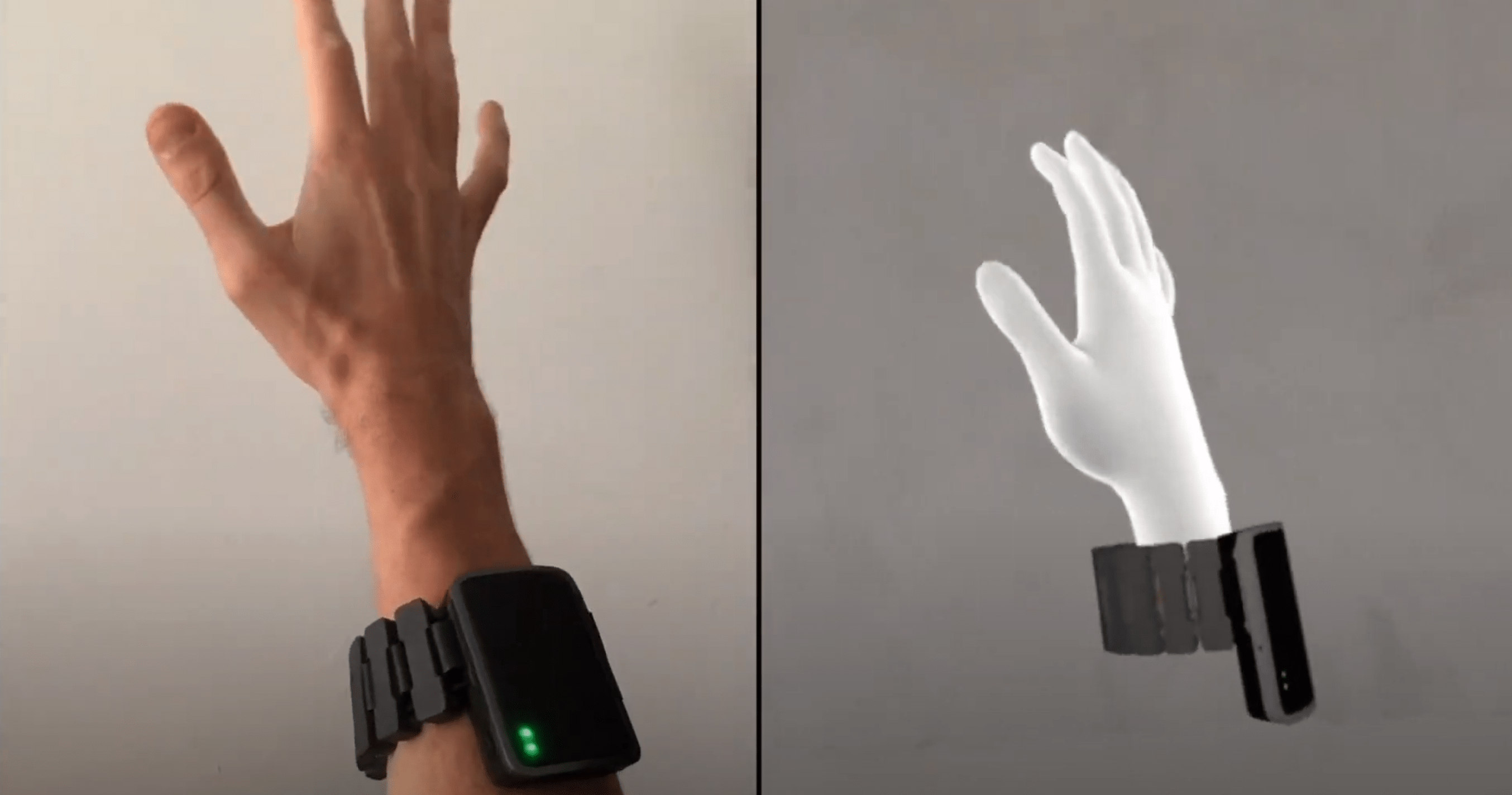

EMG works by placing sensors on your wrist, where a small device can intercept the electrical signals your brain sends to your hands, effectively creating a new kind of direct but non-invasive neural input. Abrash even says that because signals picked up by EMG are relatively strong and unambiguous, EMG sensors can detect movements of just a millimetre, or in some cases, inputs that stem from thought alone.

Now if this sounds like some sci-fi computer brain interface, you’re not far off. But the end result is that by using EMG, people would be able to manipulate AR objects in 3D space or write text in a way that you can’t really duplicate with a keyboard and mouse. Abrash says EMG may be able to give people capabilities they don’t have in real life, like a sixth finger, or give a person born with limited hand function control over five fingers, as shown in Facebook’s demo.

To help make communication clearer and easier to understand, Facebook is looking into beamforming audio to eliminate background noise, highlight speakers, and connect people who are talking both online and in person, going beyond what standard active noise cancellation can do.

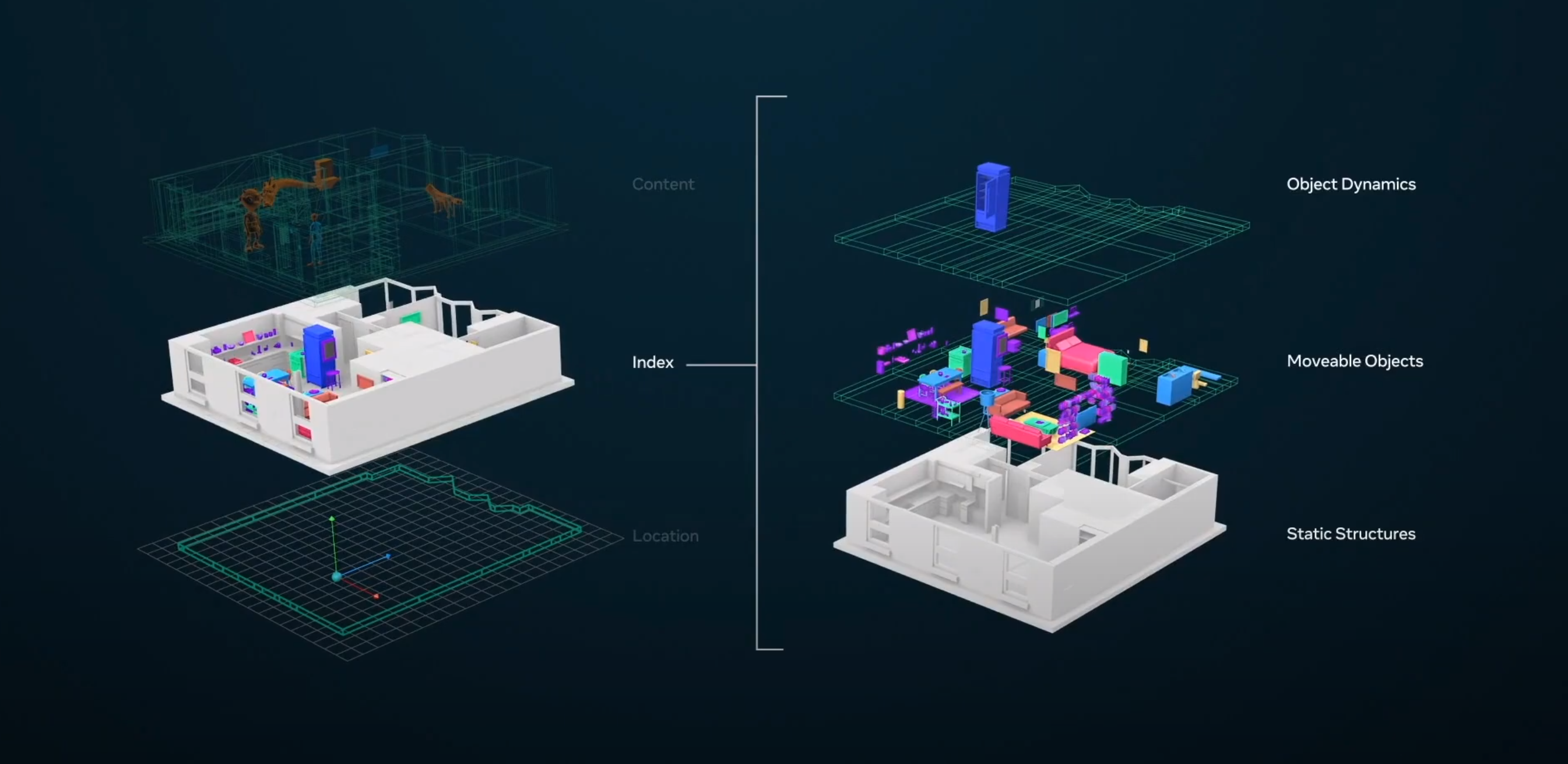

Moving on to machine perception, Abrash says we need to go beyond basic computer vision to support a contextual mapping that can bridge the gap between objects in VR or AR and how they appear in the real world. This is important: if you’re trying to talk to someone in AR and their avatar keeps clipping in and out of a nearby wall or appears in the middle of a table instead of sitting on a nearby chair, you’ll end up with a very distracting experience. The same goes for AR objects like a computer-generated tree or virtual art, which ideally would sit on a windowsill or on a physical wall instead of floating in mid-air, blocking your view whenever you walk by.

So to overcome these challenges, Facebook is working on a common coordinate system that can track physical locations, index your surroundings, and even recognise the types of objects around you, what they do, and how they relate to you. Sadly, there’s no easy way to gather all this info in one place, so in order to create these virtual, multi-layer models (or Live Maps, as Abrash calls them), Facebook is launching Project Aria.

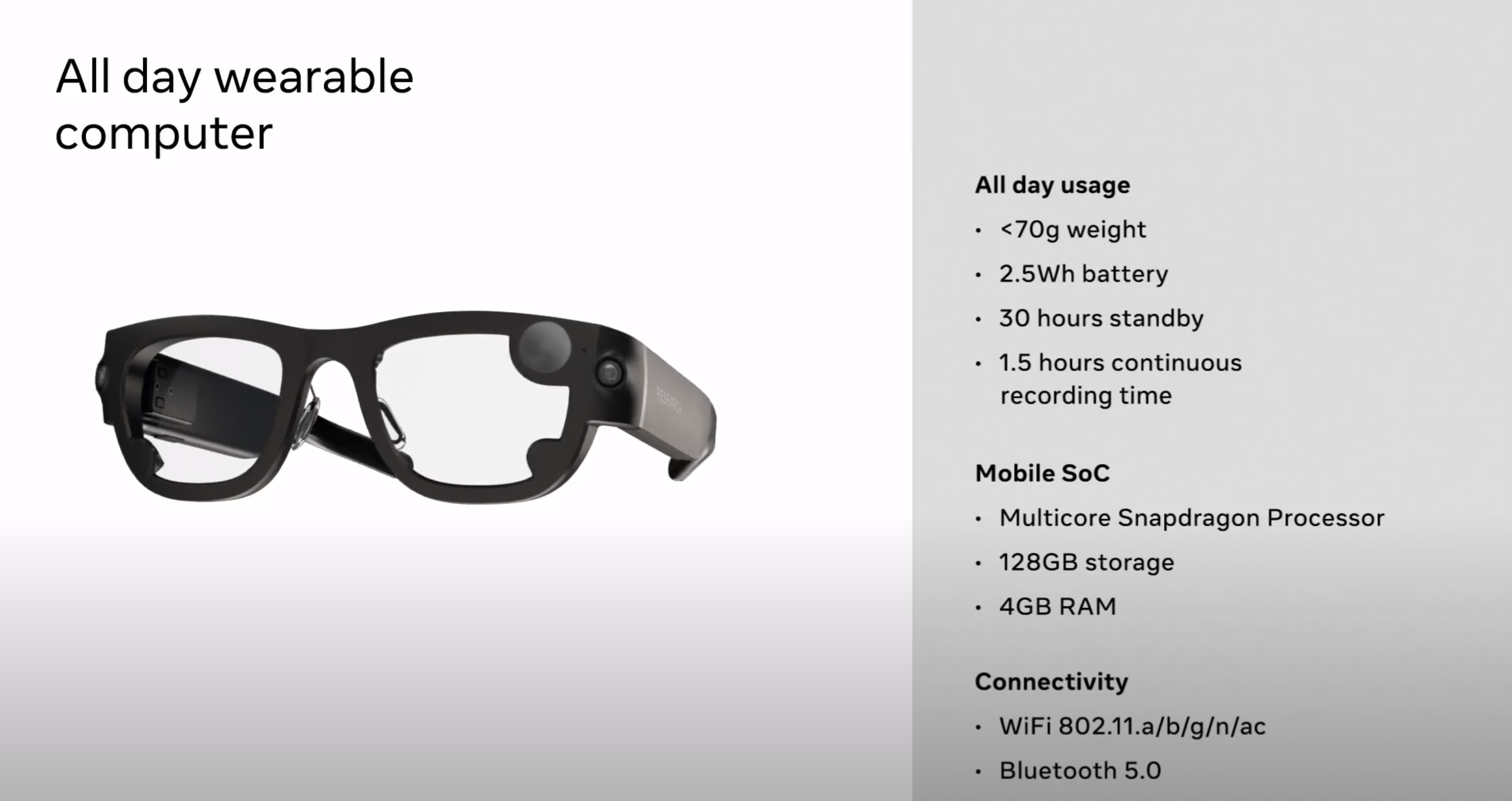

Designed strictly as a research tool used to gather rich mapping info, Project Aria is essentially a smaller version of the car-mounted or backpack-mounted sensor suites used to fill out Google Maps or Apple Maps, except that with Aria, all the sensors are crammed into a pair of glasses that’s paired to a phone. This makes Project Aria highly portable and relatively unobtrusive, allowing people to build Live Maps of their surrounding environments.

Not only will Project Aria make the challenge of gathering the data used to create Live Maps easier, it will also allow researchers to determine what types of data are most important, while a special privacy filter designed by Facebook prevents Aria from uploading potentially sensitive data. Facebook has no plans to release or sell Aria to the public; the company will begin testing Aria in the real world starting this month.

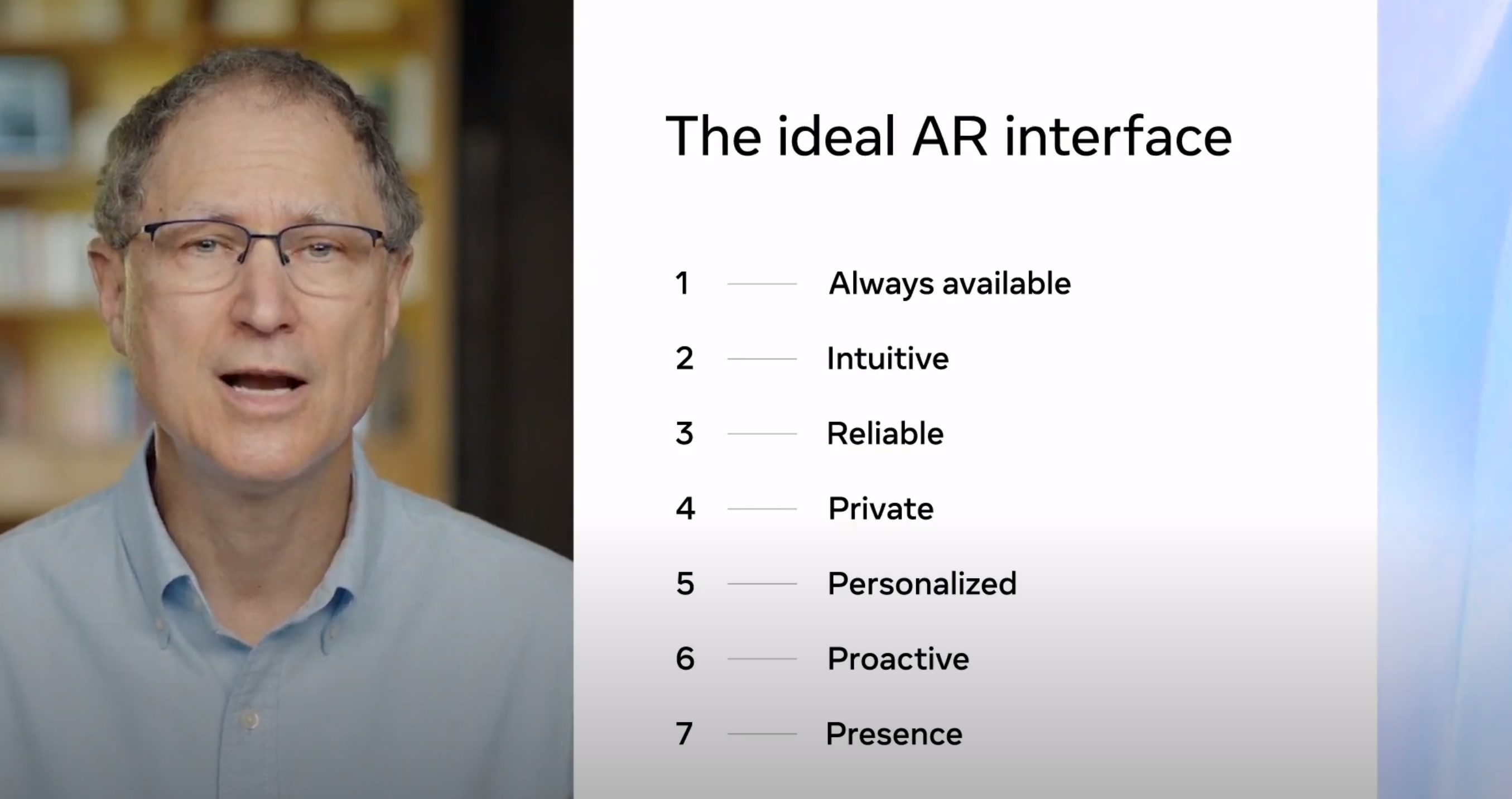

Finally, when it comes to comprehensive AR interaction, Facebook is working to combine “high-bandwidth focused interactions” like video calls or VR chats with a new type of always-available visual interface, which for now has been dubbed the ultra-low-friction contextualized AI interface, or ULFCAII. (Just rolls off the tongue, right?) While this is clearly the most far-out part of Facebook’s AR research, Abrash says that ideally, ULFCAII will be as simple and intuitive as possible, while also requiring less input or, in some cases, no input at all. AI will be able to know what you’re trying to do without you having to ask.

In practical use, this would mean an AR display that automatically pops up a window showing today’s weather before you leave the house, or that knows when and when not to mute incoming calls based on what you’re doing at the time. You would also be able to get turn-by-turn mapping indoors, with all the necessary signs and info, or even responsive step-by-step instructions for all sorts of DIY projects.

Now while all this sounds incredibly fantastic and wildly futuristic, Abrash emphasised that people are already laying the groundwork for these advanced computer interfaces. And even with just a handful of glimpses at these early demos, next-gen AR devices might not be as far off as they seem.

A full replay of Abrash’s presentation is available on Facebook’s official stream of the Connect event, starting at 1:19:15.