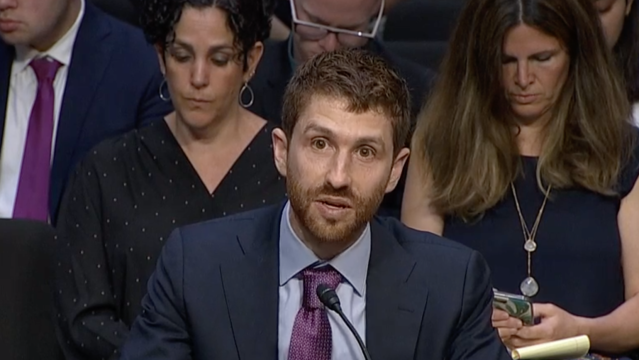

At a preliminary U.S. Senate hearing today on the subject of potentially putting legislative limits on the persuasiveness of technology – a diplomatic way of saying the addiction model the internet uses to keep people engaged and clicking – Tristan Harris, the executive director of the Center for Humane Technology, told lawmakers that while rules are important, what needs to come first is public awareness. Not an easy task.

Algorithms and machine learning are terrifying, confusing, and somehow also boring to think about. However, “one thing I have learned is that if you tell people ‘this is bad for you’, they won’t listen,” Harris stated. “If you tell people ‘this is how you’re being manipulated,’ no one wants to feel manipulated.”

Given that he went to Stanford specifically to study persuasiveness (granted, in a technological setting), let’s give Mr. Harris’s hypothesis a try, pulling from the expert testimony of today’s witnesses, among whom he did the lion’s share of the talking.

Let’s begin with something simple we do hundreds of times a day, to the point where it’s completely automatic and seemingly innocuous. According to Harris:

It starts with techniques like ‘pull to refresh’, so you pull to refresh your newsfeed. That operates like a slot machine. It has the same kind of addictive qualities that keep people in Las Vegas hooked.

Other examples are removing stopping cues. So if I take the bottom out of this glass and I keep refilling the water or the wine, you won’t know when to stop drinking. That’s what happens with infinitely scrolling feeds.

Of course, the addictive qualities of platforms are also a result of their so-called network effects, where they grow in power exponentially based on how many people are already on them:

[With the introduction of likes and followers] it was much cheaper, instead of getting your attention, to get you addicted to getting attention from other people.

[…]

There’s a follow button on each profile. That doesn’t seem so [much like a dark pattern]; that’s what’s so insidious about it – you’re giving people a way to follow each other’s behaviour – but what it actually is doing is an attempt to cause you to come back every day because you want to see ‘do I have more followers than I did yesterday.’

The longer you spend in these ecosystems, the more machine learning systems can optimise themselves against user preferences:

[I]n the moment you hit play, it wakes up an avatar, a voodoo doll-like version of you inside of a Google server. And that avatar, based on all the clicks and likes and everything you ever made – those are like your hair clippings and toenail clippings and nail filings that make the avatar look and act more and more like you – so that inside of a Google server they can simulate more and more possibilities about ‘if I prick you with this video, if I prick you with this video, how long would you stay?’ And the business model is simply what maximized watch time.

As we’ve learned through research, reporting, and individual experience, there are quite a few negative externalities associated with solving for engagement and engagement alone:

It’s calculating what is the thing it can show you that gets the most engagement, and it turns out that outrage, moral outrage, gets the most engagement. It was found in a study that for every word of moral outrage you add to a tweet it increases your retweet rate by seventeen per cent.

In other words, the polarisation of our society is actually part of the business model. […] As recently as just a month ago on YouTube, if you did a map of the top 15 most frequently mentioned verbs or keywords on the recommended videos, they were: hates, debunks, obliterates, destroys […] that kind of thing is the background radiation that we’re dosing two billion people with.

[…]

If you imagine a spectrum on YouTube. On my left side there’s the calm, Walter Cronkite section of YouTube. On my right side there’s crazy town: UFOs, conspiracy theories, Bigfoot, whatever. […] if I’m YouTube and I want you to watch more, which direction am I going to send you?

I’m never going to send you to the calm section; I’m always going to send you towards crazy town. So now you imagine two billion people, like an ant colony of humanity, and it’s tilting the playing field towards the crazy stuff.

Worse yet, it works staggeringly well. YouTube, in particular, gets around 70 per cent of its traffic from recommendations, which are powered by these sorts of algorithms. And while altering what information people get is catastrophically bad in and of itself, the use of algorithms isn’t limited to tweets and YouTube videos, as AI Now Institute policy research director Rashida Richardson stated:

The problem with a lot of these systems is they’re based on data sets which reflect all of our current conditions, which also means any imbalances in our conditions […] Amazon’s hiring algorithm was found to have gender-disparate outcomes, and that’s because it was learning from prior hiring practices.

[…]

They determine where our children go to school, whether someone will receive Medicaid benefits, who is sent to jail before trial, which news articles we see, and which job-seekers are offered an interview […] they are primarily developed and deployed by a few powerful companies and therefore shaped by these companies’ values, incentives, and interests. […] While most technology companies promise that their products will lead to broad societal benefits, there’s little evidence to support these claims, and in fact mounting evidence points to the contrary.

Abandoning these systems is made as difficult as possible, too. Harris gives the example of Facebook:

If you say ‘I want to delete my Facebook account’ it puts up a screen that says ‘are you sure you want to delete your Facebook account, the following friends will miss you’ and it puts up faces of certain friends.

Now, am I asking to know which friends will miss me? No. Does Facebook ask those friends are they going to miss me if I leave? No. They’re calculating which are the five faces that are most likely to get you to hit ‘cancel.’

And, again according to Harris, much of what these systems learn about us does not even require our arguably uninformed consent to having our data collected:

Without any of your data I can predict increasing features about you using AI. There was a paper recently that with 80 per cent accuracy I can predict your same Big Five personality traits that Cambridge Analytica got from you without any of your data.

All I have to do is look at your mouse movements and click patterns […] based on tweet text alone we can know your political affiliation with about 80 per cent accuracy. [A] computer can calculate that you’re homosexual before you might know you’re homosexual. They can predict with 95 per cent accuracy that you’re going to quit your job, according to an IBM study. They can predict that you’re pregnant.

Stephen Wolfram, whose name you might recognise from the “knowledge engine” Wolfram Alpha, also gave testimony, in which he explained to American lawmakers that the entire premise of algorithmic transparency fundamentally misunderstands how this technology works:

If you go look inside those programs, there’s usually embarrassingly little that we humans can understand in there. And here’s the real problem: it’s sort of a fact of basic science that if you insist on explainability then you can’t get the full power of a computational system or an AI. […] I don’t see a purely technical solution.

Summarising how bad things have gotten in stark terms, Harris likened big tech to a sort of cult leader:

We have a name for this asymmetric power relationship, and that’s a fiduciary relationship or a duty of care relationship, the same standard we apply to doctors, to priests, to lawyers.

Imagine a world in which priests only make their money by selling access to the confession booth to someone else, except in this case Facebook listens to two billion people’s confessions, has a supercomputer next to them, and is calculating and predicting confessions you’re going to make before you know you’re going to make them.

There was one other quote that was particularly striking from today’s hearing, but it didn’t come from any doomsaying tech expert. It came from Montana Senator Jon Tester, addressing the witnesses:

I’ll probably be dead and gone and probably be thankful for it when all this shit comes to fruition.

While Harris, Richardson, and Wolfram may be able to rattle off dozens of examples of massive abuse of user trust or cite studies showing the negative impacts these technologies have had on the lives of regular, unsuspecting people, it speaks volumes that a sitting Senator would prefer death to the future we’re currently building.