Technology can learn a lot about us, whether we like it or not. It can figure out what we like, where we’ve been, how we feel. It can even make us say or do things we’ve never said or done. And according to new research, it can start to figure out what you look like based simply on the sound of your voice.

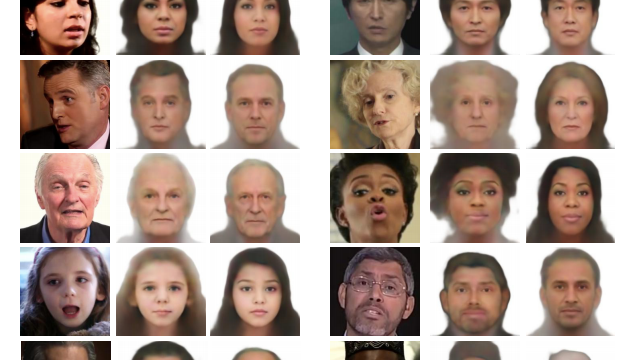

MIT researchers published a paper last month called Speech2Face: Learning the Face Behind a Voice which explores how an algorithm can generate a face based on a short audio recording of that person. It’s not an exact depiction of the speaker, but based on images in the paper, the system was able to create an image of a front-facing face with a neutral expression with accurate gender, race, and age.

The researchers trained the deep neural network on millions of educational YouTube clips with over 100,000 different speakers, according to the paper. While the researchers note that their method doesn’t generate exact images of a person based on these short audio clips, the examples shown in the study do indicate that the resulting portraits eerily resemble what the person actually looks.

It’s not necessarily similar enough that you’d be able to identify someone based on the image, but it does signal the new reality that even in a rudimentary form, an algorithm can guess—and generate—what someone looks like based exclusively on their voice.

The researchers do address ethical considerations in the paper, namely around the fact that their system doesn’t reveal the “true identity of a person” but rather creates “average-looking faces.” This is to ensure that it isn’t an invasion of privacy.

However, the researchers did raise some thorny ethical questions with the type of data they used for their model. One of the individuals included in the dataset told Slate that he didn’t remember signing a waiver for the YouTube video he was featured in that ended up being fed through the algorithm. But the videos are publicly available information, and so legally, this type of consent wasn’t required.

“Since my image and voice were singled out as an example in the Speech2Face paper, rather than just used as a data point in a statistical study, it would have been polite to reach out to inform me or ask for my permission,” Nick Sullivan, head of cryptography at Cloudflare who was used in the study, told Slate.

The researchers also indicate in their study that the dataset that they used isn’t an accurate representation of the world population since it was just pulling from a specific subset of videos on YouTube. It’s therefore biased—a common issue among machine learning datasets.

It’s certainly nice that the researchers pointed out the ethical considerations with their work. However, as advancements in technology go, they won’t always be iterated on and deployed by teams or individuals with good intentions.

There are of course a number of ways in which this type of system can be exploited, and if someone figures out a way to create even more realistic depictions of someone based simply on an audio recording, it points to a future in which anonymity becomes increasingly difficult to achieve. Whether you like it or not.