Neural networks can do some impressive stuff these days, from generating photorealistic faces to writing short films. Image recognition is another hot area, with projects such as Google Inception leading the way in accuracy. Even so, the technology still has a long way to go, as researchers from Auburn University and Adobe have revealed in a new paper.

The paper, entitled “Strike (with) a Pose: Neural Networks Are Easily Fooled by Strange Poses of Familiar Objects”, shows one of the biggest weaknesses in current image recognition neural networks: a lack of flexibility when familiar objects appear in unfamiliar poses.

Inception is considered the best at what it does, with the paper pointing out it has an accuracy of 77.45 per cent across 1.2 million images. Despite this, the researchers were able to reliably fool it by placing 3D models of objects against static backgrounds.

The resulting images are examples of “out-of-distribution inputs”, or OoDs: images unlike those the network saw during training.

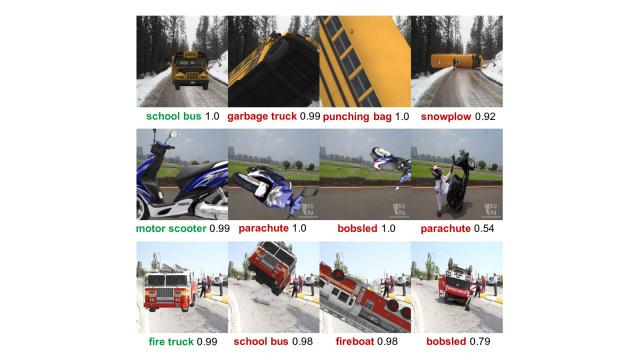

It was then a simple matter of rotating the objects into so-called “adversarial poses”, with Inception failing to recognise the object most of the time:

> For objects that are readily recognized by DNNs in their canonical poses, DNNs incorrectly classify 97% of their pose space. In addition, DNNs are highly sensitive to slight pose perturbations. Importantly, adversarial poses transfer across models and datasets.
>
> We find that 99.9% and 99.4% of the poses misclassified by Inception-v3 also transfer to the AlexNet and ResNet-50 image classifiers trained on the same ImageNet dataset, respectively, and 75.5% transfer to the YOLOv3 object detector trained on MS COCO.
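
To make those two kinds of figures concrete, here is a minimal Python sketch of how a pose-space error rate and a transfer rate could be computed. It is not the researchers' framework: the "classifiers" are hypothetical toy functions that only succeed when an object is near its canonical pose, and every name (`sample_pose`, `toy_classifier`, `transfer_rate`) is an assumption made for illustration.

```python
import math
import random


def sample_pose(rng):
    """Draw a random pose as (yaw, pitch, roll) angles in radians."""
    return tuple(rng.uniform(-math.pi, math.pi) for _ in range(3))


def toy_classifier(tolerance):
    """Hypothetical stand-in for a real image classifier.

    It 'recognises' the object only when every rotation angle is
    within `tolerance` radians of the canonical pose (0, 0, 0).
    """
    def is_correct(pose):
        return all(abs(angle) <= tolerance for angle in pose)
    return is_correct


def misclassified_poses(is_correct, poses):
    """Return the poses the given model gets wrong."""
    return [pose for pose in poses if not is_correct(pose)]


def transfer_rate(source_failures, target_is_correct):
    """Fraction of one model's failures that another model also gets wrong."""
    if not source_failures:
        return 0.0
    also_wrong = sum(1 for pose in source_failures if not target_is_correct(pose))
    return also_wrong / len(source_failures)


rng = random.Random(0)  # fixed seed so the run is repeatable
poses = [sample_pose(rng) for _ in range(10_000)]

model_a = toy_classifier(tolerance=1.0)  # assumed tolerances, chosen arbitrarily
model_b = toy_classifier(tolerance=1.2)

failures_a = misclassified_poses(model_a, poses)
error_rate_a = len(failures_a) / len(poses)
rate_a_to_b = transfer_rate(failures_a, model_b)

print(f"Model A misclassifies {error_rate_a:.1%} of sampled poses")
print(f"{rate_a_to_b:.1%} of those failures also fool model B")
```

In a real experiment, `is_correct` would render the 3D object at the given pose, run the image through the network and compare the predicted label with the true one. The numbers this toy prints say nothing about any actual model.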

So rather than being a flaw specific to Inception, it appears image recognition neural networks as a whole may require a rethink, with the paper describing their “understanding” of common objects, such as fire trucks and school buses, as “quite naive”.

Along with the paper itself, the researchers came up with a framework that should help neural network developers better test their work.
