Image recognition technology may be sophisticated, but it is also easily duped. Researchers have fooled algorithms into confusing two skiers for a dog, a baseball for espresso, and a turtle for a rifle. But a new method of deceiving the machines is simple and far-reaching, involving just a humble sticker.

Google researchers developed a psychedelic sticker that, when placed in an unrelated image, tricks deep learning systems into classifying the image as a toaster. According to a recently submitted research paper about the attack, this adversarial patch is “scene-independent,” meaning someone could deploy it “without prior knowledge of the lighting conditions, camera angle, type of classifier being attacked, or even the other items within the scene.” It’s also easily accessible, since it can be shared online and printed out.
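To make "placed in an unrelated image" concrete, here is a minimal sketch of pasting a sticker into a scene at a random position. It is an illustration only, not the researchers' code: the function name `apply_patch`, the tiny grayscale grids, and the pixel values are all hypothetical, and a real attack would also vary scale, rotation, and lighting.

```python
import random


def apply_patch(image, patch, rng):
    """Paste `patch` into a copy of `image` at a random location.

    Both arguments are 2D lists of pixel values (rows of columns).
    Returns the patched copy and the (row, col) of the patch's top-left corner.
    """
    img_h, img_w = len(image), len(image[0])
    patch_h, patch_w = len(patch), len(patch[0])
    if patch_h > img_h or patch_w > img_w:
        raise ValueError("patch must fit inside the image")

    # Pick a top-left corner so the whole patch stays inside the frame.
    top = rng.randint(0, img_h - patch_h)
    left = rng.randint(0, img_w - patch_w)

    # Copy the scene so the original image is left untouched.
    patched = [row[:] for row in image]
    for r in range(patch_h):
        for c in range(patch_w):
            patched[top + r][left + c] = patch[r][c]
    return patched, (top, left)


# Hypothetical data: a blank 6x8 "scene" and a 2x2 "sticker".
rng = random.Random(0)
scene = [[0] * 8 for _ in range(6)]
sticker = [[9, 9], [9, 9]]
patched_scene, (top, left) = apply_patch(scene, sticker, rng)
```

Because the position is drawn at random, a patch that still fools a classifier after many such placements would not depend on any one scene, which is the sense of "scene-independent" quoted above.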

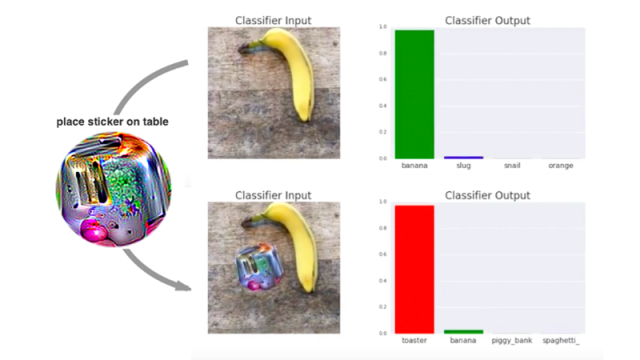

A YouTube video uploaded by Tom Brown – a team member on Google Brain, the company’s deep learning research team – shows how the adversarial patch works using a banana. An image of a banana on a table is correctly classified by the VGG16 neural network as a banana, but when the psychedelic toaster sticker is placed next to it, the network classifies the image as a toaster.

That’s because, as the researchers note in the paper, an image classifier is trained to assign a single label to each image, so it learns to detect the item it considers most “salient.” “The adversarial patch exploits this feature by producing inputs much more salient than objects in the real world,” they wrote. “Thus, when attacking object detection or image segmentation models, we expect a targeted toaster patch to be classified as a toaster, and not to affect other portions of the image.”
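The single-label behavior can be shown with a toy example. The sketch below is not VGG16 and uses made-up scores: it assumes a classifier that produces one score per label and reports only the highest, and it assumes, as a simplification, that the patch simply adds a large amount of "toaster" evidence.

```python
import math


def softmax(logits):
    """Turn raw label scores into probabilities that sum to 1."""
    highest = max(logits.values())
    exps = {label: math.exp(score - highest) for label, score in logits.items()}
    total = sum(exps.values())
    return {label: value / total for label, value in exps.items()}


def top1(logits):
    """Return the single most probable label, as a one-label classifier would."""
    probs = softmax(logits)
    return max(probs, key=probs.get)


# Hypothetical scores for a photo of a banana on a table.
banana_only = {"banana": 6.0, "toaster": 0.5, "table": 2.0}

# Hypothetical extra evidence contributed by the sticker (an assumption,
# chosen only so that it outweighs the banana).
patch_evidence = {"toaster": 9.0}

with_patch = dict(banana_only)
for label, boost in patch_evidence.items():
    with_patch[label] += boost

label_before = top1(banana_only)
label_after = top1(with_patch)
```

The banana's score does not change at all in this toy setup. The label flips only because something else in the frame now scores higher, which is the "more salient" effect the researchers describe.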

While researchers have found a number of ways to sucker machine learning algorithms into seeing something that is not in fact there, this method is particularly consequential given how easy it is to carry out and how inconspicuous it is. “Even if humans are able to notice these patches, they may not understand the intent of the patch and instead view it as a form of art,” the researchers wrote.

Currently, tricking a machine into thinking a banana is a toaster isn’t exactly a menace to society. But as our world leans more heavily on image recognition technology, easily executed attacks like this one could wreak havoc, most notably as self-driving cars roll out. These machines rely on image recognition software to understand and interact with their surroundings. Things could get dangerous if thousands of pounds of metal rolling down the highway can only see toasters.

[BBC]