I generally don’t care for the poetics of life. When I’m walking down the street and something poetic happens – sunlight piercing rainclouds, golden leaves dancing in an autumn wind – I just go “ehh.” But human emotion is quantifiable, which means it can be taught to computers. A collaboration between MIT’s Lab for Social Machines and McKinsey’s Consumer Tech and Media team wants to use film to quantify emotions and “teach” them to computers, with the aim of building AI that can tell emotional stories.

Screengrab: YouTube/Disney

How do you teach emotions to AI? Make it watch movies. MIT’s machine learning model reviewed “thousands” of movies and eventually learned to track and label their emotional arcs – not story beats exactly, but the shifts from happy to sad a viewer might feel while watching. Crucially, the researchers wanted the AI to understand how a film triggers those emotions, through music, dialogue, camera angles and so on.

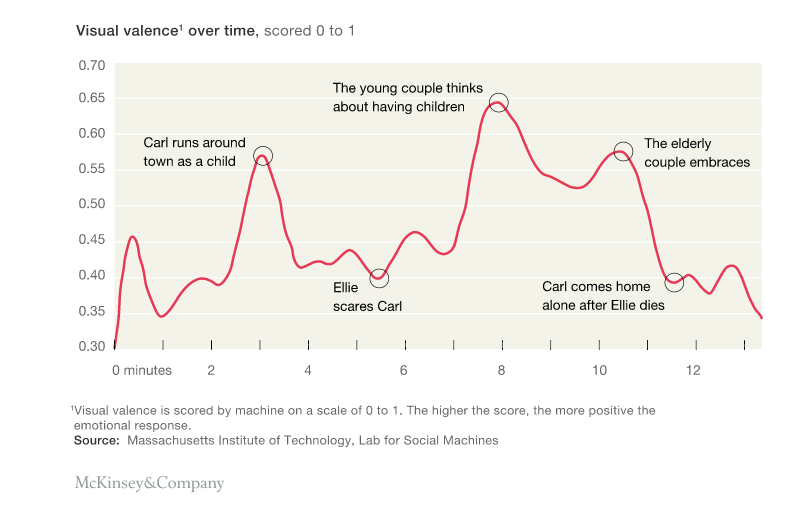

As discussed in the team’s blog post, the model watched the infamously sad opening sequence of Up and picked out the story beats with the greatest emotional impact, both positive and negative.

To measure the AI’s accuracy, the team had volunteers watch film clips, record their own emotional responses (happy, sad, confused) and label what specifically prompted them – perhaps a close-up, an especially poignant musical note and so on. The volunteers then weighted how much each element contributed to that emotion. Turning these survey responses into numerical values let the researchers refine their neural network so that its output tracked how people actually felt.
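The article doesn’t say exactly how the volunteers’ weights were turned into numbers, but one simple way to picture it is below: a minimal sketch, assuming each volunteer rates how much each film element contributed to their reaction, those ratings are normalized so they sum to one, and the results are averaged across volunteers for a given clip and emotion. The record layout, element names (`music`, `close_up`, `dialogue`) and the `element_contributions` helper are all hypothetical, and the scores are made up for illustration; this is not the MIT/McKinsey method.

```python
from collections import defaultdict

# Hypothetical survey records: one volunteer's reaction to one clip.
# "emotion" is the self-reported feeling; "weights" are that volunteer's
# own ratings of how much each film element contributed to it.
responses = [
    {"clip": "up_opening", "emotion": "sad",
     "weights": {"music": 3, "close_up": 2, "dialogue": 0}},
    {"clip": "up_opening", "emotion": "sad",
     "weights": {"music": 1, "close_up": 1, "dialogue": 2}},
    {"clip": "up_opening", "emotion": "happy",
     "weights": {"music": 2, "close_up": 0, "dialogue": 0}},
]


def element_contributions(responses, clip, emotion):
    """Average each element's normalized share of one emotion for one clip."""
    totals = defaultdict(float)
    count = 0
    for r in responses:
        if r["clip"] != clip or r["emotion"] != emotion:
            continue
        total_weight = sum(r["weights"].values())
        if total_weight == 0:
            continue  # volunteer credited no element, so skip the response
        # Normalize so each volunteer's ratings sum to 1 before averaging.
        for element, w in r["weights"].items():
            totals[element] += w / total_weight
        count += 1
    return {e: t / count for e, t in totals.items()} if count else {}


if __name__ == "__main__":
    print(element_contributions(responses, "up_opening", "sad"))
```

Normalizing per volunteer keeps someone who rates everything highly from outweighing someone who uses smaller numbers; averaged targets like these are the kind of numerical signal a model’s predictions could then be compared against.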

Though a long-term goal is for AI to create emotionally cogent and powerful stories on its own, for now, at least, the researchers say the best way to use this tech is “to enhance [the work of storytellers] by providing insights that increase a story’s emotional pull – for instance, identifying a musical score or visual image that helps engender feelings of hope”.

Sounds like a product pitch – maybe as an add-on for junior filmmakers using Adobe Premiere or Final Cut Pro? While the blog post opens with ruminations on the power of AI storytellers, it closes with thoughts on how emotion detection might predict social media engagement. In fact, emotion detection is becoming increasingly common; Disney has used facial recognition to detect audience emotions during film screenings. That isn’t functionally different from screening a film for focus groups to market it more efficiently. The film industry is a business, and it’s far more interested in AI as a profit-maker than as a poetry generator.

[McKinsey via Fast Company]