Image: YouTube

Maybe it’s happened to you. You’re cruising around YouTube and then boom: a video of Spider-Man hanging out with girls in bikinis, trying to make Elsa from Frozen jealous, and then the Joker appears, ready to fight. This would seem like a weird video to any sane adult. But the weirdest thing is that it’s actually made for kids.

YouTube is aware of the issue. On Thursday night, the company quietly announced that it wants to crack down on these inappropriate videos. Let me offer up YouTube’s defence in its own words right away.

“Earlier this year, we updated our policies to make content featuring inappropriate use of family entertainment characters ineligible for monetisation,” YouTube’s director of policy Juniper Downs told Gizmodo in the same canned statement given to everyone. “We’re in the process of implementing a new policy that age restricts this content in the YouTube main app when flagged.”

This new policy should prevent the creepy videos from showing up in the YouTube Kids app, which launched in 2015. But the change would not necessarily prevent kids from seeing them on YouTube’s website or the regular YouTube app, although YouTube hopes that cutting creators off from ad revenue will stop them from making their bad videos. Still, if you’re a parent with a child who knows how to use a computer or a smartphone, there’s a very good chance they could Google “spiderman elsa” and get that weird bikini video in the search results. Actually, they will probably get a whole bunch of them. One Spider-Man-Elsa masterpiece has over 25 million views and a 40-second pre-roll ad bringing in revenue for its creator as well as YouTube.

Before we dive deeper into this worrisome issue, let me float a couple of thoughts. You should not let your young kids watch YouTube unsupervised. You also shouldn’t use this unfiltered video site as a babysitter. And you certainly shouldn’t trust that the YouTube Kids app is carefully curated by trained humans and free of inappropriate videos, because it’s not.

I’m not a parent, so I don’t have personal anecdotes to back up these thoughts. I have been covering YouTube and the internet for a decade, though. Sadly, more than ever before, it’s machines that are deciding what adults and children see online.

YouTube uses algorithms and machine learning to control how videos surface in search results. That’s actually part of the reason that these uncanny videos exist. They’re designed to show up in search results and the suggested videos widget in order to reach more viewers, many of whom are unsuspecting children. The YouTube Kids app includes an extra layer of filters meant to ensure its videos are family friendly, but even YouTube now admits that the system doesn’t work 100 per cent of the time. It’s close! The company says that only 0.005 per cent of the videos on YouTube Kids were removed for being inappropriate. That still sucks for the handful of kids who saw the bad videos.

This isn’t a new problem for YouTube. News that YouTube will finally address the disturbing kids’ video problem follows a New York Times report on the issue, as well as on YouTube’s failure to deal with it. The young mother at the centre of the story described a terrifying experience when her son came to her crying after watching a YouTube video with the title “PAW Patrol Babies Pretend to Die Suicide by Annabelle Hypnotized.” PAW Patrol is a Nickelodeon show, though the video itself was an unlicensed knock-off featuring death and dying by fire. The characters were familiar to kids. The dark themes were disturbing.

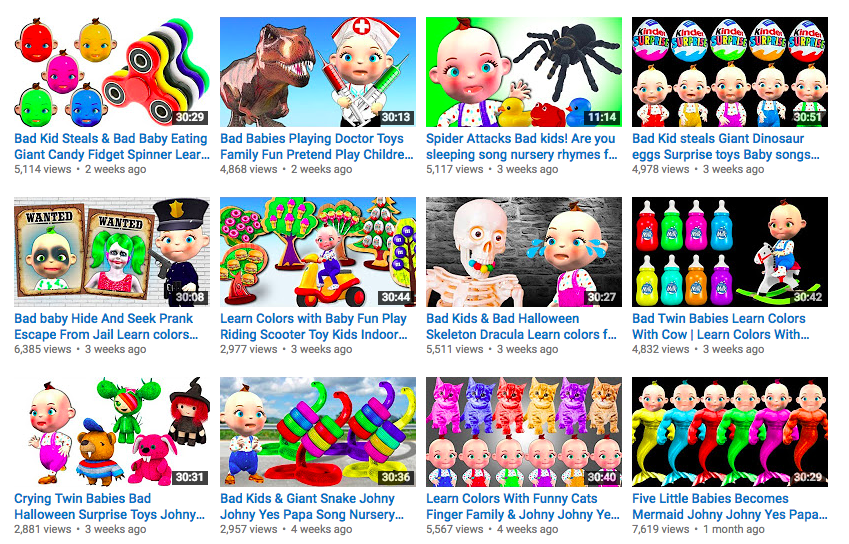

That seems to be some sort of sick rubric, too. It might even be accidental. As artist and writer James Bridle pointed out in a Medium post earlier this week, countless YouTube channels have found success by using popular TV and movie characters (Spider-Man, Elsa, Peppa Pig, Hulk, and so on) in videos that recycle popular kid-video tropes. These include memes like “bad baby,” educational themes like learning colours, and viral trends like unwrapping candy. (Who knew?) Bridle argues that many of these videos seem manufactured using both stock animations and actors, and then they’re packaged in such a way that they gain traction in search results. The tricks include old-school SEO stuff like keywords in the title, as well as simply making videos longer to suggest credibility and probably to encourage parents to pull the YouTube-as-babysitter routine.

Image: YouTube

So let me repeat myself: Don’t use YouTube as a babysitter. Even if you pick a video from the official Peppa Pig channel, the autoplay and suggested videos might be some twisted garbage.

Laura June reported on this exact problem in an Outline post earlier this year, after she noticed her toddler watching a violent video that starts with Peppa Pig going to the dentist to get tortured. The video’s title is almost all keywords and hashtags and appears to be formulated to trick YouTube’s algorithm, and it’s still on YouTube, nearly eight months later. Regardless of how troubling a torture scene might be to a three-year-old, the problem is that YouTube is enabling its creators to deceive children on a massive scale.

“These videos are for kids, intentionally injected into the stream via confusing tags, for them to watch instead of legit episodes of beloved shows,” June writes. “Presumably made for ad revenue, they’re just slightly twisted enough that any parent with eyes will be upset when they realise what their kid is seeing.”

Some of the videos are much more twisted. “Peppa drinks bleach for the first time” is a good example. Some might call this parody, but as June argues, kids don’t know the difference between parody and reality. Maybe they watch the bleach-drinking video on YouTube and decide they want to try it.

It’s not news that the internet is full of disturbing content. YouTube certainly isn’t a safe space — even for adults. Now that the company is doubling down on feeding videos to kids, however, one has to wonder if its filters and algorithms are enough to keep what amounts to abusive video spam away from the curious eyes of children.

For now, bad videos only bubble up to YouTube’s human moderation team once they have been flagged by a user. Now that the weird kids’ videos and dark parodies have won international media attention for upsetting toddlers and adults alike, you’d think that YouTube would take a more aggressive tack in terms of keeping bad content off its platform. Instead, the company is turning off the ad money faucet for videos that have already been identified as inappropriate for children. That might lead to fewer disturbing videos targeted at kids, but the ones that already exist will still be out there.

Which brings me back to my original point. YouTube is a crappy babysitter, because YouTube is not for kids — even the version of YouTube that’s specifically designed for kids. Again, I’m not saying this as a parent because I don’t have children. I’m just pointing out a flaw in the technology that’s misled countless parents into thinking their kids are only watching cute cartoons when they start playing YouTube videos. Things can get real dark, real fast.

Next time you need time to yourself, try finding a movie or show that’s made to entertain children, not to trick an algorithm. Disney makes stuff for kids, as do PBS, Nickelodeon, Pixar, HBO, and countless others. Some of that stuff is even free to watch. Just avoid YouTube for this kind of thing. It’s still the wild west. It might always be.