The ever-expanding operations of Uber are defined by two interlocking and zealously guarded sets of information: The things the world-dominating ride-hailing company knows about you, and the things it doesn’t want you to know about it. Both kinds of secrets have been in play in the Superior Court of California in San Francisco, as Ward Spangenberg, a former forensic investigator for Uber, has pursued a wrongful-termination lawsuit against the company.

Image by Jim Cooke

The case, filed in May of last year, has weaponised Uber’s secrecy. Most sensationally, Spangenberg’s suit drew significant press coverage in December for his claims that Uber employees had inappropriately accessed company data to track exes and to spy on celebrities such as Beyoncé. Uber responded to those claims by saying that employees only have access to the amount of customer data they need to do their jobs and that all data access is logged and routinely audited, with thorough investigations performed in the event of potential violations.

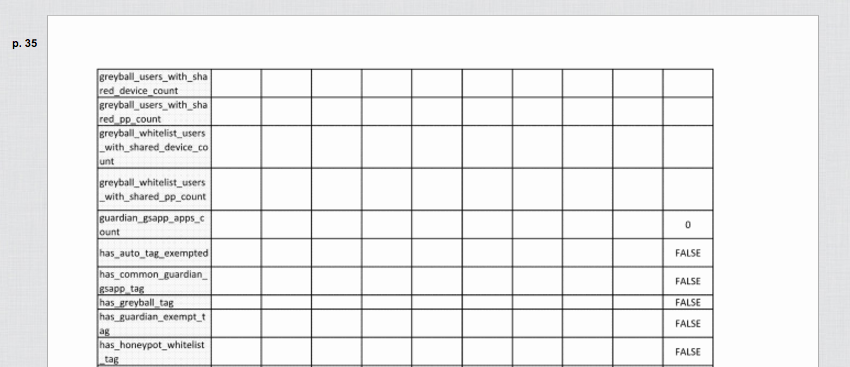

But the suit also offered a glimpse into the hidden mechanics of Uber’s everyday interactions with customers, as recorded in its proprietary corporate database. For two days in October, before Uber convinced the court to seal the material, one of Spangenberg’s filings, publicly visible online, included a spreadsheet listing more than 500 pieces of information that Uber tracks for each of its users.

A snapshot of Exhibit A

The document, presented to back up Spangenberg’s claims about the vast repository of information that Uber employees had the power to access, looks as if it had been pulled directly from the system, complete with unwieldy category names like “has_ride_allowed_low_risk_tag”. Spangenberg included his own Uber data as a sample set.

The near-endless cascade of information demonstrates the immense and granular effort Uber puts into logging every aspect of its interactions with customers — not just when you created your account, but where you were when you created your account (for Spangenberg, an office on Battery Street in downtown San Francisco), how quickly after creating that account you first called an Uber, and how long your account has been active, down to the second.


The spreadsheet also appears to mention a number of programs being run internally at Uber for inscrutable purposes. Among the data fields are 10 different ones whose names contain “greyball”, matching the name of a program that the New York Times revealed this March was used to “deceive authorities worldwide”. Once an account has been given a “greyball” tag, the tag is used, as Uber chief security officer Joe Sullivan recently stated, “to hide the standard city app view for individual riders, enabling Uber to show that same rider a different version” of the app.

Uber says that “greyballing” can be used for a variety of innocuous purposes, such as delivering marketing to specific users, but in some places, such as Portland, Oregon, for a brief period in 2014, Uber “greyballed” the accounts of city officials so that they wouldn’t be able to catch UberX drivers who were breaking local laws by participating in the service. The Justice Department is now investigating that use.

“The origins [for Greyball] were anti-abuse but other teams have found value in it,” said Uber spokesperson Melanie Ensign.

Other apparent code names among the more than 500 possible tags on users’ accounts include “Guardian”, “Sentinel score” and “Honeypot”. Uber declined to explain the nature of specific tags, but Ensign said the Guardian project “is used to detect spoofing, like what’s described in this Bloomberg story from 2015”. The story details Uber’s challenges in China, where drivers were creating fake rides in order to scam the company.

Uber’s lawyers soon got the document sealed, arguing that it contained “confidential, proprietary, and private information… the very existence, content, and form of which are of extreme competitive sensitivity to defendant in that they demonstrate what data [Uber] considers important enough to capture”. They added that it “references the confidential and proprietary code names for Uber’s internally-developed software, databases, and systems”.

The spreadsheet certainly affirms that Uber knows its way around private information. It’s a vivid reminder of the extreme asymmetry between the users — who are simply interested in being able to hail a ride from Point A to Point B — and the machines that are tracking them. Uber’s automated systems gather small and seemingly insignificant details persistently over time, material that would otherwise be forgotten or bore a human surveillant to death.

Asked about the exhibit, Uber security spokeswoman Melanie Ensign explained that it’s “a catalogue of signals used by our machine learning systems to detect potentially fraudulent behaviour or compromised accounts”. Despite the apparent size of the database, Ensign described the material as being based on a small set of things that are “outlined in our terms of service”.

“All of these signals are derivatives of IP address, payment info, device info, location, email, phone number and account history,” Ensign said.

What’s staggering is that Uber can do so much with just those seven pieces of information.

For example, users give Uber access to their location and payment information; Uber then slices and dices that information in myriad ways. The company holds files on the GPS points for the trips you most frequently take; how much you’ve paid for a ride; how you’ve paid for a ride; how much you’ve paid over the past week; when you last cancelled a trip; how many times you’ve cancelled in the last five minutes, 10 minutes, 30 minutes and 300 minutes; how many times you’ve changed your credit card; what email address you signed up with; whether you’ve ever changed your email address.
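Counting cancellations over several look-back windows is a common way to turn a raw event log into fraud signals. The following is a purely illustrative Python sketch, not Uber’s code: only the 5-, 10-, 30- and 300-minute windows come from the spreadsheet, while the function name `cancellation_counts` and the input format (a list of timestamps in seconds) are hypothetical choices made for the example.

```python
from bisect import bisect_left, bisect_right

# Look-back windows mentioned in the spreadsheet, in minutes.
WINDOWS_MINUTES = (5, 10, 30, 300)


def cancellation_counts(cancel_times, now, windows=WINDOWS_MINUTES):
    """Count cancellations falling in each look-back window (hypothetical helper).

    cancel_times: cancellation timestamps in seconds (assumed format).
    now: the current time in seconds.
    Returns a dict mapping window length in minutes to a count.
    """
    times = sorted(cancel_times)
    # Ignore any timestamps later than `now`.
    end = bisect_right(times, now)
    counts = {}
    for minutes in windows:
        cutoff = now - minutes * 60
        # Index of the first cancellation at or after the window's start.
        start = bisect_left(times, cutoff)
        counts[minutes] = end - start
    return counts


# Cancellations 100 s, 500 s, 1,500 s and 10,000 s before "now".
print(cancellation_counts([9_900, 9_500, 8_500, 0], now=10_000))
```

Sorting once and binary-searching each window keeps the work small even for a long history; a production system would more likely maintain rolling counters as events arrive.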

And some of the tags appear to pass judgement on a given Uber user, such as the nefarious-sounding tags “suspected_clique_rider” and “potential_rider_driver_collusion”.

A key goal of all this surveillance is to identify and react to abnormal users: a fraudster, an abuser or, as the Greyball scandal revealed, a government regulator trying to observe how Uber works. Uber has run into trouble in the past when it has treated as “abnormal users” people who simply stand in its way, even when they have legitimate reasons for doing so.

In addition to the code names in the document (Guardian, Sentinel and Honeypot), there are fields called “in_fraud_geofence” and “in_fraud_geofence_pickup”. Geofencing is a technique to digitally rope off a given area. Ensign says these tags could be used, for example, to flag users who are attempting to misuse a promo code: if there were a promo code for taking an Uber to a sporting event, a geofence could help detect someone trying to use the same code for a different trip.
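At its simplest, checking a geofence is a point-in-polygon test: does a pickup coordinate fall inside a boundary drawn on a map? The sketch below is a hypothetical illustration using the standard ray-casting algorithm; the function name `in_geofence` and the square boundary are made up, and treating latitude and longitude as flat x/y coordinates is a simplification that only holds up for small areas.

```python
def in_geofence(point, polygon):
    """Return True if point (lat, lon) lies inside polygon, a list of (lat, lon) vertices.

    Ray casting: cast a ray from the point in the direction of increasing
    longitude and count how many polygon edges it crosses. An odd number of
    crossings means the point is inside.
    """
    lat, lon = point
    inside = False
    n = len(polygon)
    for i in range(n):
        lat1, lon1 = polygon[i]
        lat2, lon2 = polygon[(i + 1) % n]  # wrap around to close the polygon
        # Only edges that straddle the point's latitude can cross the ray.
        if (lat1 > lat) != (lat2 > lat):
            # Longitude at which this edge crosses the point's latitude.
            cross_lon = lon1 + (lat - lat1) * (lon2 - lon1) / (lat2 - lat1)
            if lon < cross_lon:
                inside = not inside
    return inside


# A made-up square "fence" for illustration.
fence = [(0, 0), (0, 10), (10, 10), (10, 0)]
print(in_geofence((5, 5), fence), in_geofence((5, 15), fence))
```

A tag such as “in_fraud_geofence_pickup” could plausibly be derived from a check of this kind run against a trip’s pickup point, though how Uber actually computes it is not described in the document.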

But the fields evoke two New York Times reports about Uber and geofencing: one in March that said Uber tracked whether accounts were being accessed in government buildings (indicating the user might be part of a government agency trying to crack down on Uber), and another in April reporting that Uber geofenced Apple’s headquarters so that the app behaved differently for Apple employees, keeping them from discovering that Uber was “fingerprinting” iPhones.

That fingerprinting allowed Uber to keep track of users even if they erased the contents of their phones, but it violated Apple’s privacy rules for app makers. Unfortunately for Uber, it couldn’t geofence the home address of every Apple employee, and people working outside Cupertino discovered the fingerprinting and the geofencing, leading Apple CEO Tim Cook to personally reprimand Uber CEO Travis Kalanick in 2015.

The table offers insight into how Uber’s tagging system can be used beyond fraud prevention, to present different versions of the app to different users in different places.

We asked Rob Graham, a security consultant with Errata Security who often works with large databases, to review the document and to speculate as to why Uber was so concerned about its public exposure.

“I’m sure it will help Lyft a lot. They will have the context to understand these fields,” he said by email. “Likewise, it will help their adversaries, the Uber-haters in the world (I’m an Uber-lover), who will be able to use these fields to figure out exactly how that notorious ‘Greyballing’ works.”

When asked whether it had seen or benefited from the document, a Lyft spokesperson declined to comment.

Uber is far from alone among technology giants in using machine learning systems to attempt to profile its users at a granular level to find the activity and users that stick out as abnormal. But Uber has a history of misusing its systems of surveillance. Years ago, it used its rider-tracking system “God View” as a party trick, and later used it to casually track a journalist who regularly reported on the company. It used the anti-abuse tool Greyball to subvert government regulators. It’s currently being sued by drivers over a program called Hell that tracked their movements through a hack of the Lyft app to find out which drivers were working for both companies.

The code-named programs and hundreds of tags in the table proffered by Spangenberg suggest there could be other, still unknown ways that Uber is aggressively tapping its data library.