Time and time again, police officers shoot, and sometimes kill, civilians holding harmless objects, later claiming they mistook them for guns: A mobile phone, a Bible, a Wii controller. In early February, police body camera manufacturer Taser announced that it had acquired the artificial intelligence startup Dextro Inc, a “computer vision” research team that claims it can use object recognition software to train officers to better discern actual threats. But privacy experts find the surveillance and profiling possibilities offered by this latest, but certainly not last, upgrade to police body cameras unsettling. Moreover, the question remains: The cameras may be getting smarter, but are they actually making the public safer?

Databases with massive surveillance potential

Dextro is a video analysis tool “trained” to recognise objects when scanning camera footage. Started in 2014 as advertising technology for tagging livestreaming videos, Dextro scans and pinpoints objects in footage that users are looking for, such as a book, a Nike shoe, lines of text, or a gun. Dextro can also pick up motion information, like handshakes or a punch.

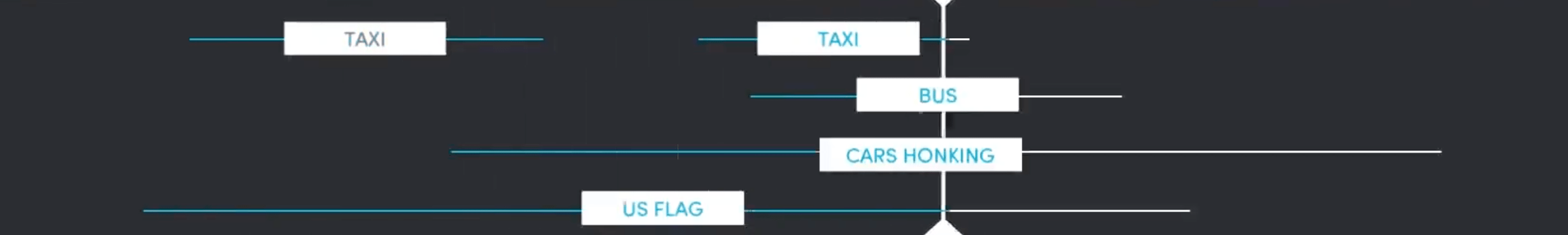

Once Dextro has identified the objects or movements, it creates a timeline for when they appear in the footage, providing timestamps and frequency data. Presumably, an officer could take four hours of boring footage, run it through Dextro, and then automatically redact everything but the moments in which the desired object or motion appears. Officers can then search entire databases for videos based on these key moments like “officer foot chase” or “traffic stop”.
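To make the timeline idea concrete, here is a minimal sketch in Python of how per-frame detections could be merged into timestamped intervals with frequency counts. It is an illustration under stated assumptions, not Dextro's actual implementation: the function names `build_timeline` and `search`, the `max_gap` parameter and the sample labels are all hypothetical.

```python
from collections import defaultdict


def build_timeline(detections, max_gap=1.0):
    """Merge per-frame detections into intervals per label (hypothetical sketch).

    detections: iterable of (timestamp_seconds, label) pairs.
    max_gap: detections of the same label no further apart than this
             (in seconds) are merged into one interval.
    """
    by_label = defaultdict(list)
    for ts, label in detections:
        by_label[label].append(ts)

    timeline = {}
    for label, stamps in by_label.items():
        stamps.sort()
        intervals = [[stamps[0], stamps[0]]]
        for ts in stamps[1:]:
            if ts - intervals[-1][1] <= max_gap:
                intervals[-1][1] = ts        # extend the current interval
            else:
                intervals.append([ts, ts])   # start a new interval
        timeline[label] = {
            "intervals": [tuple(i) for i in intervals],
            "detections": len(stamps),       # simple frequency count
        }
    return timeline


def search(timeline, label):
    """Return the intervals in which a label appears, or an empty list."""
    return timeline.get(label, {}).get("intervals", [])


# Made-up sample data: (seconds into the footage, detected label)
detections = [
    (0.0, "phone"), (0.5, "phone"), (1.0, "phone"),
    (3.0, "handshake"),
    (10.0, "phone"),
]
timeline = build_timeline(detections)
```

In this sketch, `search(timeline, "phone")` returns the stretches of footage in which a phone was detected. The same lookup, run across many indexed videos, is what would turn a redaction aid into a search tool.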

Image: Front page of Dextro.co

Image: Timestamp demonstration via Dextro.co

“It’s really about improving redaction,” Marcus Womack, Taser’s executive vice president, told Gizmodo. Before body camera footage can be released to the public and news outlets, police must blur faces and tattoos and edit out audio to maintain the privacy of those being filmed. “We’ve heard agencies say for every hour of video it takes eight hours of manual processing,” Womack said.

The other part of Dextro’s pitch is its capabilities as a police training tool. Once Dextro has been taught to recognise an object, it can detect it in any video footage it analyses, including dashcams, CCTV surveillance, and even aerial drone footage. Womack imagined an example of how that could help law enforcement: An officer’s body camera records an incident in which a cop mistook a mobile phone for a gun; the software helps pinpoint the precise moments when the cop made a mistake; and the video is later used for training. Police departments could potentially analyse and compile hundreds of videos for similar purposes. That’s a hypothetical, ideal scenario.

While Dextro’s technical capabilities seem effective, what concerns experts isn’t just Dextro itself, but how its use, or misuse, can enhance the already powerful surveillance capabilities of police.

“This gives police departments as a whole massive search capabilities that could be used to turn these tools into surveillance cameras,” Jay Stanley, a senior policy analyst with the ACLU, told Gizmodo. “Police body cameras capture a lot of video about a lot of people that’s not in the public interest… and for privacy reasons should not be indexed in the way this AI proposes to do so.”

Computer vision and ‘cyborg’ policing

There’s very strong support for body cameras throughout the US, especially from black communities, but experts question whether the public knows the full extent of what the cameras capture, and how tech like Dextro’s AI can be added to systems after the fact. Given how object recognition can compound with many other police technologies — surveillance footage access, drones, Stingrays, face recognition databases, licence plate readers — this technology can evolve in deeply troubling ways.

“Taxpayers wanted an accountability tool, not a surveillance tool,” Dr Alvaro Bedoya, Executive Director of the Center on Privacy & Technology at Georgetown Law, told Gizmodo. Bedoya co-authored a landmark report on face recognition and police technology, which revealed that half of all American adults are in law enforcement face recognition databases. On the Dextro tech he added, “It doesn’t strike me as an accountability tool.”

Taser’s VP Womack says there’s no current timeline or pricing for Dextro’s release, though he imagines the product will eventually go on sale as an optional, stand-alone upgrade after more R&D testing. Its release would have massive implications for Taser’s police clients all over the US, including the Los Angeles, Las Vegas, Atlanta and Minneapolis police departments, as well as Chicago PD, the second largest police department in the US (NYPD has a disputed contract with Seattle-based competitor VieVu). In its announcement of the acquisition, Taser said Dextro will perform “deep dive analysis” on more than five million gigabytes of stored video data.

Turning police body camera and surveillance footage into a searchable database would change the very nature of public spaces by chipping away at our ability to be anonymous. Taser denies that it has any current plans to use Dextro for face recognition, but confirmed that footage is typically only redacted when it’s released to the public. Internally, agencies can index and categorise uncensored footage, with full audio and un-blurred faces, as they see fit. That’s potentially troubling given how much data can be gleaned from even a passing glance in front of cameras.

“There is a wealth of information in images even at very low resolutions,” Adam Harvey, the Berlin-based artist and anti-surveillance advocate, told Gizmodo, explaining that object recognition is a rapidly accelerating field, capable of identifying tattoos, emotions and so on. How long until this software becomes commonplace? “If Taser wants to remain competitive in the field of AI and computer vision, building these multi-modal biometric profiles is the inevitable path forward,” he said. Harvey speculates that eventually, computer vision technology will allow cops to recognise people based on a whole suite of identifying factors as they simply walk by any camera.

While Taser’s Womack insists Dextro’s technology is about relieving the burden on officers to edit and redact hundreds of hours of footage, Harvey says that Dextro’s AI-backed automation fundamentally changes the law enforcement process by accelerating the collection of evidence.

“Computer vision and facial recognition enable new ways of enforcing laws,” Harvey told Gizmodo. “Violations can be determined faster, cheaper, and over extended periods of time. Police officers are quickly becoming networked cyborgs, augmented by the speed and power of technologies once developed for war,” he said, referring in part to police drones, GPS and many other police resources that originate from the Department of Defence. On Dextro, Harvey added, “I think there are definitely privacy concerns here, but at a larger scale the issue is the amplification of enforcing laws that were originally designed to be enforced on a human-to-human level.”

Regulation and the anti-activist climate

Ironically, while Taser pitches Dextro and its database capabilities as privacy enhancers, functionally speaking, they present the opportunity to dismantle privacy by changing the function of law enforcement and the nature of anonymity in public spaces. The difference between use and misuse will largely depend on what the law allows.

Police departments have already used face recognition and social media surveillance software to track Black Lives Matter activists during protests. And in 18 US states, Republican lawmakers have introduced bills that would punish protesters, likely reactions to Black Lives Matter, #NotMyPresident and NoDAPL protests. The proposed bills vary, but some would punish protesters who wear masks or block highways. Object and facial recognition software would make it much easier for law enforcement to identify protesters, especially if they’re barred from wearing masks and bandanas that hide their faces.

We’re giving up a lot of privacy in exchange for automated “video redaction”. And advancing this technology without proper regulation opens the door for troubling misuse.

Police accountability

“It’s really important that communities put in place policies that govern their use of technologies,” Taser’s Womack said. “It’s really imperative that there is an ongoing discussion with the community and the police about how the technology would be used and governed.”

But for these discussions to have any kind of effect, police departments need to be held accountable for their use of these technologies.

When it comes to body cameras, that accountability has been inconsistent at best. The officers who shot Alton Sterling last July both claimed their cameras “fell off”; an Albuquerque officer said a “malfunction” stopped the camera from recording his fatal shootout with a 19-year-old; and in Oakland, police said they “mistakenly” deleted hundreds of hours of stored footage.

And even when the video technology is used properly, the existence of footage is not a guarantee of police accountability.

Matthew Mitchell is a security researcher and encryption specialist who runs Crypto Harlem, a workshop in the historically black New York City neighbourhood that teaches newcomers how to use encryption tools like PGP, WhatsApp and Tor. Mitchell says the entire premise that having a video of an incident fosters police transparency or accountability is flawed.

“You’ll never get camera footage that’s as good as the public execution of Eric Garner,” Mitchell told Gizmodo.

In 2014, a bystander filmed NYPD officer Daniel Pantaleo placing Garner in a chokehold. Garner suffocated, the video went viral, and a grand jury decided not to indict the officer who killed him, even as millions watched Garner die at Pantaleo’s hands. Many supporters of body cameras believe in the importance of transparency, but what does transparency matter if it doesn’t lead to a conviction? Especially now that Attorney General Jeff Sessions, who leads the Department of Justice, has suggested that he won’t monitor police departments accused of racial bias and chronic lack of accountability?

“That is probably the gold standard, as good as it can possibly get, of this technology serving the people,” said Mitchell of the Eric Garner footage. “And there was no justice.”

Mitchell argues that because body cameras are designed to point away from the officers themselves, law enforcement won’t be subject to the same amount of data-sourced scrutiny as the public. And as cameras are adopted more widely and the data extrapolated from this footage becomes the bedrock for officer training and policy decisions, the biases inherent to body cameras can become embedded in the larger police force.

“If we only get data of one perspective,” Mitchell said, “we’re only gonna have evidence of one perspective.” Mitchell says AI will exacerbate, rather than remedy, this.

Worst-case scenarios

Given these concerns about privacy and overreach, Dextro co-founder David Luan was willing to address potential misuses of the technology. The first: Racial bias. Officers have long been trained to recognise certain jewellery, brands, tattoos and hand movements as “gang related” (remember when hoodies were a cause for national concern in the US?). Because Dextro lets users choose their own objects for recognition, couldn’t the software automate racial profiling by scanning hundreds of hours of data for these same prejudiced indicators of criminality?

Luan said Dextro has been in contact with policy experts and academics at Stanford and UCLA about the risk of profiling.

“The goal of this is something that’s used after the fact to determine and basically release footage to the public and help departments speed up their ability to review lots of evidence,” Luan said. “As a result, there’s always a human left in there. Our goal in that is that we’re not replacing that last human judgment. There’s a separate issue of ‘Is that human judgment good?’”

Womack said that Taser would work closely with agencies and communities on the varying filters. Mitchell offered that officers using Dextro should disclose which keywords they search for, but acknowledged that this is asking a lot, since departments are already reluctant to release video footage.

Human error, or worse, menace, could be disastrous with this level of data comprehension. So I asked Luan: What if an officer wanted to misuse Dextro for his own defence? Could police officers, after a fatal shooting, go back to the footage to identify objects they could then later use as justification? Civil rights activists have long cautioned that, in cities where officers can view body cam footage before filing official reports, officers have the opportunity to tailor their report to the footage to avoid contradicting themselves. In fact, police departments in Boston, Aurora, Dallas and Las Vegas explicitly encourage officers to view the body cam footage before completing the written report.

In our conversation, Luan brought up a great point: An officer doesn’t need advanced AI technology to exaggerate evidence. “The fact that the footage exists already creates the ability to do [that],” he said. The technology doesn’t create officer malfeasance any more than it disables it.

Tech’s buzzy litany of cliches like “breakthrough” or “innovative” mischaracterises what technology actually does. Body cameras are a potential resource for enhancing police accountability; they don’t automatically make individual officers accountable or magically eliminate all possibilities of abuse.

I asked Luan if it worries him that he won’t have complete control over how this technology is used. “We can basically craft that argument over anything that’s been invented over the last 100 years,” Luan said. “The goal for the technology is not to make decisions for people. It’s people making the decisions at the end. We’re just helping speed up the process at which people can find the info they needed to make a decision, [and] speed up the search for their definition of truth inside video.”

Roughly 4000 US police departments use body cameras, with the two largest departments, the Chicago PD and NYPD, announcing plans to equip every patrolling officer by 2019. Body cameras are in schools and worn by bouncers, and face recognition is part of international travel procedures in Paris and Australia. Taser’s object-recognition conceit may be the latest attempt to rebuild community trust in law enforcement, but the realities of “computer vision” and object recognition add dangerous new layers of surveillance opportunities. The privacy the public would sacrifice is not worth what such technology has to offer. And without regulations, there are no safeguards to prevent abuse of this software.

“There’s this idea that we can’t be bad if we’re using computers. It leads to ‘I was just following an algorithm’ instead of ‘I was just following orders,’” Mitchell said.