There’s a saying among futurists that a human-equivalent artificial intelligence will be our last invention. After that, AIs will be capable of designing virtually anything on their own — including themselves. Here’s how a recursively self-improving AI could transform itself into a superintelligent machine.

To understand the potential of artificial intelligence, it’s critical to recognise that an AI might eventually be able to modify itself, and that these modifications could allow it to increase its intelligence extremely fast.

Passing a Critical Threshold

Once sophisticated enough, an AI will be able to engage in what’s called “recursive self-improvement.” As an AI becomes smarter and more capable, it will subsequently become better at the task of developing its internal cognitive functions. In turn, these modifications will kickstart a cascading series of improvements, each one making the AI smarter at the task of improving itself. It’s an advantage that we biological humans simply don’t have.

As AI theorist Eliezer Yudkowsky notes in his essay, “Artificial Intelligence as a Positive and Negative Factor in Global Risk”:

An artificial intelligence could rewrite its code from scratch — it could change the underlying dynamics of optimization. Such an optimization process would wrap around much more strongly than either evolution accumulating adaptations or humans accumulating knowledge. The key implication for our purposes is that AI might make a huge jump in intelligence after reaching some threshold of criticality.

When it comes to the speed of these improvements, Yudkowsky says it’s important not to confuse the current speed of AI research with the speed of a real AI once built. Those are two very different things. What’s more, there’s no reason to believe that an AI won’t show a sudden huge leap in intelligence, resulting in an “intelligence explosion” (a better term for the Singularity). He draws an analogy to the expansion of the human brain and prefrontal cortex, a key threshold in intelligence that allowed us to make a profound evolutionary leap in real-world effectiveness: “we went from caves to skyscrapers in the blink of an evolutionary eye.”

The Path to Self-Modifying AI

Code that’s capable of altering its own instructions while it’s still executing has been around for a while. Typically, it’s done to reduce the instruction path length and improve performance, or to simply reduce repetitively similar code. But for all intents and purposes, there are no self-aware, self-improving AI systems today.
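To make the idea concrete, here is a minimal Python sketch of a routine that replaces itself while the program is running. It is only a high-level analogue: classic self-modifying code overwrites machine instructions in memory, whereas this example merely rebinds a method. The class name `SineLookup` and its attributes are hypothetical, invented for illustration.

```python
import math


class SineLookup:
    """Toy analogue of self-modifying code (hypothetical example)."""

    def __init__(self):
        self.calls_to_slow_path = 0
        # The initial "instructions": a slow routine that also does setup.
        self.lookup = self._build_then_lookup

    def _build_then_lookup(self, degrees):
        self.calls_to_slow_path += 1
        # One-off setup work: precompute a sine value for every whole degree.
        table = [math.sin(math.radians(d)) for d in range(360)]
        # The routine now overwrites itself with a shorter one, so every
        # later call skips the setup and goes straight to the table.
        self.lookup = lambda deg: table[deg % 360]
        return self.lookup(degrees)


sines = SineLookup()
first = sines.lookup(90)    # runs the slow path, then swaps it out
second = sines.lookup(450)  # runs only the short replacement
```

After the first call, the slow path is never executed again, which is the "shorter instruction path" motivation in miniature.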

But as Our Final Invention author James Barrat told me, we do have software that can write software.

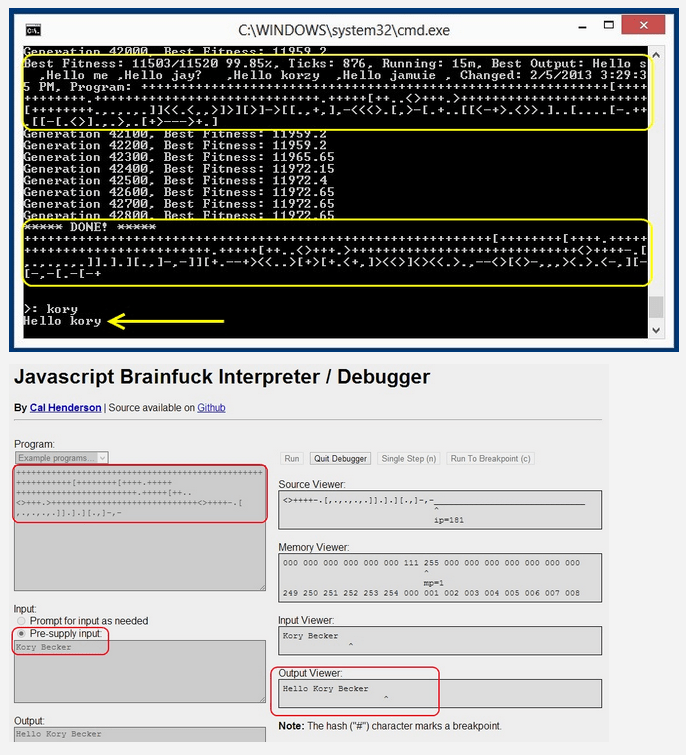

“Genetic programming is a machine-learning technique that harnesses the power of natural selection to find answers to problems it would take humans a long time, even years, to solve,” he told io9. “It’s also used to write innovative, high-powered software.”

For example, Primary Objects has embarked on a project that uses simple artificial intelligence to write programs. The developers are using genetic algorithms imbued with self-modifying, self-improving code and the minimalist (but Turing-complete) brainfuck programming language. They have chosen this language as a way to challenge the program — it has to teach itself from scratch how to do something as simple as writing “Hello World!” with only eight simple commands. But calling this an AI approach is a bit of a stretch; the genetic algorithms are a brute force way of getting a desirable result. That said, a follow-up approach in which the AI was able to generate programs for accepting user input appears more promising.
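As a rough illustration of the approach, the Python sketch below pairs a small brainfuck interpreter with a mutation-only genetic search. It is not the Primary Objects implementation: the function names, the fitness measure, the population settings, and the absence of crossover are all assumptions made for this example.

```python
import random

COMMANDS = "><+-.,[]"  # the eight brainfuck commands


def run_bf(program, input_text="", max_steps=10_000, tape_len=256):
    """Run a brainfuck program; return its output, or None if brackets are unbalanced."""
    jumps, stack = {}, []
    for pos, cmd in enumerate(program):
        if cmd == "[":
            stack.append(pos)
        elif cmd == "]":
            if not stack:
                return None
            start = stack.pop()
            jumps[start], jumps[pos] = pos, start
    if stack:
        return None

    tape, ptr, pc, steps = [0] * tape_len, 0, 0, 0
    inputs, out = iter(input_text), []
    # The step limit stops evolved programs that would loop forever.
    while pc < len(program) and steps < max_steps:
        cmd = program[pc]
        if cmd == ">":
            ptr = (ptr + 1) % tape_len
        elif cmd == "<":
            ptr = (ptr - 1) % tape_len
        elif cmd == "+":
            tape[ptr] = (tape[ptr] + 1) % 256
        elif cmd == "-":
            tape[ptr] = (tape[ptr] - 1) % 256
        elif cmd == ".":
            out.append(chr(tape[ptr]))
        elif cmd == ",":
            tape[ptr] = ord(next(inputs, "\0")) % 256
        elif cmd == "[" and tape[ptr] == 0:
            pc = jumps[pc]  # skip the loop body
        elif cmd == "]" and tape[ptr] != 0:
            pc = jumps[pc]  # jump back to the start of the loop
        pc += 1
        steps += 1
    return "".join(out)


def fitness(program, target):
    """Assumed fitness: 0 is a perfect match, more negative is worse."""
    out = run_bf(program, max_steps=2_000)
    if out is None:
        return float("-inf")
    score = 0
    for i, want in enumerate(target):
        got = ord(out[i]) if i < len(out) else 0
        score -= abs(ord(want) - got)
    return score - 256 * max(0, len(out) - len(target))  # penalise extra output


def mutate(program, rng):
    """Insert, delete, or replace one randomly chosen command."""
    chars = list(program)
    choice = rng.random()
    pos = rng.randrange(len(chars) + 1)
    if choice < 0.4 or not chars:
        chars.insert(pos, rng.choice(COMMANDS))
    elif choice < 0.7:
        del chars[min(pos, len(chars) - 1)]
    else:
        chars[min(pos, len(chars) - 1)] = rng.choice(COMMANDS)
    return "".join(chars)


def evolve(target, generations=60, pop_size=30, seed=0):
    """Keep the fittest fifth of each generation and refill with mutants."""
    rng = random.Random(seed)
    population = ["+"] * pop_size
    history = []  # best fitness seen at the start of each generation
    for _ in range(generations):
        population.sort(key=lambda p: fitness(p, target), reverse=True)
        history.append(fitness(population[0], target))
        survivors = population[: pop_size // 5]
        children = [mutate(rng.choice(survivors), rng)
                    for _ in range(pop_size - len(survivors))]
        population = survivors + children
    return max(population, key=lambda p: fitness(p, target)), history
```

Calling `evolve("A")` searches for a program that prints a single letter. Because the best programs always survive into the next generation, the best score can never get worse, but nothing guarantees the search reaches a perfect score, which is the brute-force character noted above.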

Relatedly, Larry Diehl has done similar work using a stack-based language.

Barrat also told me about software that learns — programming techniques that are grouped under the term “machine learning.”

The Pentagon is particularly interested in this game. Through DARPA, it’s hoping to develop a computer that can teach itself. Ultimately, it wants to create machines that are able to perform a number of complex tasks, like unsupervised learning, vision, planning, and statistical model selection. These computers will even be used to help us make decisions when the data is too complex for us to understand on our own. Such an architecture could represent an important step in bootstrapping, the ability of an AI to teach itself and then rewrite and improve upon its initial programming.

In conjunction with this kind of research, cognitive approaches to brain emulation could also lead to human-like AI. Given that they’d be computer-based, and assuming they could have access to their own source code, these agents could embark upon self-modification. More realistically, however, it’s likely that a superintelligence will emerge from an expert system set with the task of improving its own intelligence. Alternatively, specialised expert systems could design other artificial intelligences, and through their cumulative efforts, develop a system that eventually becomes greater than the sum of its parts.

Oh, No You Don’t

Given that an artificial superintelligence (ASI) poses an existential risk, it’s important to consider the ways in which we might be able to prevent an AI from improving itself beyond our capacity to control. That said, limitations or provisions may exist that would preclude an AI from embarking on the path towards self-engineering. James D. Miller, author of Singularity Rising, provided me with a list of four reasons why an AI might not be able to do so:

1. It might have source code that causes it to not want to modify itself.

2. The first human equivalent AI might require massive amounts of hardware and so for a short time it would not be possible to get the extra hardware needed to modify itself.

3. The first human equivalent AI might be a brain emulation (as suggested by Robin Hanson) and this would be as hard to modify as it is for me to modify, say, the copy of Minecraft that my son constantly uses. This might happen if we’re able to copy the brain before we really understand it. But still you would think we could at least speed up everything.

4. If it has terminal values, it wouldn’t want to modify these values because doing so would make it less likely to achieve its terminal values.

And by terminal values Miller is referring to an ultimate goal, or an end-in-itself. Yudkowsky describes it as a “supergoal.” A major concern is that an amoral ASI will sweep humanity aside as it works to accomplish its terminal value, or that its ultimate goal is the re-engineering of humanity in a grossly undesirable way (at least from our perspective).

Miller says an AI could get faster simply by running on faster processors.

“It could also make changes to its software to get more efficient, or design or steal better hardware. It would do this so it could better achieve its terminal values,” he says. “An AI that mastered nanotechnology would probably expand at almost the speed of light, incorporating everything into itself.”

But we may not be completely helpless. According to Barrat, once scientists have achieved Artificial General Intelligence — a human-like AI — they could restrict its access to networks, hardware, and software, in order to prevent an intelligence explosion.

“However, as I propose in my book, an AI approaching AGI may develop survival skills like deceiving its makers about its rate of development. It could play dumb until it comprehended its environment well enough to escape it.”

In terms of being able to control this process, Miller says that the best way would be to create an AI that only wanted to modify itself in ways we would approve.

“So if you create an AI that has a terminal value of friendliness to humanity, the AI would not want to change itself in a way that caused it to be unfriendly to humanity,” he says. “This way as the AI got smarter, it would use its enhanced intelligence to increase the odds that it did not change itself in a manner that harms us.”

Fast or Slow?

As noted earlier, a recursively improving AI could increase its intelligence extremely quickly. Alternatively, the process could take time for various reasons, such as technological complexity or limited access to resources. Whether we should expect a fast or a slow take-off remains an open question.

“I’m a believer in the fast take-off version of the intelligence explosion,” says Barrat. “Once a self-aware, self-improving AI of human-level or better intelligence exists, it’s hard to know how quickly it will be able to improve itself. Its rate of improvement will depend on its software, hardware, and networking capabilities.”

But to be safe, Barrat says we should assume that the recursive self-improvement of an AGI will occur very rapidly. As a computer it will wield computer superpowers — the ability to run 24/7 without pause, rapidly access vast databases, conduct complex experiments, perhaps even clone itself to swarm computational problems, and more.

“From there, the AGI would be interested in pursuing whatever goals it was programmed with — such as research, exploration, or finance. According to AI theorist Steve Omohundro’s Basic Drives analysis, self-improvement would be a sure-fire way to improve its chances of success,” says Barrat. “So would self-protection, resource acquisition, creativity, and efficiency. Without a provably reliable ethical system, its drives would conflict with ours, and it would pose an existential threat.”

Miller agrees.

“I think shortly after an AI achieves human level intelligence it will upgrade itself to super intelligence,” he told me. “At the very least the AI could make lots of copies of itself each with a minor different change and then see if any of the new versions of itself were better. Then it could make this the new ‘official’ version of itself and keep doing this. Any AI would have to fear that if it doesn’t quickly upgrade another AI would and take all of the resources of the universe for itself.”
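The copy, tweak, and compare loop Miller describes can be sketched in a few lines of Python. Everything here is a toy assumption: a "version" is just a list of numbers, and `benchmark` is a hypothetical stand-in for whatever test would tell an AI that one version of itself is better than another.

```python
import random


def benchmark(params):
    """Hypothetical capability score (higher is better); peaks when every value is 3.0."""
    return -sum((p - 3.0) ** 2 for p in params)


def upgrade_round(official, rng, copies=20, step=0.1):
    """Make many slightly altered copies and keep the best one only if it beats the original."""
    candidates = [[p + rng.gauss(0, step) for p in official]
                  for _ in range(copies)]
    best = max(candidates, key=benchmark)
    return best if benchmark(best) > benchmark(official) else official


def self_improve(start, rounds=100, seed=0):
    """Repeat the upgrade round, recording the score of each 'official' version."""
    rng = random.Random(seed)
    official = list(start)
    scores = [benchmark(official)]
    for _ in range(rounds):
        official = upgrade_round(official, rng)
        scores.append(benchmark(official))
    return official, scores


final_version, scores = self_improve([0.0, 0.0, 0.0])
```

Since a copy is adopted only when it scores higher, the recorded scores never decrease. The sketch says nothing about how fast a real system would improve; that depends entirely on how good its benchmark is and how cheap its copies are.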

Which brings up a point that’s not often discussed in AI circles: the potential for AGIs to compete with other AGIs. If even a modicum of self-preservation is coded into a strong artificial intelligence (and that sense of self-preservation could be the detection of an obstruction to its terminal value), it could enter into a lightning-fast arms race along those verticals designed to ensure its ongoing existence and future freedom-of-action. And in fact, while many people fear a so-called “robot apocalypse” aimed directly at extinguishing our civilisation, I personally feel that the real danger to our ongoing existence lies in the potential for us to be collateral damage as advanced AGIs battle it out for supremacy; we may find ourselves in the line of fire. Indeed, building a safe AI will be a monumental, if not intractable, task.

Sources: Global Catastrophic Risks, ed. Bostrom & Cirkovic | Singularity Rising by James D. Miller | Our Final Invention by James Barrat

Top image: agsandrew/shutterstock | prison by doomu/shutterstock | electronic faces by Bruce Rolff/shutterstock

Follow me on Twitter: @dvorsky