Halfway between Brooklyn and Montauk, a steel cupola propped up on wooden legs once looked out over the Long Island Sound and beyond the horizon. Built in the first years of the 20th century, Wardenclyffe Tower served as the centrepiece of a real-life mad scientist’s laboratory. Lever pulling, lightning bolts, maniacal laughter – this is where that sort of thing was supposed to happen. And it almost did.

That mad scientist was Nikola Tesla, whose mission was to create a way to send wireless electricity as far as London. Thanks to funding from Wall Street luminaries like JP Morgan, the lab itself could have been the birthplace of our wireless future. The only problem? The cupola and its ambitions were destroyed due to a few poor business decisions and a lot of bad luck, long before Tesla could realise his dreams.

The early days of wireless technology were marked by struggle and confusion but also glory and Earth-shattering instances of scientific achievement. Wireless technology is brutally difficult. Progress from the first theories of electromagnetic waves to the first telegraph signal didn’t happen in a matter of years. It took decades. Advancing from sending little chirps across a waterway to connecting vast networks of computers over the air took well over a century.

But innovation tends to snowball. In the past few years, we’ve seen rapid advances in everything from cellular communications to wireless power and ideas as wild as using lasers to beam internet down to Earth from space. To understand what’s next, however, you have to understand how we got here.

The early days of wireless technology

Wireless communication has served as the lynchpin of modern society since the invention of the telegram. You could almost attribute the technology to Paul Reuter, who enlisted pigeons to carry stock quotes between Berlin and Paris in the mid-19th century. (After all, pigeons are technically wireless.) In the years that followed, however, a new technology called wireless telegraphy entered its nascent stages.

Wireless telegraphy – also known as radio telegraphy – involves transmitting radio waves through the air in short and long pulses. These “dots” and “dashes” – the building blocks of Morse code – are then picked up by a receiver and translated into text by a receiving operator. Put bluntly, this new method of communication enabled humans to communicate over vast distances with relative ease.
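To make the dots and dashes concrete, here is a minimal Python sketch of how text maps to Morse code and back. The letter table is standard International Morse code. The function names and the choice of a space between letters and ` / ` between words are illustrative assumptions, not something an operator's key would produce.

```python
# International Morse code for the 26 letters (digits and punctuation omitted).
MORSE = {
    "A": ".-",   "B": "-...", "C": "-.-.", "D": "-..",  "E": ".",
    "F": "..-.", "G": "--.",  "H": "....", "I": "..",   "J": ".---",
    "K": "-.-",  "L": ".-..", "M": "--",   "N": "-.",   "O": "---",
    "P": ".--.", "Q": "--.-", "R": ".-.",  "S": "...",  "T": "-",
    "U": "..-",  "V": "...-", "W": ".--",  "X": "-..-", "Y": "-.--",
    "Z": "--..",
}
REVERSE = {code: letter for letter, code in MORSE.items()}


def encode(message: str) -> str:
    """Turn text into dots and dashes; unknown characters are skipped."""
    words = message.upper().split()
    return " / ".join(
        " ".join(MORSE[ch] for ch in word if ch in MORSE) for word in words
    )


def decode(signal: str) -> str:
    """Play the receiving operator: turn dots and dashes back into text."""
    return " ".join(
        "".join(REVERSE[code] for code in word.split())
        for word in signal.split(" / ")
    )


print(encode("SOS"))            # ... --- ...
print(decode("... --- ..."))    # SOS
```

A short pulse is a dot and a long pulse is a dash, so the distress call above is three short, three long, three short.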

In order to understand how this new form of communication works, it helps to understand the early history. The origins of wireless technology can be pegged to the year 1865, when Scottish scientist James Clerk Maxwell published a paper about electric and magnetic fields. “A Dynamical Theory of the Electromagnetic Field” is now regarded as a foundational work of physics that not only laid the groundwork for wireless communications but also served as the starting point for Albert Einstein’s research into relativity. Maxwell correctly theorised that electromagnetic waves could travel at the speed of light and, in 1873, published a set of equations (Maxwell’s equations) that would serve as the foundation of all electric technology. Things really got interesting, however, when other scientists started putting Maxwell’s equations into practice.

Heinrich Hertz proved the existence of electromagnetic waves in a series of experiments between 1886 and 1889. However, after essentially building the world’s first radio – a badass gadget known as a spark gap transmitter – the German scientist actually thought it was all pretty boring. “It’s of no use whatsoever,” Hertz said at the time. “This is just an experiment that proves Maestro Maxwell was right – we just have these mysterious electromagnetic waves that we cannot see with the naked eye. But they are there.”

Turns out they were pretty useful. The international unit now used for frequency in radio waves, of course, is named after Hertz.

What followed Hertz’s experiments was a flurry of invention and innovation. The two biggest names that emerged in the final years of the 19th century were Guglielmo Marconi, who was primarily interested in wireless communications, and Nikola Tesla, who saw great promise in wireless electricity.

British postal engineers posing with Marconi’s telegraphy equipment. (Image: Cardiff Council Flat Holm Project)

Broadly speaking, Marconi is credited with building the world’s first radio station and marketing the world’s first wireless telegraphy equipment in the late 1890s. But in those same years, German scientist Ferdinand Braun was doing similar work using an induction coil designed and patented by Tesla. Marconi and Braun would go on to win the 1909 Nobel Prize for their accomplishments in wireless telegraphy.

Tesla, quite famously, was not so lucky. The scientist had remained resolute in creating a viable technology for wireless power. But after he failed to produce a viable wireless power transmitter with the Wardenclyffe Tower at his Long Island laboratory, Tesla died penniless in Room 3327 at the New Yorker Hotel, 34 years after the Nobel Prize was awarded to Marconi and Braun. That same year, 1943, the United States Supreme Court ruled that Tesla’s 1897 patent for a transmitter and receiver predated Marconi’s inventions, tacitly acknowledging Tesla’s pioneering contributions to the invention of telegraphy and radio technology. Perhaps more significantly, it was Tesla’s contributions that have proved more long-lasting and relevant to wireless technology today.

“Tesla actually goes a long way in thinking about how you’d send thousands of messages in its own frequency,” W. Bernard Carlson, author of Tesla: Inventor of the Electrical Age and a history professor at the University of Virginia, told Gizmodo in an interview. “Marconi was really broadcast technology which wasn’t really desirable for military purposes or other purposes.”

And as we’ll see, sending multiple messages on the same frequency would become absolutely integral to the development of wireless technology in the decades after Tesla.

Audio, video, disco

The first wireless transmitters in the late 1890s ushered in a century of innovation. While wireless technology effectively amounted to sending a single signal for a few miles, Victorian-era technologists would soon learn how to wirelessly transmit signals carrying audio, video, and eventually any type of data over any distance. In 1920, William Edmund Scripps started broadcasting “Detroit News Radiophone” over the radio, and a year later, the Detroit police introduced mobile radios into squad cars. In 1927, a General Electric lab in Schenectady, New York would become home to the world’s first television station, where high-powered radio frequency transmitters could send a signal carrying audio and video to a three-inch-by-three-inch screen about three miles away.

These are all major moments in the history of wireless technology, but with the exception of the police radios, none of it was mobile. Broadcasting was also, by definition, a one-way stream of data. Then, along came an invention called Motorola.

Produced by the Galvin Manufacturing Corporation, the Motorola radio became the world’s first car radio-phone in 1930. The two-way communicators were first adopted by police departments, and later, a more advanced and compact version called the “Handie Talkie” would earn historical importance for its role in World War II. The device’s official model number was SCR536.

A portion of an early Motorola Radio ad, showing the handheld two-way communicator. (Image: Motorola)

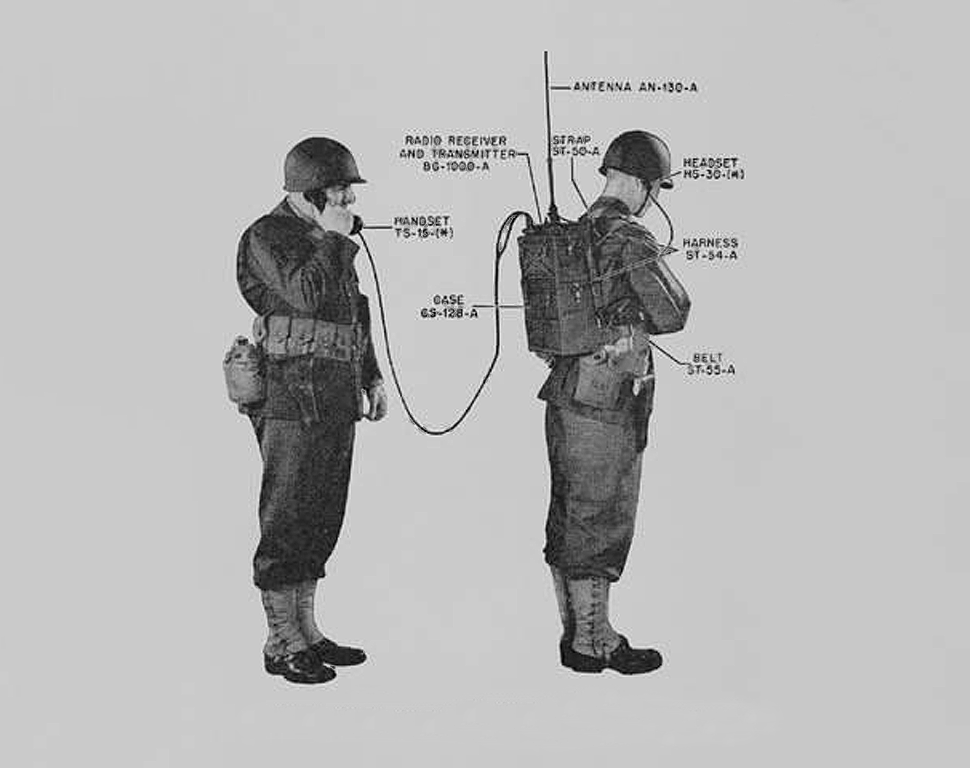

Suddenly, all of these wireless gadgets were starting to look familiar to 21st-century gadget enthusiasts. They were handheld, battery-powered, and pretty fucking cool. However, long-range mobile communications still required a crippling amount of hardware to be dependable. In 1943, Galvin released the Motorola SCR300 – also known as the “Walkie Talkie” – a hulking, 16kg FM radio device with a range of 10 to 20 miles that was worn like a backpack and sometimes required two people to operate. You probably remember seeing these in Saving Private Ryan.

A diagram showing the proper use of a Walkie-Talkie (Image: Motorola)

This idea had legs. FM (frequency modulation) radio was patented a decade before the Walkie-Talkie’s release and quickly gained popularity over its predecessor AM (amplitude modulation), since FM radio could carry higher quality audio transmission. So Galvin latched on to the idea that a two-way FM radio would be great for people to talk to each other. Taxis started using two-way Motorola radios in 1944, and after the war, in 1946, Motorola introduced the world’s first car phone: the Motorola Radiotelephone. The following year, Galvin changed its company name to Motorola.

It wasn’t long before a whole infrastructure developed around this technology. The Bell System teamed up with Western Electric around this time to create the General Mobile Radiotelephone Service. Using VHF (very high frequency) equipment and FM radios, this service divided itself into two systems: one for highways and one for cities. The necessary equipment was actually built into the car itself, with batteries under the hood, a transmitter in the trunk, and a handset near the driver’s seat. Motorola, General Electric, and others built similar systems.

A Trigild Gemini 2 pre-cellular “briefcase phone” built on the Mobile Telephone Service (MTS) first developed by the Bell System in 1946. (Photo: Wikipedia)

A wide range of increasingly smaller devices started hitting the market in the 1950s. Eventually, radio-powered mobile phones could fit inside of a briefcase. These were appropriately called “briefcase phones,” and people thought they were really next level at the time. It wasn’t until the late 1960s that Bell Labs developed Advanced Mobile Phone System (AMPS) technology and laid the foundation for mobile phones as we know them today. Put more bluntly, AMPS blew the lid off the barn. The original radio telephones are now known as 0G mobile phone technology. AMPS became 1G.

The cellular revolution

Motorola researcher Martin Cooper made the world’s first handheld mobile telephone call on a New York footpath in 1973. The device was very similar to the grey, brick-sized behemoths our parents used way back when, and it weighed a hulking 1.1kg. Battery life sucked, too – apparently, it lasted just 30 minutes and took 10 hours to charge – but it was enough for Cooper to call Joel S. Engel, his rival and head of AT&T’s cellular program. “Joel, I’m calling you from a cellular phone, a real cellular phone, a handheld, portable, real cellular phone,” Cooper said.

Cooper’s troll was a historic one. Bell Labs had been working on AMPS since the 1960s, and the system promised endless possibilities, including the possibility that countless people could place phone calls, over the air, on the same frequency without any interference. In fact, the Federal Communications Commission (FCC) set aside 40MHz of spectrum in 1974 for mobile technology, thereby carving out a specific lane for this type of wireless communications. The concept behind mobile technology was sound, but progress was slow.

Essentially, cellular technology divided geographic areas into – you guessed it – cells. Each cell hosts a base station, as well as a tower with an antenna on top. Depending on the technology, a cell tower can pick up a signal from up to 25 miles away. If the end user is on a call and travelling, the tower sending and receiving the signal can hand off the transmission to another tower as needed. (This process is called – you guessed it – a handoff.) This is why you’re able to talk on a mobile phone while driving down the highway and not drop a call. It isn’t perfect, but it’s a hell of a lot better than the best two-way radio.
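The handoff idea can be sketched in a few lines of Python. This is a toy model under loud assumptions: the towers sit at made-up mile markers along a straight highway, distance stands in for signal strength, and the phone simply sticks with the nearest tower inside the 25-mile range mentioned above. Real networks decide handoffs from measured signal quality, not distance, and all names here are hypothetical.

```python
from dataclasses import dataclass

MAX_RANGE_MILES = 25.0  # upper bound quoted in the article


@dataclass(frozen=True)
class Tower:
    name: str
    mile_marker: float  # hypothetical position along the highway


# Made-up example layout: three towers, 30 miles apart.
HIGHWAY_TOWERS = [Tower("A", 0.0), Tower("B", 30.0), Tower("C", 60.0)]


def best_tower(position, towers):
    """Return the nearest tower within range, or None if none can hear us."""
    in_range = [t for t in towers if abs(t.mile_marker - position) <= MAX_RANGE_MILES]
    return min(in_range, key=lambda t: abs(t.mile_marker - position), default=None)


def drive(positions, towers):
    """Simulate a call in a moving car; return (mile, old, new) for each handoff."""
    handoffs = []
    current = None
    for position in positions:
        candidate = best_tower(position, towers)
        if candidate is None:
            current = None  # out of coverage: the call would drop here
            continue
        if current is not None and candidate != current:
            handoffs.append((position, current.name, candidate.name))
        current = candidate
    return handoffs


# Drive from mile 0 to mile 60, checking coverage every 5 miles.
print(drive(range(0, 61, 5), HIGHWAY_TOWERS))
# [(20, 'A', 'B'), (50, 'B', 'C')]
```

In this toy run the call starts on tower A, moves to B at mile 20, then to C at mile 50, and never drops because the coverage areas overlap.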

The first mobile phones were not technology meant for the masses. The FCC approved a commercial model of the DynaTAC in 1983, and a year later, Motorola sold the device for $US3,995. (In 2017, that’s close to $US10,000 when adjusted for inflation.) Michael Douglas made the DynaTAC famous three years later, when his character, Gordon Gekko, brandished one in Wall Street.

Image: 20th Century Fox

In terms of mobile phones, we all know what happened in the ’90s and early Aughts. These two decades saw incremental but incredible improvements to cellular technology. The phones got smaller, and they got a lot cheaper. The networks got faster, and the service also got a lot cheaper. Whereas mobile phone service cost as much as a dollar a minute during the AMPS days, plans with hundreds of minutes were down to $US50 or $US60 a month by the early Aughts. Plus free nights and weekends!

But it was the improved data rates that changed how we used mobile phones most profoundly. The original, so-called 1G analogue technology behind AMPS was eventually supplanted by new digital standards that offered more efficient ways of encoding data, greater access to the wireless spectrum and, as a result, faster, more dependable connections. After the second generation of cellular connectivity, 2G, came the big breakthrough: Internet anywhere.

“With 3G, for the first time, you had a larger bandwidth and reasonable data rates to support meaningful experiences for the user, the idea that internet access would become possible arrived with 3G,” Bebek Behesthi, IEEE member and associate dean of the School of Engineering and Computing Sciences at New York Institute of Technology, told Gizmodo.

Behesthi helped develop 3G technology, which allowed data rates up to 3 megabits-per-second. The next generation would blow that out of the water, he explained, but there were also social consequences.

“With 4G, we’re looking at data rates up to 100 Mbps, already a 30-fold increase over 3G, and a much more integrated web,” Behesthi explained. “In terms of impact to consumers and to society, we have become much more tethered to our work and the outside world by having constant internet connectivity.”
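The jump from 3G to 4G is easy to check with a little arithmetic. The sketch below uses the peak rates quoted above (3 and 100 megabits per second). The 5-megabyte file size is an assumption picked for illustration, and real-world speeds are usually well below these peaks.

```python
RATE_3G_MBPS = 3.0    # peak 3G rate quoted in the article
RATE_4G_MBPS = 100.0  # peak 4G rate quoted in the article


def download_seconds(size_megabytes: float, rate_mbps: float) -> float:
    """Time to move a file at a given rate; 1 megabyte = 8 megabits."""
    return size_megabytes * 8 / rate_mbps


speedup = RATE_4G_MBPS / RATE_3G_MBPS
print(f"4G is about {speedup:.1f} times faster than 3G at peak rates")

song_mb = 5.0  # hypothetical file size, roughly one compressed song
print(f"3G: {download_seconds(song_mb, RATE_3G_MBPS):.1f} s")
print(f"4G: {download_seconds(song_mb, RATE_4G_MBPS):.1f} s")
```

At these peak rates the ratio works out to a little over 33, in line with the “30-fold” figure in the quote.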

The little handheld gadgets we now just call phones changed the way we communicated. The technology has changed the way we live. But in the midst of it all, more boutique wireless standards such as Wi-Fi as well as the internet of things started to change the way the world works.

The Wi-Fi mutiny

By the late ’90s, engineers had realised that wireless would transform everything very quickly. The technology wasn’t just about making phone calls from more places. Newly available bands of spectrum were opening up the possibility to send massive quantities of data over the air, and that idea upended the most basic concepts of how we stayed connected.

You didn’t need to be tethered to a phone line to connect to the internet. As early as 1988, industry visionaries realised that an FCC decision made it possible to create a new standard for wireless internet service. The Institute of Electrical and Electronics Engineers (IEEE) called this new standard 802.11, and by 1997, the organisation had established the basic framework for wireless fidelity, a clunky name that was eventually shortened to Wi-Fi. This idea turned into a world-changing revolution, and fittingly, Apple was one of the first companies to offer Wi-Fi connectivity in its computers. (Steve Jobs called the feature “AirPort”, for some reason.)

The beauty of Wi-Fi from day one was the fact that it operated in the “garbage bands” of the radio spectrum: the 2.4GHz UHF band and the 5GHz band. This is the same range that microwave ovens use to heat up food, and it became widely used for communication after cordless phones started using these bands. Wi-Fi gained most of its popularity under the 802.11b standard, which operates on the 2.4GHz band, although the newer 802.11ac standard is more popular now, since it can handle data transfer rates as fast as 1 gigabit per second. But 15 years ago, the concept of internet connectivity over the air at any speed was Earth-shattering.

“We stand at the brink of a transformation,” Wired’s Chris Anderson wrote of Wi-Fi in 2003. “It is a moment that echoes the birth of the Internet in the mid-’70s, when the radical pioneers of computer networking – machines talking to each other! – hijacked the telephone system with their first digital hellos.”

Anderson was not wrong. Wi-Fi was about to upend our very conception of connectivity. This idea that the internet could be everywhere would transform not only communication but also how humans understood the world. The sword-thrusting paragraph of that seminal Wired feature is worth quoting in full:

This time it is not wires but the air between them that is being transformed. Over the past three years, a wireless technology has arrived with the power to totally change the game. It’s a way to give the Internet wings without licenses, permission, or even fees. In a world where we’ve been conditioned to wait for cell phone carriers to bring us the future, this anarchy of the airwaves is as liberating as the first PCs – a street-level uprising with the power to change everything.

Crazy, right? That was less than 15 years ago. Anderson’s predictions were only partially true, though. Little did Wired realise that the internet and the technology that enabled connectivity would before too long become a battleground for security, free speech, and political responsibility. But at the time, the technology was revolutionary.

The internet of really cool things

While Wi-Fi was rapidly becoming the standard for wirelessly connecting to the internet, a number of other technologies emerged that offered a different type of communication. Instead of helping humans communicate with each other, this so-called Internet of Things enabled gadgets to talk to each other. The new standards that would govern these connections started appearing in the late ’90s, just as Wi-Fi was gaining mainstream popularity, and widespread adoption since then can only be described as chaotic.

The first IoT standard that took off is still the most popular: Bluetooth. Hilariously named after a medieval Scandinavian king who may or may not have had an actual blue tooth in his head, the close-range wireless standard found its origins in an unlikely partnership between Ericsson, Nokia, Intel, IBM and other researchers in 1997. The companies developed a new wireless standard that would let devices connect to each other locally. (Fun fact: Bluetooth was almost called personal-area-networking, or PAN, but that name was ruled out due to poor SEO.) Without the need for internet connectivity, this standard would open up an exciting new arena for wireless accessories – everything from keyboards and headphones to desktops and laptops – and change the way the entire world used gadgets.

Bluetooth is now in its fifth generation, and its range has stretched from about 9m to as much as 300m in the latest version. Like Wi-Fi before it, the technology operates on the 2.4GHz band of spectrum and also sucks up a fair amount of power to do so. This is, in part, what later led to the development of very low power, close range wireless standards like Zigbee and Z-Wave. Both of these protocols emerged in the 2000s and are now widely used for home automation technology like connected lightbulbs, smart locks, and security cameras. As Wi-Fi hardware becomes more compact and low-energy, however, it’s starting to be used in this space more and more.

On top of that, new wireless communication protocols like the one-way radio-frequency identification (RFID) and near-field communications (NFC), which is based on RFID technology but can both send and receive data, hit the market. Unlike Wi-Fi and Bluetooth, these wireless technologies can operate on a tiny trickle of electricity. NFC is now standard in most new smartphones and enables quick, wireless file transfers between devices. It’s also what powers most modern wireless payment systems. (Fun fact #2: one of the first appearances of NFC technology was in a 1997 Star Wars toy.) RFID, meanwhile, can be used for anything from tracking inventory in retail stores to helping Disney track guests as they wander through its amusement parks.

If you’ve read anything about the burgeoning popularity of IoT devices, you’ll know that security is a major concern. Generally speaking, the technology is so new and new devices are so often released into the wild without proper testing that hackers just love to find new ways to take over wireless networks by exploiting a vulnerability in an unsecured device. This is exactly what happened in late 2016, when an IoT exploit succeeded in shutting down half of America’s internet. In the sense that Wi-Fi was the wild west of wireless 15 years ago, the Internet of Things is a veritable shitshow in the late 2010s.

The next big things

In more ways than one, this is only the beginning of the wireless takeover. Telegraphy and radio, in many respects, were just the first steps. Wireless technologies have also co-opted other methods of transmitting information and even electricity through the air. The use of infrared light in gadgets like remote controls is old hat, but companies like Facebook and SpaceX are currently experimenting with lasers to beam internet access from satellites down to Earth’s surface. This so-called free-space optical communication is still very expensive, but it might supplant radio waves for wireless communications since it can handle such vast quantities of data.

Wireless power, however, is already hitting the mainstream. But the current state of the technology is limited to very close ranges. Right now, the Qi specification governs how hundreds of different devices use electromagnetic induction to charge gadgets like smartphones, such as the Samsung Galaxy S8; smartwatches, like the Apple Watch; and power tools, like Bosch’s professional line-up. In each of these examples, you have to place the device on top of a charging pad to soak up that sweet wireless electricity. But you don’t actually need to plug anything in.

The technology will surely scale up in the years to come. Some companies are already getting pretty crazy with wireless power. In South Korea, for example, one city is testing out electric buses that receive wireless power from cables laid underneath the road’s surface using Shaped Magnetic Field in Resonance (SMFIR) technology.

So suddenly, finally, we’re finding our way back into that mad scientist territory. Tesla would be thrilled. Who knows when we might build some sort of giant coil that can blast electricity across entire oceans. It might never happen.

If you’d asked any pedestrian in the 20th century if we would one day be able to sit in a coffee shop with a pocket computer and talk to anyone in the world, without plugging into anything, they’d call you crazy. If you mentioned that you could charge the phone by placing it on the table, they’d call you crazy. If you suggested that the communications were being sent to space and back down to Earth with lasers, they’d call the police. And yet, here we are.