Facebook has a problem. Not the one where they admitted to being a megaphone for propaganda and psy-ops. Or the one where they narced on at-risk Australian teens. No, today’s news concerns how the social giant/massive data collection scheme has (increasingly) become an unwilling platform for users to broadcast violent crimes, sexual acts, child exploitation and suicide.

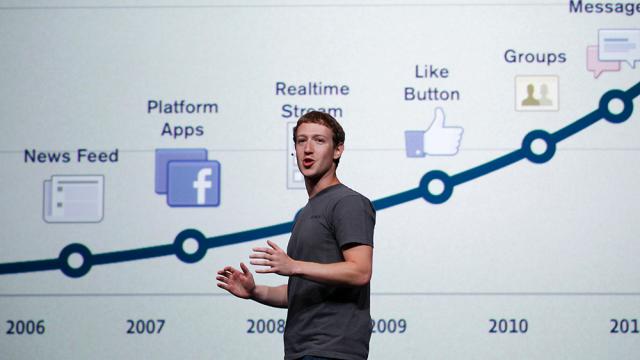

Image: AP Photo/Paul Sakuma

In a post earlier today, Mark Zuckerberg promised that, over the next year, Facebook will be “adding 3,000 people to our community operations team around the world — on top of the 4,500 we have today — to review the millions of reports we get every week, and improve the process for doing it quickly”. The 32-year-old CEO also promised to build additional reporting tools so Facebook users can better assist the company in taking down offensive videos.

On its face, it’s laudable that Facebook would tackle this issue by growing its community operations team by roughly two-thirds, especially since there’s some evidence suggesting suicides can lead to a “contagion” of subsequent suicides. Noticeably absent from Zuckerberg’s post, however, are any concrete details regarding the employment status, training or support these 3000 new team members will receive.

Reporting over the years has revealed that many of the largest companies outsource their moderation teams, letting low-paid international workers, who may not have intimate knowledge of US social mores, view the very worst posts, day in and day out. Viewing murders and suicides for months or years on end can cause moderators to develop very real psychological problems, and several former moderators I’ve spoken to have claimed to suffer from PTSD after sifting through troves of violent content and hate speech, or after becoming the subject of harassment by users themselves.

If, as may be the case, the workers Facebook intends to hire for the sole purpose of removing its most upsetting videos are contractors rather than staffers, the company may not be obligated to provide health insurance, let alone counselling.

Facebook, like many social networks, has two sets of rules: the broad ones users are privy to, and the granular ones moderators are told to enforce. Opacity in policy and opacity in training both lead to incidents like Facebook’s memorable gaffe of pulling down a Pulitzer-winning photograph from the Vietnam War, and only restoring it once public outcry reached a crescendo. Graphic murder and suicide are intuitively upsetting in any culture, but beyond these most extreme examples, training moderators to be effective takes time and resources that most companies are not interested in investing.

A Facebook spokesperson told Gizmodo that they “don’t have any more details to share beyond Mark’s post to pass on at this time”, but that the company offers support to those reviewing the platform’s content. On balance, having fewer avenues for broadcasting these sorts of videos is a positive, but remember it’s likely at the expense of 7500 other people’s mental health somewhere halfway across the world.