Intel has announced that it’s moving away from its current “tick-tock” chip production cycle and instead shifting to a three-step development process that will “lengthen the amount of time [available to] utilise… process technologies.”

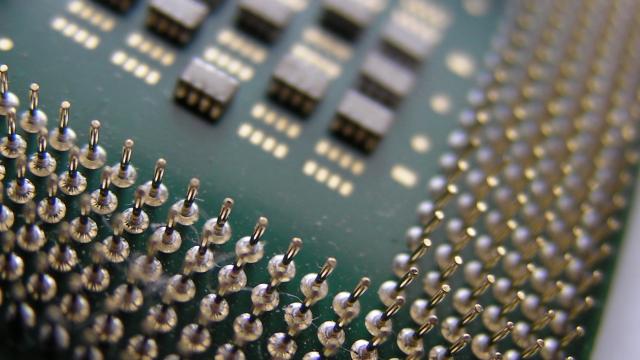

Image by Sh4rp_i

For years now, Intel has run its chip business on a ‘tick-tock’ basis: First it develops a new manufacturing technique in one product cycle (tick!), then it upgrades its microprocessors in the next (tock!).

But recently it’s been struggling to keep pace. Intel’s last process advance was the move to 14-nanometre transistors in its Broadwell processors, and under the tick-tock cycle we’d have expected the next shrink in 2016. But last year Intel was forced to announce that its 2016 chip line-up, called Kaby Lake, would continue to use the 14-nanometre process. Instead, the next shrink would arrive in the second half of 2017, when Intel said it would shift to transistors that measure just 10 nanometres in its Cannonlake chips.

Now, in an annual report filing, Intel has officially announced that it’s moving away from the tick-tock timing. Instead, it will run on a three-step development process that it refers to as “Process-Architecture-Optimization.” From the filing:

As part of our R&D efforts, we plan to introduce a new Intel Core microarchitecture for desktops, notebooks (including Ultrabook devices and 2 in 1 systems), and Intel Xeon processors on a regular cadence. We expect to lengthen the amount of time we will utilise our 14nm and our next generation 10nm process technologies, further optimising our products and process technologies while meeting the yearly market cadence for product introductions.

While it doesn’t explicitly refer to timescales, the news suggests that Moore’s Law — which states that the number of transistors on an integrated circuit doubles every two years — is stuttering at Intel. The size of the transistor, of course, dictates the number you can squeeze onto a chip. Indeed, last summer Intel’s CEO Brian Krzanich mused that “the last two technology transitions have signalled that our cadence today is closer to 2.5 years than two.”
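To put rough numbers on that slowdown, here is a back-of-the-envelope sketch in Python. It is not from Intel's filing: the function names are made up for illustration, the ten-year window is an arbitrary choice, and the density figure assumes idealised scaling in which transistor area shrinks with the square of the node name, which real processes only approximate.

```python
def growth_factor(years: float, doubling_period: float) -> float:
    """Growth in transistor count after `years`, assuming the count
    doubles once every `doubling_period` years (hypothetical helper)."""
    return 2 ** (years / doubling_period)


def ideal_density_gain(old_nm: float, new_nm: float) -> float:
    """Idealised density gain from a shrink, assuming transistor area
    scales with the square of the node size (an assumption, not a
    measured figure)."""
    return (old_nm / new_nm) ** 2


if __name__ == "__main__":
    # Compare a two-year cadence with the 2.5-year cadence Krzanich described.
    for period in (2.0, 2.5):
        factor = growth_factor(10, period)
        print(f"Doubling every {period} years: x{factor:.0f} over a decade")

    # Idealised gain from the planned 14 nm to 10 nm shrink.
    gain = ideal_density_gain(14, 10)
    print(f"14 nm to 10 nm: roughly x{gain:.2f} density (idealised)")
```

Under those assumptions, half a year of extra delay per step halves the gain over a decade (16 times rather than 32 times), while a single 14-to-10-nanometre shrink works out to roughly one doubling.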

All of this is, of course, the result of the original “tick” becoming increasingly difficult to bring about: We’re fast approaching the limit of what can be done with conventional silicon. IBM has announced that it can create 7-nanometre transistors, but that relies on a new technique using silicon-germanium in the manufacturing process rather than pure silicon, and at any rate the process is a long way off being fully commercialized.

The truth is that we may just have to start waiting a little longer for faster silicon, for now at least.

[Intel via The Motley Fool via AnandTech]