Artificial intelligence researchers at Google DeepMind are celebrating a major breakthrough that has been pursued for more than 20 years: the team taught a computer program the ancient game of Go, which has long been considered the most challenging game for an artificial intelligence to master. Not only can DeepMind’s program play Go, it’s actually very good at it.

Google DeepMind developed the computer program AlphaGo specifically to beat professional human players at Go. The team challenged three-time European Go champion Fan Hui to a five-game match on a full-sized board, and AlphaGo won all five games. It was the first time a computer program had beaten a professional Go player without a handicap. The team announced the breakthrough in a Nature article published today.

Coincidentally, just one day before the Google DeepMind team announced its scientific achievement, Facebook CEO Mark Zuckerberg wrote a public Facebook post saying that his AI team is “getting close” to achieving the same thing. He wrote that “the researcher who works on this, Yuandong Tian, sits about 20 feet from [his] desk”, and added, “I love having our AI team right near me so I can learn from what they’re working on.”

Facebook’s competitor to Google’s AlphaGo is called Darkforest. Yuandong Tian made the name public in November, when he submitted a paper to the International Conference on Learning Representations.

Mark Zuckerberg posted this video illustrating Facebook’s research.

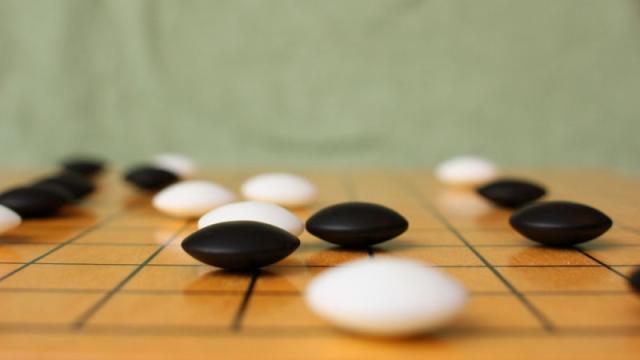

The history of Go dates back some 2,500 years to ancient China. The game is played by placing black or white stones on a 19 x 19 grid. When a player completely surrounds a group of the opponent’s stones, those stones are captured and removed from the board. The goal of the game is to control more of the board than the opponent. Go is so difficult for computers because there are an estimated 10 to the power of 700 possible variations of the game. By comparison, chess has only 10 to the power of 60 possible scenarios.
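To make the capture rule concrete, here is a minimal Python sketch, assuming a simple list-of-lists board. The names `new_board`, `group_and_liberties` and `play` are hypothetical and are not taken from AlphaGo or Darkforest. A group of connected stones is captured once it has no “liberties”, meaning no empty points next to it. This sketch deliberately ignores the ko and suicide rules as well as scoring.

```python
from typing import List, Set, Tuple

# Hypothetical board representation: a square grid of one-character strings.
EMPTY, BLACK, WHITE = ".", "B", "W"
Board = List[List[str]]
Point = Tuple[int, int]
NEIGHBORS = ((1, 0), (-1, 0), (0, 1), (0, -1))  # orthogonal neighbours only


def new_board(size: int = 19) -> Board:
    """Create an empty size x size board (19 x 19 for a full-sized game)."""
    return [[EMPTY] * size for _ in range(size)]


def group_and_liberties(board: Board, row: int, col: int) -> Tuple[Set[Point], Set[Point]]:
    """Flood-fill the connected group containing (row, col) and collect its
    liberties: the empty points orthogonally adjacent to any stone in it."""
    color = board[row][col]
    size = len(board)
    group: Set[Point] = set()
    liberties: Set[Point] = set()
    stack = [(row, col)]
    while stack:
        r, c = stack.pop()
        if (r, c) in group:
            continue
        group.add((r, c))
        for dr, dc in NEIGHBORS:
            nr, nc = r + dr, c + dc
            if 0 <= nr < size and 0 <= nc < size:
                if board[nr][nc] == EMPTY:
                    liberties.add((nr, nc))
                elif board[nr][nc] == color:
                    stack.append((nr, nc))
    return group, liberties


def play(board: Board, row: int, col: int, color: str) -> int:
    """Place a stone, remove adjacent opponent groups left without liberties,
    and return the number of captured stones. Ko and suicide are not checked."""
    if board[row][col] != EMPTY:
        raise ValueError("point is already occupied")
    board[row][col] = color
    opponent = WHITE if color == BLACK else BLACK
    size = len(board)
    captured = 0
    for dr, dc in NEIGHBORS:
        nr, nc = row + dr, col + dc
        if 0 <= nr < size and 0 <= nc < size and board[nr][nc] == opponent:
            group, liberties = group_and_liberties(board, nr, nc)
            if not liberties:
                for gr, gc in group:
                    board[gr][gc] = EMPTY
                captured += len(group)
    return captured


if __name__ == "__main__":
    # A lone white stone surrounded on three sides; Black closes the fourth.
    board = new_board(5)
    board[1][1] = WHITE
    for r, c in [(0, 1), (1, 0), (1, 2)]:
        board[r][c] = BLACK
    print(play(board, 2, 1, BLACK))  # prints 1
```

The rule is simple to code. What makes Go hard for computers is not checking captures but choosing among the enormous number of possible move sequences described above.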

The breakthrough achieved by Google DeepMind is important for several reasons. Broadly speaking, it will change the way computers search for a sequence of actions. That helps AI programs work out how to get from a starting point to a goal by reasoning through a chain of logical steps. For the average person, this could matter in many different ways, because many of the requests people make of an artificial intelligence require exactly this kind of step-by-step reasoning. More specifically, facial-recognition processing and predictive search are the easiest advances to point to.

Following this announcement, the Google DeepMind team has challenged Lee Sedol of South Korea, who has long been considered the greatest Go player of the modern era, to a match scheduled for March 2016.

The Lee Sedol vs AlphaGo match will have many similarities to the famous 1996 and 1997 chess matches between world champion Garry Kasparov and IBM’s Deep Blue computer. In the 1997 rematch, Deep Blue became the first computer to defeat a reigning world chess champion in a match. In the upcoming match, AlphaGo will have to search a much larger game tree than Deep Blue did, with many more possible moves to consider at each turn. Keep going at it! This is some fun competition.

Top image via Flickr