When will a neural network know who Donald Trump is? How long until one can come up with a joke on its own? How about recognise Yoda?

It may not be much longer, with neural network models progressing at breakneck speed. But there’s still a long way to go, as demonstrated by an experiment by Samim Winiger, who usually goes by just Samim, a self-described “narrative engineer” who experiments with AI and machine learning to tell stories.

Samim recently asked a neural net to caption a series of pop culture videos and clips from movies to illustrate the huge variance in how accurate these algorithms are, producing some amazingly stupid and funny machine-written descriptions of Kanye West, Luke Skywalker, and even Big Dog.

This summer we’ve heard a lot about machine learning: We saw neural networks dream, converse with surprising ease, and copy the work of famous artists. Thanks to a burgeoning community of people testing and publishing their experiments online on platforms like GitXiv, there are also plenty of examples of how advanced these algorithms already are.

But Samim wasn’t just trying to show how good neural networks are at recognising images; he wanted to show their biggest screw-ups, too. Samim is interested in humour, specifically what he calls “computational comedy.” It’s the kind of comedy that occurs when AI makes mistakes, but Samim points out it can also help humans understand AI. Earlier this year he built a robot that learned how to write its own inscrutable TED talk using thousands of real talks as input. He also built one that writes similarly nonsensical Obama speeches.

“Humour and comedy are a great canvas for education,” he told me over email. “Especially as a system’s ‘near miss’ is a great way to display research targets, current levels of advances and societal implications of technology.”

His most recent computational comedy project popped up on his blog last week. In it, he set up an experiment that tested how well neural networks could caption videos from pop culture. He used an open source model called NeuralTalk, which implements image-captioning approaches proposed by researchers at Google and Stanford. It looks at an image and describes it with a brief caption.

That’s a more complex problem than our naturally verbose brains might think: the network must be trained in natural language not only to identify what’s in an image, but also to describe the relationships between multiple elements of a scene using structured sentences.

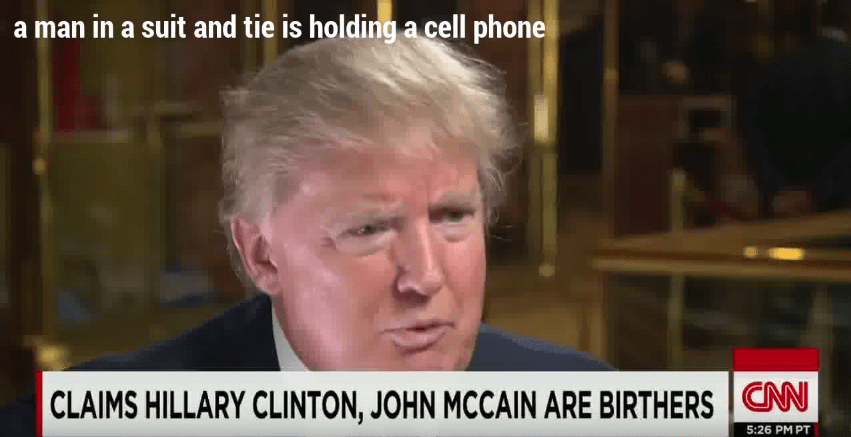

It’s the forefront of machine learning, and while it’s fascinating and already very advanced, it also has a long way to go, as Samim points out in his post about the project. He produced two captioned video montages showing the success stories, where NeuralTalk was surprisingly good at correctly identifying everything from birds as birds to snowboarders as snowboarders to Donald Trump as a person (debatable), alongside the less successful captions.

For example, Luke Skywalker talking to Yoda? NeuralTalk captioned that famous scene thusly:

Does it recognise its fellow machine, Big Dog? Nah.

Or just a bird? Surely, a bird is simple?

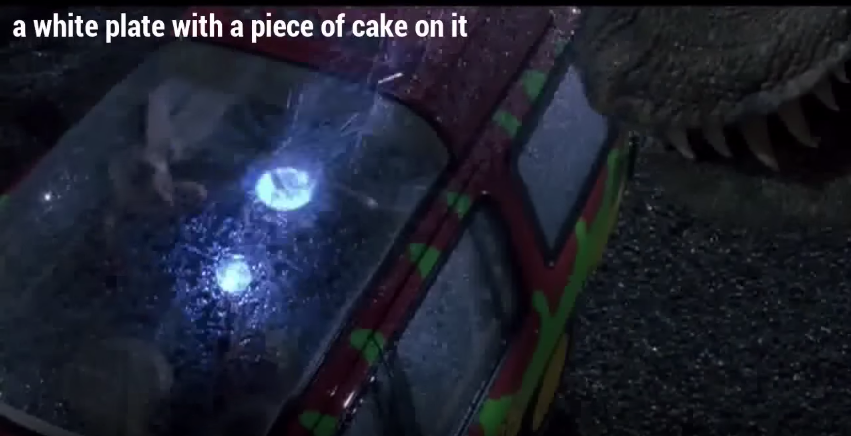

OK, how about a terrifying and iconic scene from Jurassic Park?

Of course, there are plenty of examples where NeuralTalk correctly captioned a scene. But the absurdity of the incorrect captions is what really matters here: that the network can be so correct sometimes, yet so wrong other times, gives us a glimpse into the complex maths behind this emerging form of AI. Neural network models must be “taught” to recognise objects and categories in images. Just as Deep Dream imagined certain types of hallucinations based on what Google’s engineers had taught it, other neural networks are only experts when they have been schooled on the subject at hand.

Computational comedy isn’t just about making us chuckle at the pitfalls of AI. It’s also about a form of intelligence that often gets ignored by researchers. While many scientists will test their work against standard metrics having to do with recognising numbers or words, the subtle comprehension of jokes, creativity, and cultural references sits on another plane.

So the Turing Test is a bit of a simplistic way to judge AI, as Samim puts it. “Humour is a much harder metric to achieve — fundamentally human and engaging our cognitive abilities on many levels,” he says. So his Law of Computational Comedy makes a crucial addendum: “Any sufficiently advanced technology will develop comedy.” In the meantime, we’ll make our own at its expense.